Small autonomous robots are delivering groceries and takeout food orders at airports, on college campuses, and in urban neighborhoods.

The robots typically deliver small orders—two or three grocery or takeout bags—within a range of several miles of the food establishment, and they travel primarily on sidewalks and crosswalks at slow, pedestrian-like speeds.

Multiple Vision Modalities

Numerous companies offer this robotic delivery service, which relies on machine vision technologies for such tasks as navigation and obstacle avoidance. “Many sensory modalities are essentially used together with the aid of a sensor fusion system. With regards to the vision technology itself, either 2D or 3D cameras can be applied,” explains Yulin Wang, Technology Analyst at IDTechEx (Cambridge, UK; www.idtechex.com). “As each type of sensor has its benefits and drawbacks, many sensors (e.g., cameras, LiDAR, etc.) and software (e.g., image processing, machine vision, etc.) are used in combination to achieve a robust autonomous system,” he adds.
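Wang does not name a specific fusion algorithm. One common approach is inverse-variance weighting. Suppose two sensors report independent, roughly Gaussian distance estimates to the same obstacle. Each estimate is weighted by how precise it is, so the more reliable sensor dominates. The minimal sketch below illustrates this. The function name, sample readings, and variances are hypothetical.

```python
def fuse_estimates(estimates):
    """Combine independent (value, variance) pairs by inverse-variance weighting.

    Returns (fused_value, fused_variance). Assumes independent, roughly
    Gaussian errors; this is an illustrative choice, not a vendor's method.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total

# Hypothetical readings of the distance (m) to the same obstacle.
camera = (4.2, 0.25)  # camera-based depth estimate: noisier
lidar = (4.0, 0.01)   # LiDAR range: more precise
distance, variance = fuse_estimates([camera, lidar])
print(f"{distance:.3f} m, variance {variance:.4f}")
```

With these made-up numbers, the fused estimate lands close to the LiDAR value because its variance is 25 times smaller. Production systems typically use Kalman-style filters, which also track how estimates evolve over time.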

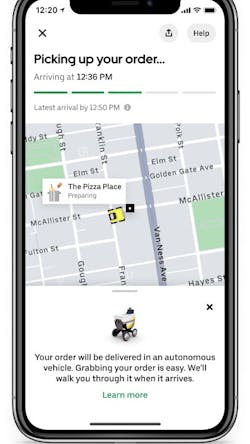

The robotic delivery service typically works like this: A customer places an online restaurant or grocery order via an app and then uses the app to unlock the robot’s storage compartment once the order arrives.
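The app-driven workflow can be modeled as a small state machine. In this model, the compartment opens only after the robot has arrived, and only for the customer who placed the order. The state names, transition table, and `DeliveryOrder` class below are hypothetical illustrations, not any vendor's actual API.

```python
from enum import Enum, auto

class OrderState(Enum):
    PLACED = auto()
    LOADED = auto()
    EN_ROUTE = auto()
    ARRIVED = auto()
    UNLOCKED = auto()
    COMPLETED = auto()

# Hypothetical allowed transitions; real services define their own.
TRANSITIONS = {
    OrderState.PLACED: {OrderState.LOADED},
    OrderState.LOADED: {OrderState.EN_ROUTE},
    OrderState.EN_ROUTE: {OrderState.ARRIVED},
    OrderState.ARRIVED: {OrderState.UNLOCKED},
    OrderState.UNLOCKED: {OrderState.COMPLETED},
    OrderState.COMPLETED: set(),
}

class DeliveryOrder:
    def __init__(self, order_id, customer_id):
        self.order_id = order_id
        self.customer_id = customer_id
        self.state = OrderState.PLACED

    def advance(self, new_state):
        """Move to new_state, rejecting any transition not in the table."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot go from {self.state.name} to {new_state.name}")
        self.state = new_state

    def unlock(self, requesting_customer_id):
        """Open the compartment only for the ordering customer, and only on arrival."""
        if requesting_customer_id != self.customer_id:
            raise PermissionError("unlock requested by a different customer")
        self.advance(OrderState.UNLOCKED)

order = DeliveryOrder("A17", "cust-42")
for step in (OrderState.LOADED, OrderState.EN_ROUTE, OrderState.ARRIVED):
    order.advance(step)
order.unlock("cust-42")
print(order.state.name)
```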

Most of the robots operate at Level 3 autonomy, meaning that humans monitor them remotely. Each remote operator is responsible for more than one robot at a time, “and the time interval between each intervention could be around 5 to 8 hours,” Wang says. He also notes that the interval varies depending on a robot’s working conditions. Humans often intervene when an error is reported, “and this error report could happen at any time from picking up food to delivering food,” he explains.
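Wang's figures allow a rough estimate of operator workload. The back-of-the-envelope sketch below assumes that interventions are spread evenly over time. It also uses hypothetical values for how long each intervention takes and what share of each hour an operator can spend on them. Under those assumptions, the intervention interval sets how many robots one person can supervise.

```python
def interventions_per_hour(robots, hours_between_interventions):
    """Average number of interventions an operator handles per hour."""
    return robots / hours_between_interventions

def max_robots_per_operator(hours_between_interventions,
                            minutes_per_intervention,
                            target_utilization=0.5):
    """Largest fleet that keeps the operator busy no more than target_utilization
    of each hour. minutes_per_intervention and target_utilization are assumptions."""
    busy_minutes_per_robot_hour = minutes_per_intervention / hours_between_interventions
    return int(target_utilization * 60 // busy_minutes_per_robot_hour)

for interval in (5, 8):  # Wang's 5-to-8-hour range
    print(interval, "h:",
          interventions_per_hour(10, interval), "interventions/h for 10 robots;",
          max_robots_per_operator(interval, minutes_per_intervention=10), "robots max")
```

Assume a hypothetical 10-minute intervention and an operator who is busy half of each hour. A 5-hour interval then supports about 15 robots per operator, and an 8-hour interval about 24.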

How the Vision System Works

The machine vision technology used in these delivery robots typically follows a similar process.

After one or more sensors capture images, the images are converted into digital data. That data is then processed by a combination of hardware, such as a CPU or digital processing engine, and image processing software that uses algorithms to extract useful information.
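The capture, digitize, and extract sequence can be illustrated with a toy example. The sketch below makes several simplifying assumptions. The frame is a tiny grayscale image, and an 8-bit quantization step stands in for digitization. In place of the trained detectors real robots use, a deliberately simple rule treats dark pixels as an obstacle. All function names are hypothetical.

```python
def quantize(analog_row, bits=8):
    """Map analog intensities in [0.0, 1.0] to integer pixel values (the digital step)."""
    levels = (1 << bits) - 1
    return [round(min(max(v, 0.0), 1.0) * levels) for v in analog_row]

def obstacle_mask(image, threshold):
    """Toy rule: mark pixels darker than threshold as candidate obstacle pixels."""
    return [[1 if px < threshold else 0 for px in row] for row in image]

def bounding_box(mask):
    """Return (top, left, bottom, right) of all marked pixels, or None if none."""
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return min(rows), min(cols), max(rows), max(cols)

# A bright sidewalk with a dark 2x2 object in the middle (hypothetical data).
analog = [
    [0.9, 0.9, 0.9, 0.9],
    [0.9, 0.1, 0.2, 0.9],
    [0.9, 0.1, 0.1, 0.9],
    [0.9, 0.9, 0.9, 0.9],
]
image = [quantize(row) for row in analog]
print(bounding_box(obstacle_mask(image, threshold=100)))  # (1, 1, 2, 2)
```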

“Depending on the specific needs, the processing results could be (a) Distance between robots and obstacles; (b) classification of the obstacle/object; (c) 3D reconstruction of the environments with the aid of other sensors (e.g., LiDAR)— navigation and localization,” Wang explains. This output then informs the movement of the robots.
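Wang mentions 2D or 3D cameras but does not say how distance is computed. Assuming a calibrated, rectified stereo camera pair, one common way to produce output (a) is triangulation: depth Z = f × B / d. Here f is the focal length in pixels, B the baseline between the two cameras, and d the disparity of a matched point. The focal length, baseline, and disparity values below are hypothetical.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth in metres of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

def depth_error(focal_px, baseline_m, depth_m, disparity_error_px=1.0):
    """First-order depth uncertainty: dZ ~ Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px

z = stereo_depth(focal_px=700, baseline_m=0.12, disparity_px=21)
print(f"obstacle at {z:.2f} m, +/- {depth_error(700, 0.12, z):.2f} m per pixel of disparity error")
```

Because the error term grows with Z squared, stereo depth degrades quickly with distance. That is one plausible reason to pair cameras with LiDAR at longer ranges.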

Wang notes that SLAM (simultaneous localization and mapping) software, which maps an unknown environment while tracking the robot’s position within it, is often used with machine vision in food delivery robots.
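A full SLAM system estimates the robot's pose and the map together, which is too much for a short example. The sketch below covers only the mapping half and assumes the robot's position is already known. It updates a one-dimensional log-odds occupancy grid from forward-facing range readings. The sensor-model probabilities (0.7 and 0.3) and the corridor layout are assumptions.

```python
import math

# Assumed inverse sensor model, expressed as log-odds increments.
L_OCC = math.log(0.7 / 0.3)   # added to the cell where the beam hit something
L_FREE = math.log(0.3 / 0.7)  # added to cells the beam passed through

def update_grid(log_odds, robot_cell, range_cells):
    """Update a 1D occupancy grid from one forward-facing range reading."""
    hit = robot_cell + range_cells
    for cell in range(robot_cell, min(hit, len(log_odds))):
        log_odds[cell] += L_FREE
    if hit < len(log_odds):
        log_odds[hit] += L_OCC

def probabilities(log_odds):
    """Convert log-odds back to occupancy probabilities."""
    return [1.0 - 1.0 / (1.0 + math.exp(value)) for value in log_odds]

grid = [0.0] * 10  # 0.0 log-odds = 50% (unknown)
# The robot advances one cell per step; a wall sits at cell 7.
for robot_cell in range(3):
    update_grid(grid, robot_cell, 7 - robot_cell)
print([round(p, 2) for p in probabilities(grid)])
```

Repeated observations push the wall cell toward "occupied" and the traversed cells toward "free." Cells beyond the wall stay at 50% because the sensor never saw them.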

For example, Serve Robotics (San Francisco, CA, USA; www.serverobotics.com/level-4-autonomy) says that its robots “are equipped with an extensive array of technologies that ensure the highest degree of safety by utilizing multiple layers of redundant systems for critical navigation functions. This includes multiple sensor modalities—active sensors such as LiDAR and ultrasonics, as well as passive sensors such as cameras—to navigate safely on busy city sidewalks.” In a January 2023 news release, the company said its latest-generation robot is capable of Level 4 autonomy, meaning that the robots maneuver with little or no human intervention.
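Serve does not publish how its redundant layers are arbitrated. The hypothetical sketch below shows one conservative policy. The controller stops if too few sensors are healthy. Otherwise, it acts on the nearest obstacle reported by any healthy sensor, so an object one sensor misses can still be caught by the others. The `RangeReading` type, thresholds, and policy are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass
class RangeReading:
    sensor: str         # e.g. "lidar", "ultrasonic_front", "camera_depth" (hypothetical names)
    distance_m: float   # nearest obstacle reported by this sensor
    healthy: bool       # False if the sensor reported a fault or timed out

def safe_to_proceed(readings, stop_distance_m=1.0, min_healthy=2):
    """Conservative arbitration across redundant sensors.

    Stop (return False) if fewer than min_healthy sensors are working;
    otherwise proceed only if the closest obstacle any healthy sensor
    reports is farther than stop_distance_m.
    """
    healthy = [r for r in readings if r.healthy]
    if len(healthy) < min_healthy:
        return False
    nearest = min(r.distance_m for r in healthy)
    return nearest > stop_distance_m

readings = [
    RangeReading("lidar", 3.0, True),
    RangeReading("ultrasonic_front", 0.8, True),  # low object the LiDAR may miss
    RangeReading("camera_depth", 2.9, True),
]
print(safe_to_proceed(readings))
```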

The company uses the OS1 LiDAR sensor from Ouster (San Francisco, CA, USA; https://ouster.com/). As Ouster explains, “The digital LiDAR is fused into the robot’s autonomy stack to locate its precise position and simultaneously generate a real-time 3D map of its surrounding environment so that it can navigate more safely and efficiently on city sidewalks alongside pedestrians and other road users.”
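A spinning LiDAR returns a range for each beam at a known azimuth and elevation. Before a scan can feed localization or mapping, each return is typically converted to a 3D point. The sketch below uses plain spherical-to-Cartesian geometry. It is not Ouster's SDK, and it ignores the sensor-specific beam offsets and calibration a real driver applies. The scan format is an assumption.

```python
import math

def to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert one LiDAR return to sensor-frame (x, y, z) in metres."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the x-y plane
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            range_m * math.sin(el))

def scan_to_points(scan):
    """scan: iterable of (range_m, azimuth_deg, elevation_deg).
    A range of 0.0 is treated as 'no return' and dropped (an assumed convention)."""
    return [to_xyz(r, az, el) for r, az, el in scan if r > 0.0]

# Hypothetical returns: a wall ahead, a curb to the left, and one beam with no echo.
scan = [(5.0, 0.0, 0.0), (2.0, 90.0, -10.0), (0.0, 45.0, 0.0)]
for point in scan_to_points(scan):
    print(tuple(round(v, 3) for v in point))
```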

Starship Technologies (San Francisco, CA, USA; www.starship.xyz), which also says it operates at Level 4 autonomy, uses a combination of technologies as well. “Using 12 cameras, ultrasonic sensors, radar, neural networks, and other technology, the robots can detect obstacles, including animals, pedestrians/cyclists, and other delivery robots,” the company says on its website.
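Ultrasonic rangefinders generally measure distance by time of flight. The sensor emits a pulse and times the echo. Because the sound travels out and back, distance = speed of sound × round-trip time / 2. The sketch below uses the common linear approximation for the speed of sound in air, about 331.3 + 0.606 × T m/s with T in °C. The function names and sample timing are hypothetical.

```python
def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c

def echo_distance(round_trip_s, temp_c=20.0):
    """Distance (m) to the reflecting object; the pulse travels there and back."""
    return speed_of_sound(temp_c) * round_trip_s / 2.0

# The same hypothetical 10 ms echo on a mild day and on a cold day.
print(f"{echo_distance(0.010, 20.0):.3f} m at 20 C")
print(f"{echo_distance(0.010, -10.0):.3f} m at -10 C")
```

Temperature matters outdoors. If the sensor assumes 20 °C, the same 10 ms echo measured at -10 °C corresponds to an object about 9 cm closer than reported.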

Another company, Ottonomy.IO (Brooklyn, NY, USA; https://ottonomy.io/), says its Ottobots “use a combination of multimodal sensors (specifically 3D LiDAR and RGB cameras) optimized for hardware running the mapping and localization pipelines in real time. Ottonomy's navigation maps combine both metric and semantic distance allowing for fully automated and highly accurate location delivery.”
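Ottonomy does not describe its map format. To illustrate what combining metric and semantic information can mean, the hypothetical sketch below stores metric coordinates for each map node alongside semantic labels such as a gate number. A delivery can then be requested by label while the route is planned by distance, here with Dijkstra's algorithm. The node names, coordinates, and labels are invented for the example.

```python
import heapq
import math

# Hypothetical airport map: node -> (x, y) in metres.
NODES = {
    "dock": (0.0, 0.0),
    "corridor": (20.0, 0.0),
    "gate_b12": (20.0, 15.0),
    "food_court": (40.0, 0.0),
}
# Semantic labels attached to nodes.
LABELS = {"gate_b12": {"gate", "B12"}, "food_court": {"restaurant"}}
# Traversable connections between nodes.
EDGES = {
    "dock": ["corridor"],
    "corridor": ["dock", "gate_b12", "food_court"],
    "gate_b12": ["corridor"],
    "food_court": ["corridor"],
}

def metric(a, b):
    """Euclidean distance between two nodes in metres."""
    (x1, y1), (x2, y2) = NODES[a], NODES[b]
    return math.hypot(x2 - x1, y2 - y1)

def route_to_label(start, label):
    """Dijkstra over the metric graph, stopping at the nearest node carrying label.
    Returns (distance_m, path) or None if no node has the label."""
    queue = [(0.0, start, [start])]
    seen = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node in seen:
            continue
        seen.add(node)
        if label in LABELS.get(node, set()):
            return dist, path
        for nxt in EDGES[node]:
            if nxt not in seen:
                heapq.heappush(queue, (dist + metric(node, nxt), nxt, path + [nxt]))
    return None

print(route_to_label("dock", "B12"))
```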

Partnerships in the Market

To attract consumers' attention and win customers, competing companies are rolling out new functionality and inking partnerships with food-delivery companies.

For example, in January 2023 Ottonomy.IO unveiled a new robot, Ottobot Yeti, with an automated package delivery mechanism that allows it to drop off takeout food or other packages directly into a locker. The robot can maneuver through crowds both indoors and outdoors.

In 2022, the company piloted an earlier version of its robotic service in which humans unlocked the robot’s storage compartment at three airports: Cincinnati/Northern Kentucky International Airport (CVG), Rome Fiumicino Airport (FCO), and Pittsburgh International Airport (PIT).

Serve Robotics also launched a pilot food delivery service in Los Angeles, California, USA, in 2022 through a partnership with Uber Technologies (San Francisco, CA, USA; www.uber.com/). Customers order food through the Uber Eats app, which also allows them to open the robot’s storage compartment and retrieve their food.

Serve Robotics was spun out of Postmates (San Francisco, CA, USA; https://postmates.com/), a delivery service that Uber acquired in 2020.

Launched in 2014, Starship operates robotic delivery services on college campuses and has launched pilot projects in numerous cities, including Chicago, Illinois, USA; Pleasanton, California, USA; Espoo, Finland; Cambridgeshire, UK; and Northamptonshire, UK. It delivers takeout restaurant meals and groceries. Campuses where it delivers orders include the University of Tennessee, Knoxville; Arizona State University; Purdue University; and Missouri State University.

Meanwhile, Grubhub (Chicago, IL, USA; www.grubhub.com) is partnering with Kiwibot (Miami, FL, USA; www.kiwibot.com/technology), Starship, and Cartken (Oakland, CA, USA; www.cartken.com/) to deliver food on nearly a dozen campuses.

Outdoor Challenges

However, Wang concludes that machine vision and imaging technology vendors still have challenges to overcome before food delivery robots such as these become a mature use case.

“The technologies themselves are relatively robust at this stage already, especially for those indoor food delivery robots where the working environment is well controlled. However, for outdoor food delivery robots, IDTechEx believes that the technology is still evolving. This is primarily because the outdoor environments could change rapidly, and there are way more uncertainties for outdoor applications.”

Outdoor variables include changing levels of natural light, shadows (e.g., those cast by tree canopies), and other unpredictable conditions. “All these present challenges for the robustness of the machine vision technologies,” he says.
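A toy example shows why lighting changes are hard. The hypothetical sketch below compares two brightness thresholds on the same scene, before and after it is uniformly dimmed, as when a robot rolls into shade. One threshold is fixed; the other is set relative to each frame's mean brightness. It models only a global brightness change. Real tree-canopy shadows are patchy and local, which is part of what makes the outdoor case difficult.

```python
def fixed_threshold_mask(image, threshold=100):
    """Mark pixels darker than a fixed threshold as obstacle pixels."""
    return [[1 if px < threshold else 0 for px in row] for row in image]

def relative_threshold_mask(image, ratio=0.5):
    """Threshold at a fraction of the frame's mean brightness, so a global
    brightness change shifts the threshold along with the scene."""
    pixels = [px for row in image for px in row]
    threshold = ratio * sum(pixels) / len(pixels)
    return [[1 if px < threshold else 0 for px in row] for row in image]

# Hypothetical 3x3 frames: a dark object on bright pavement, then the same scene in shade.
sunny = [[200, 200, 200], [200, 60, 200], [200, 200, 200]]
shaded = [[px // 3 for px in row] for row in sunny]  # about a third as bright

for name, frame in (("sunny", sunny), ("shaded", shaded)):
    print(name, "fixed:", fixed_threshold_mask(frame))
    print(name, "relative:", relative_threshold_mask(frame))
```

In the shaded frame, the fixed threshold flags every pixel as an obstacle, while the relative threshold still isolates the object.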

About the Author

Linda Wilson

Editor in Chief

Linda Wilson joined the team at Vision Systems Design in 2022. She has more than 25 years of experience in B2B publishing and has written for numerous publications, including Modern Healthcare, InformationWeek, Computerworld, Health Data Management, and many others. Before joining VSD, she was the senior editor at Medical Laboratory Observer, a sister publication to VSD.