October 2016 Snapshots: 360° video for VR, Amazon drone, major autonomous vehicles partnership

3D, 360° video capture for virtual reality applications

Facebook (Menlo Park, CA, USA; www.facebook.com) has developed an open-source, 3D 360° video capture system based on 17 industrial cameras that includes hardware specifications and software code for camera control and post processing, which is available on GitHub (San Francisco, CA, USA; www.github.com).

Called the Facebook Surround 360, the system lets developers build on the design and code, while content creators can use it in their productions. It uses 17 Grasshopper3 GS3-U3-41C6C-C cameras from Point Grey (Richmond, BC, Canada; www.ptgrey.com). These are color USB3 Vision cameras that feature the 4.1 MPixel CMV4000 CMOS image sensor from CMOSIS (Antwerp, Belgium; www.cmosis.com). This sensor is a 1" global shutter sensor with a 5.5 μm pixel size that is capable of achieving a frame rate of 90 fps.
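To give a feel for the data volume such a rig can produce, the following is a rough back-of-the-envelope sketch, not a description of how the Surround 360 is actually configured. The camera count, pixel count, and maximum frame rate come from the figures above; the 8 bits per pixel raw output and the assumption that all cameras stream simultaneously at the same rate are illustrative assumptions.

```python
# Rough estimate of aggregate raw pixel throughput for a multi-camera rig.
# Figures taken from the article: 17 cameras, 4.1 MPixel sensor, up to 90 fps.
# ASSUMPTION (not from the article): raw output at 1 byte (8 bits) per pixel,
# and every camera streaming at the same frame rate.

NUM_CAMERAS = 17
PIXELS_PER_FRAME = 4.1e6
ASSUMED_BYTES_PER_PIXEL = 1  # hypothetical 8-bit raw format


def aggregate_bytes_per_second(fps: float,
                               num_cameras: int = NUM_CAMERAS,
                               pixels_per_frame: float = PIXELS_PER_FRAME,
                               bytes_per_pixel: float = ASSUMED_BYTES_PER_PIXEL) -> float:
    """Return the combined raw data rate in bytes per second."""
    return num_cameras * pixels_per_frame * fps * bytes_per_pixel


if __name__ == "__main__":
    for fps in (30, 60, 90):
        rate_gb = aggregate_bytes_per_second(fps) / 1e9
        print(f"{fps} fps: about {rate_gb:.2f} GB/s across {NUM_CAMERAS} cameras")
```

Under these assumptions, running every camera at the sensor's 90 fps maximum would mean roughly 6.3 GB/s of raw data, which illustrates why capture hardware and storage are a significant part of any such design.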

Fourteen of these cameras are arranged around a circle with a 50% overlapping field of view, each using a wide-angle, 7 mm f/2.4 lens. One camera is mounted on top with a 2.7 mm focal length fisheye lens, while the last two cameras are mounted on the bottom, each with a 2.7 mm focal length fisheye lens.
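The ring geometry can be illustrated with a small calculation. This is a minimal sketch under simplifying assumptions: the cameras are evenly spaced, the scene is far away, lens distortion is ignored, and "50% overlap" is taken to mean that half of each camera's horizontal field of view is shared with one adjacent neighbor. The function names are hypothetical and not part of Facebook's published code.

```python
# Idealized ring-of-cameras geometry.
# ASSUMPTIONS (not from the article): even angular spacing, distant scene,
# no lens distortion, and overlap defined as the fraction of one camera's
# horizontal field of view that is also covered by one adjacent camera.


def ring_spacing_deg(num_cameras: int) -> float:
    """Angular spacing between adjacent cameras on a full 360-degree ring."""
    if num_cameras <= 0:
        raise ValueError("num_cameras must be positive")
    return 360.0 / num_cameras


def fov_for_overlap_deg(num_cameras: int, overlap_fraction: float) -> float:
    """Horizontal field of view each camera needs for the given overlap.

    With spacing s and field of view f, the shared fraction with one
    neighbor is (f - s) / f, so f = s / (1 - overlap_fraction).
    """
    if not 0.0 <= overlap_fraction < 1.0:
        raise ValueError("overlap_fraction must be in [0, 1)")
    return ring_spacing_deg(num_cameras) / (1.0 - overlap_fraction)


if __name__ == "__main__":
    spacing = ring_spacing_deg(14)
    fov = fov_for_overlap_deg(14, 0.5)
    print(f"spacing: {spacing:.1f} degrees, required FOV: {fov:.1f} degrees")
```

In this idealized model, 14 cameras sit about 25.7° apart, and a 50% overlap corresponds to a usable horizontal field of view of about 51.4° per camera, so that every direction around the ring is seen by two cameras. That is the condition stereo (3D) reconstruction needs.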

Facebook has also developed automated stitching software that reduces post-production retouching effort and time. Following a video shoot, users can view the output as a 3D, 360° video with no intervening hand processing or fixing using a Samsung (Suwon, South Korea; www.samsung.com) Gear VR headset. Facebook estimates that if a potential user has all the parts ready for build and an assembly manual on hand, the Facebook Surround 360 should take approximately four hours to assemble, and an additional few hours to set up the operating system.

Partnership to explore drone delivery safety

A partnership between Amazon (Seattle, WA, USA; www.amazon.com) and the UK government is set to explore the steps needed to make the Amazon Prime delivery drone an operational reality. By enabling Amazon to trial new methods of testing its delivery systems, a team led by the UK Civil Aviation Authority (CAA) has provided Amazon with permission to explore three key innovations:

• Beyond line-of-sight operation

• Obstacle avoidance systems

• Flights where one person operates multiple drones

"The UK is a leader in enabling drone innovation – we've been investing in Prime Air research and development here for quite some time," said Paul Misener, Amazon's Vice President of Global Innovation Policy and Communications. "This announcement strengthens our partnership with the UK and brings Amazon closer to our goal of using drones to safely deliver parcels."

Amazon's latest drone model is designed to rise vertically to an altitude of up to 400 ft, then transition into a horizontal flight path where it will travel at speeds of approximately 55 mph for distances of up to 15 miles.
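As a quick sanity check on these figures, the sketch below estimates cruise time for a given distance. It assumes constant cruise speed and ignores the vertical climb, descent, wind, and loading time, none of which Amazon has published details on; the function name is illustrative only.

```python
# Simple cruise-time estimate from the speed and range quoted in the article.
# ASSUMPTION (not from the article): constant cruise speed for the whole leg,
# with climb, descent, wind, and handling time ignored.


def cruise_time_minutes(distance_miles: float, speed_mph: float) -> float:
    """Return the time in minutes to cover distance_miles at speed_mph."""
    if speed_mph <= 0:
        raise ValueError("speed_mph must be positive")
    return distance_miles / speed_mph * 60.0


if __name__ == "__main__":
    minutes = cruise_time_minutes(15, 55)
    print(f"15 miles at 55 mph: about {minutes:.1f} minutes of cruise flight")
```

Under these assumptions, covering the full 15 miles at 55 mph takes a little over 16 minutes of horizontal flight.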

To navigate safely in the airspace, Prime Air is equipped with "sense and avoid" technologies, which implies some sort of vision system, though Amazon has not released any details on its components.

This research will help Amazon and the Government understand how drones can be used safely and reliably in the logistics industry, and will help identify what operating rules and safety regulations will be needed to help push the drone industry forward, suggested the press release.

"Using small drones for the delivery of parcels will improve customer experience, create new jobs in a rapidly growing industry, and pioneer new sustainable delivery methods to meet future demand," said Misener. "The UK is charting a path forward for drone technology that will benefit consumers, industry and society."

The CAA, the UK's aviation safety regulator, will be fully involved in the project in order to explore the potential for the safe use of drones beyond line of sight. Results of these trials will help inform the development of future policy and regulation in this area.

"We want to enable the innovation that arises from the development of drone technology by safely integrating drones into the overall aviation system," said Tim Johnson, CAA Policy Director. "These tests by Amazon will help inform our policy and future approach." As for when the Amazon Prime delivery drone will become a standard customer option, the company notes that it may take time, but it is optimistic about the long term.

"We will deploy when and where we have the regulatory support needed to safely realize our vision," Amazon notes on its Prime Air page. "We're excited about this technology and one day using it to deliver packages to customers around the world in 30 minutes or less."

BMW, Intel, and Mobileye partner on autonomous vehicles

BMW Group (Munich, Germany; www.bmwgroup.com), Intel (Santa Clara, CA, USA; www.intel.com) and Mobileye (Jerusalem, Israel; www.mobileye.com) have teamed up to bring autonomous vehicles into series production by 2021.

The companies, which represent leaders in automotive, technology, computer vision, and machine learning, share the opinion that automated driving technologies will make travel safer and easier. BMW's iNEXT model will be the foundation for its autonomous driving strategy and will set the basis for fleets of fully autonomous vehicles, not just on highways but also in urban environments for the purpose of automated ridesharing solutions.

The goal of the collaboration, according to a press release, is to develop future-proofed solutions that will enable drivers to reach the "so called 'eyes off' (level 3) and ultimately the 'mind off' (level 4) level transforming the driver's in-car time into leisure or work time." This level of autonomy would enable the vehicle, on a technical level, to achieve the final stage of traveling "driver off" (level 5) without a human driver inside, which establishes the opportunity for self-driving fleets by 2021.

While BMW lends its automotive expertise to the collaboration, Intel is providing computing power ranging from its Intel Atom to Intel Xeon processors, which deliver up to a total of 100 teraflops of power-efficient performance without having to rewrite code. Mobileye lends its autonomous vehicles expertise, as the company develops software algorithms, system-on-chips, and customer applications based on processing visual information for driver assistance systems.

Mobileye Co-Founder, Chairman and CTO Professor Amnon Shashua commented on the project: "Mobileye is proud to contribute our expertise in sensing, localization and driver policy to enable fully autonomous driving in this cooperation. The processing of sensing, like our capabilities to understand the driving scene through a single camera already, will be deployed on Mobileye's latest system-on-chip, the EyeQ5, and the collaborative development of fusion algorithms will be deployed on Intel computing platforms. In addition, Mobileye Road Experience Management (REM) technology will provide real-time precise localization and model the driving scene to essentially support fully autonomous driving."

The companies have agreed upon a set of deliverables and milestones to deliver fully autonomous cars based on a common reference architecture. In the near future, the partners will demonstrate an autonomous test drive with a highly automated driving (HAD) prototype. In 2017 the platform will extend to fleets with extended autonomous test drives, according to Intel.

All three companies held a press event on July 1 to discuss their collaboration, which will be made available to multiple car vendors as well as other industries that could benefit from autonomous machines and deep machine learning.

From left: Klaus Fröhlich of BMW; Ziv Aviram of Mobileye; Amnon Shashua of Mobileye; Harald Krüger of BMW; Brian Krzanich of Intel; and Doug Davis of Intel.

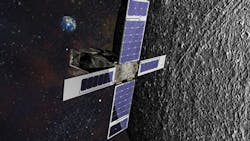

Infrared camera will launch to the moon

Lockheed Martin (Bethesda, MD, USA; www.lockheedmartin.com) has announced that it has signed a contract with NASA (Washington, DC, USA; www.nasa.gov) to develop and deploy SkyFire, a CubeSat with new infrared camera technology, for a 2018 moon launch with Orion's Exploration Mission-1 (EM-1).

Once deployed, Lockheed Martin's 6U-sized small satellite, SkyFire, will perform a lunar flyby, taking images of the lunar surface and its environment, performing observations to help address strategic knowledge gaps (SKG) related to surface characterization, remote sensing, and site selection observations. Data collected on thermal environments, according to NASA, will help scientists learn about the composition, structure, interaction with the space environment, and interaction with solar particles and the lunar regolith, all contributing to risk reduction for potential future manned missions.

Following the flyby and data collection at the moon, SkyFire will address Mars SKGs related to transit and long-duration exploration missions, by conducting additional CubeSat-based technology demonstrations to learn more about future crewed and robotic deep space missions.

"SkyFire's lunar flyby will pioneer brand new infrared technology, enabling scientists to fill strategic gaps in lunar knowledge that have implications for future human space exploration," said John Ringelberg, Lockheed Martin's SkyFire project manager. "Partnering with NASA for another element of the Orion and Space Launch System EM-1 flight is very exciting."

The infrared camera will capture high-quality images with a lighter, simpler unit.

"The CubeSat will look for specific lunar characteristics like solar illumination areas," said James Russell, Lockheed Martin SkyFire principal investigator. "We'll be able to see new things with sensors that are less costly to make and send to space."

If successful, the infrared system on SkyFire could eventually be used for studies of a planet's resources, including analyzing soil conditions, determining ideal landing sites, and discovering a planet's most livable areas.

"For a small CubeSat, SkyFire has a chance to make a big impact on future planetary space missions," Russell explained. "With less mass and better instruments, we can get closer, explore deeper and learn more about the far reaches of our solar system."