January 2017 snapshots: Autonomous vehicles, facial recognition software, bio-inspired vision

Autonomous underwater vehicle successfully deployed to destroy harmful starfish

A vision-guided underwater robot designed by the Queensland University of Technology (QUT; Brisbane, Queensland, Australia; www.qut.edu.au) has successfully completed field trials. Its goal: to autonomously seek out and eliminate the Great Barrier Reef's crown-of-thorns starfish (COTS), which are responsible for an estimated 40% of the reef's total decline in coral cover. During the trials, COTSbot, as the robot is called, navigated difficult reefs, detected COTS, and delivered a fatal dose of bile salts.

"It's always great to see a robot you built, doing the job it's intended for," said Dr Matthew Dunbabin, from QUT's Institute for Future Environments and Science and Engineering Faculty. "We couldn't be more ecstatic about how COTSbot has performed. The next generation will be even better and hopefully we can roll it out across the reef relatively quickly."

The robot spent much of the trials tethered to a WiFi-enabled boat, sending data back to the research team, allowing them to see through the robot's cameras, verify every COTS it identified, and approve injections before they happened. Once it was determined that the robot could work well under human supervision, the team monitored its autonomous efforts.

"We're very happy with COTSbot's computer vision and machine learning system," said QUT's Dr Dayoub, also a Research Fellow with the Australian Centre for Robotic Vision. "The robot's detection rate is outstanding. COTS blend in very well with the hard corals they feed on. The robot must detect them in widely varying conditions as they hide among the coral. When it comes to accurate detection, the goal is to avoid any false positives, that is, the robot mistaking another creature for a COTS."

COTSbot's vision system consists of a downward-looking stereo camera pair and a single forward-looking camera. The down-facing cameras are used to detect the starfish, while the front-facing camera is used for general navigation. The cameras are CM3-U3-13S2C-CS Chameleon 3 color cameras from Point Grey (now FLIR Integrated Imaging Solutions Inc; Richmond, BC, Canada; www.ptgrey.com): enclosed USB 3.0 cameras measuring 44 mm x 35 mm x 19.5 mm that use ICX445 CCD image sensors from Sony (Tokyo, Japan; www.sony.com). The ICX445 is a 1/3" 1.3 MPixel CCD sensor with a 3.75 μm pixel size that can achieve frame rates of 30 fps.
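Because the down-facing cameras form a stereo pair, the range to a detected starfish can in principle be recovered from the disparity between the two images. The minimal sketch below applies the standard rectified-stereo relation Z = f * B / d, using the 3.75 μm pixel size quoted above. The 6 mm focal length and 10 cm baseline are hypothetical values, not COTSbot's, and refraction at the underwater housing is ignored.

```python
# Minimal depth-from-disparity sketch for a rectified stereo pair (pinhole model).
# ASSUMPTIONS (not from the article): 6 mm lens, 0.10 m baseline,
# and no correction for refraction at the underwater housing.

PIXEL_PITCH_M = 3.75e-6   # ICX445 pixel size quoted in the article
FOCAL_LENGTH_M = 6e-3     # hypothetical lens focal length
BASELINE_M = 0.10         # hypothetical distance between the two cameras


def focal_length_px(focal_length_m: float = FOCAL_LENGTH_M,
                    pixel_pitch_m: float = PIXEL_PITCH_M) -> float:
    """Express the focal length in pixels, as the stereo relation expects."""
    return focal_length_m / pixel_pitch_m


def depth_from_disparity(disparity_px: float,
                         baseline_m: float = BASELINE_M) -> float:
    """Z = f * B / d; disparity is the horizontal pixel shift between the views."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px() * baseline_m / disparity_px


if __name__ == "__main__":
    # With these assumptions f is roughly 1600 px, so depth halves as disparity doubles.
    for d in (80, 160, 320):
        print(f"disparity {d:>3} px -> depth {depth_from_disparity(d):.2f} m")
```

The inverse relation between depth and disparity also means depth resolution degrades with range, which suits a robot that works close to the reef surface.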

All image processing is done on board the robot, on a low-power (less than 20 W) GPU. According to Dunbabin, the software is built around the Robot Operating System (ROS) and is optimized to exploit the GPU.

"Using this system we can process the images for COTS at greater than 7 Hz, which is sufficient for real-time detection of COTS and feeding back the detected positions for controlling the underwater robot and the manipulator," he said.
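As a rough check on why a rate above 7 Hz can count as real time, the arithmetic below compares the detector's per-image time budget with the 30 fps camera rate and estimates how much consecutive processed images overlap. The cruising speed and along-track camera footprint in the demo are hypothetical; the article gives neither.

```python
# Timing sketch for a ~7 Hz detector fed by a 30 fps camera.
# ASSUMPTION: the speed and footprint values in the demo are hypothetical.

CAMERA_FPS = 30.0   # Chameleon 3 frame rate quoted in the article
DETECTOR_HZ = 7.0   # lower bound on the processing rate quoted by Dunbabin


def detector_period_ms(rate_hz: float = DETECTOR_HZ) -> float:
    """Time budget per processed image, in milliseconds."""
    return 1000.0 / rate_hz


def frames_per_detection(camera_fps: float = CAMERA_FPS,
                         rate_hz: float = DETECTOR_HZ) -> float:
    """Camera frames that arrive while one image is being processed."""
    return camera_fps / rate_hz


def footprint_overlap(speed_m_s: float, footprint_m: float,
                      rate_hz: float = DETECTOR_HZ) -> float:
    """Fraction of the along-track footprint shared by consecutive processed images."""
    travel_m = speed_m_s / rate_hz          # distance moved between detections
    return max(0.0, 1.0 - travel_m / footprint_m)


if __name__ == "__main__":
    print(f"budget per image: {detector_period_ms():.0f} ms")
    print(f"camera frames per detection: {frames_per_detection():.1f}")
    # Hypothetical: 0.5 m/s cruise speed, 1.0 m of seabed visible along track.
    print(f"overlap between processed images: {footprint_overlap(0.5, 1.0):.0%}")
```

Under these assumptions the detector skips most camera frames, yet each patch of reef still appears in many processed images, which is what matters for not missing a starfish.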

QUT roboticists taught the robot to recognize COTS among coral by training its system on thousands of still images of the reef and on videos taken by COTS-eradicating divers. Dayoub, who designed the detection software, said the robot will continue to learn from its experiences in the field.

"Its computer system is backed by some serious computational power so COTSbot can think for itself in the water," said Dayoub. "If the robot is unsure that something is actually a COTS, it takes a photo of the object to be later verified by a human, and that human feedback is incorporated into the robot's memory bank."
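The behavior Dayoub describes, acting on confident detections and saving uncertain ones for a human, amounts to confidence-based triage. The sketch below is one way to express it; the two thresholds, the `Detection` and `Triage` classes, and the `label_reviewed` helper are all hypothetical and are not taken from COTSbot's software.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical thresholds; the article does not state COTSbot's values.
INJECT_THRESHOLD = 0.95   # act autonomously only when very confident
REVIEW_THRESHOLD = 0.50   # between the two thresholds: photograph for a human


@dataclass
class Detection:
    image_id: str
    score: float  # detector confidence that the object is a COTS, 0..1


@dataclass
class Triage:
    inject: List[Detection] = field(default_factory=list)
    review: List[Detection] = field(default_factory=list)
    ignore: List[Detection] = field(default_factory=list)

    def route(self, det: Detection) -> str:
        """Send a detection to one of three queues based on its score."""
        if det.score >= INJECT_THRESHOLD:
            self.inject.append(det)
            return "inject"
        if det.score >= REVIEW_THRESHOLD:
            self.review.append(det)
            return "review"
        self.ignore.append(det)
        return "ignore"


def label_reviewed(triage: Triage,
                   human_labels: Dict[str, bool]) -> List[Tuple[str, bool]]:
    """Turn human verdicts on saved photos into new training examples.

    Photos the human has not labeled yet stay in the review queue.
    """
    examples = [(d.image_id, human_labels[d.image_id])
                for d in triage.review if d.image_id in human_labels]
    triage.review = [d for d in triage.review if d.image_id not in human_labels]
    return examples


if __name__ == "__main__":
    t = Triage()
    for det in (Detection("img1", 0.98), Detection("img2", 0.70), Detection("img3", 0.10)):
        print(det.image_id, t.route(det))
    print(label_reviewed(t, {"img2": True}))
```

Setting the injection threshold high trades some missed starfish for fewer false positives, the priority Dayoub names; the uncertain middle band is what feeds human corrections back into training.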

COTS are among the most prolific breeders in the ocean, mating in large groups called aggregations. In small numbers, the starfish consume fast-growing hard corals, allowing slower-growing soft corals to thrive, but during outbreaks, they eat everything in their path.

COTSbot is designed to support existing human efforts to control COTS: it clears most of the pests from an area and leaves the hard-to-reach starfish for the specialist divers who follow.

Face and iris recognition software targets biometric security on mobile platforms

A suite of mobile face and iris recognition software for biometric security and authentication has been introduced by FotoNation (San Jose, CA, USA; www.fotonation.com).

FotoNation based the solutions on its deep learning neural network and MIRLIN iris recognition technologies, with the aim of delivering authentication in outdoor conditions. The company's Facial Recognition product pairs a smartphone's front-facing camera with deep learning and computer vision algorithms to identify subjects in various lighting conditions and poses at a false acceptance rate (FAR) of 1 in 10,000, according to the company.
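A FAR of 1 in 10,000 means that, at the chosen decision threshold, about one impostor comparison in 10,000 is wrongly accepted. The sketch below shows how the figure is estimated from a set of impostor similarity scores and what the two quoted FARs imply at scale. The scores and attempt counts are made up for illustration and do not come from FotoNation.

```python
from typing import Sequence


def false_acceptance_rate(impostor_scores: Sequence[float],
                          threshold: float) -> float:
    """Fraction of impostor comparisons that score at or above the threshold."""
    if not impostor_scores:
        raise ValueError("need at least one impostor score")
    accepted = sum(1 for s in impostor_scores if s >= threshold)
    return accepted / len(impostor_scores)


def expected_false_accepts(far: float, attempts: int) -> float:
    """Expected number of impostors wrongly accepted over many attempts."""
    return far * attempts


if __name__ == "__main__":
    # Made-up impostor similarity scores, for illustration only.
    scores = [0.12, 0.31, 0.08, 0.55, 0.91, 0.27, 0.40, 0.19]
    for t in (0.5, 0.9, 0.95):
        print(f"threshold {t}: FAR = {false_acceptance_rate(scores, t):.3f}")

    # Scale of the quoted figures over one million impostor attempts.
    print("face:", expected_false_accepts(1 / 10_000, 1_000_000))
    print("iris:", expected_false_accepts(1 / 10_000_000, 1_000_000))
```

Raising the threshold lowers FAR but rejects more genuine users (a higher false rejection rate), so a FAR figure is only meaningful alongside the operating point at which it was measured.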

The Iris Recognition product uses patented MIRLIN technology to identify a subject, even one wearing glasses. Iris Recognition, which has a FAR of 1 in 10,000,000, uses biometric feature extraction and matching algorithms. De-noising and image stability techniques are used for iris detection and capture, a small template size enables logic-based matching, and proprietary liveness and spoof detection functions are also included.
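Iris systems commonly store the template as a compact binary code, which is one reason matching can reduce to cheap bitwise logic. The sketch below computes a masked fractional Hamming distance with XOR, the generic technique associated with John Daugman's iris codes. It is not MIRLIN's proprietary algorithm, and the 8-bit example codes are hypothetical.

```python
def popcount(x: int) -> int:
    """Number of set bits (works on Python versions without int.bit_count)."""
    return bin(x).count("1")


def fractional_hamming_distance(code_a: int, code_b: int,
                                mask_a: int, mask_b: int) -> float:
    """Share of disagreeing bits among positions valid in both codes.

    Mask bits set to 1 mark usable positions (for example, not covered by
    eyelids, lashes, or reflections from glasses).
    """
    valid = mask_a & mask_b
    n_valid = popcount(valid)
    if n_valid == 0:
        raise ValueError("codes share no valid bits")
    return popcount((code_a ^ code_b) & valid) / n_valid


if __name__ == "__main__":
    a, b = 0b10110010, 0b10010011       # hypothetical 8-bit iris codes
    full = 0b11111111                   # every bit usable
    print(fractional_hamming_distance(a, b, full, full))
    print(fractional_hamming_distance(a, b, full, 0b11111110))  # last bit occluded in b
```

Real iris codes run to thousands of bits, and matchers typically also compare several circularly shifted versions of one code to absorb head tilt, keeping the lowest distance. A low distance indicates the same eye.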

FotoNation works with camera module makers to ensure that the right lens and filter are used. Although FotoNation's final product is software, what it offers can be considered a reference design or prototype that enables the software.

Iris Recognition technology is currently being used in commercial buildings, European airports, and military bases around the world, according to the company. Development kits for the company's biometrics solutions are also available.

Autonomous vehicles for factory floor being developed by Colorado company

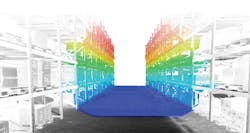

Canvas Technology (Boulder, CO, USA; http://canvas.technology), a team of engineers with robotics experience from Google (Mountain View, CA, USA; www.google.com), Toyota (Toyota, Japan; www.toyota.com) and Kiva Systems (now Amazon Robotics; North Reading, MA, USA; www.amazonrobotics.com), is developing technology to put autonomous vehicles into factories and warehouses for material handling.

"Robotics has traditionally been applied to structured and predictable tasks on the factory floor. The intelligence possible with computer vision enables us to add value in highly dynamic and unstructured scenarios," Jonathan McQueen, CEO of Canvas Technology, explained to Vision Systems Design.

The company has yet to reveal the product publicly, but is already demonstrating its capabilities indoors, according to Robotics Business Review (RBR; Framingham, MA, USA; www.roboticsbusinessreview.com).

"We started inside factories and warehouses because there is significant demand for a better system, and it's an easier problem to solve first," McQueen continued. "The experience we gain indoors will pave the way for a successful launch of outdoor transportation, which our technology also enables."

Much like autonomous vehicles on the street, these vehicles require vision systems that provide navigation and obstacle avoidance. Canvas has therefore developed its own proprietary camera solution that, when paired with computer vision algorithms, enables the vehicles to move autonomously in a safe, accurate, and flexible manner.

"We've developed state-of-the-art cameras to drive vision-based autonomy because it's the best solution at the moment," McQueen said. "But we can incorporate the best sensor technologies over time. If dense 3D lidars come down in cost, size, and power consumption, we can incorporate them."

According to RBR, Canvas also uses SLAM (simultaneous localization and mapping) techniques, with cameras that map continuously so that the vehicles constantly watch their environment and act accordingly.
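SLAM couples two problems: estimating where the vehicle is and building a map of its surroundings. The sketch below shows only the mapping half, with the vehicle's pose assumed known, using a standard log-odds occupancy grid: cells along a sensor ray become more likely free, and the cell where the ray ends becomes more likely occupied. The increments and grid size are hypothetical, and this is a textbook technique, not Canvas Technology's proprietary system.

```python
import math
from typing import List, Tuple

# Hypothetical log-odds increments; real systems tune these to their sensors.
L_FREE = -0.4   # evidence that a cell the ray passed through is empty
L_OCC = 0.85    # evidence that the cell where the ray ended is an obstacle

Cell = Tuple[int, int]


def bresenham(x0: int, y0: int, x1: int, y1: int) -> List[Cell]:
    """Grid cells on the straight line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def integrate_ray(grid: List[List[float]], robot: Cell, hit: Cell) -> None:
    """Update log-odds for one range measurement taken from a known pose."""
    ray = bresenham(*robot, *hit)
    for x, y in ray[:-1]:          # free space between robot and obstacle
        grid[y][x] += L_FREE
    hx, hy = hit
    grid[hy][hx] += L_OCC          # obstacle at the end of the ray


def occupancy_probability(log_odds: float) -> float:
    """Convert a cell's log-odds back to a probability of being occupied."""
    return 1.0 / (1.0 + math.exp(-log_odds))


if __name__ == "__main__":
    grid = [[0.0] * 6 for _ in range(6)]   # log-odds 0 means p = 0.5 (unknown)
    for _ in range(3):                      # the same obstacle observed three times
        integrate_ray(grid, robot=(0, 0), hit=(4, 2))
    print(round(occupancy_probability(grid[2][4]), 3))   # obstacle cell
    print(round(occupancy_probability(grid[1][1]), 3))   # cell the ray crossed
```

Because evidence accumulates additively in log-odds form, repeated observations sharpen the map, and a moving obstacle's old cells drift back toward "free" as new rays pass through them.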

The company's technology is reportedly operating in pilots and will launch commercially soon. Many of today's headlines about autonomous vehicles concern cars on the road or drones in the sky, but the technology can be applied in an array of settings, the factory floor among them.

Bio-inspired vision company receives $15M funding led by Intel

A developer of biologically inspired vision sensors and computer vision solutions has raised $15 million in Series B financing led by Intel Capital (Santa Clara, CA, USA; www.intelcapital.com), along with iBionext (Paris, France; http://ibionext.com), Robert Bosch Venture Capital GmbH (Stuttgart, Germany; www.rbvc.com), 360 Capital (Paris, France; http://360capitalpartners.com), CEAi (Paris, France; www.cea-investissement.com) and Renault Group (Boulogne-Billancourt, France; www.group.renault.com).

The start-up Chronocam (Paris, France; www.chronocam.com) was founded by an international group of scientists, entrepreneurs, and venture capitalists whose exclusive goal is to develop and commercialize neuromorphic event-driven vision technology, described as a brain-inspired way of acquiring and processing visual information for computer vision.

Chronocam aims to become the leading computer vision solution provider for autonomous navigation through vision sensing and processing inspired by the human eye. The company develops CMOS image sensors for two 3D cameras: the CCAM 3D and a smaller, embeddable camera, the CCAM(eye)oT. Additionally, Chronocam develops vision sensors based on the same technology.

Each pixel of a CCAM sensor array controls its own sampling based on the input it receives from the scene. Chronocam notes in its technical paper that an ideal image sensor would sample fast-changing parts of a scene at high rates and slow-changing parts at low rates, simultaneously, with the sampling rate going to zero if nothing changes. A single sampling rate (frame rate) common to all pixels cannot achieve this; instead, one needs as many sampling rates as there are pixels in the sensor, and each pixel's sampling rate must adapt to the part of the scene it sees.

Accomplishing this requires abandoning a common frame rate and putting each pixel in charge of adapting its own sampling rate to the visual input it receives. Each pixel therefore defines the timing of its own sampling points by reacting to changes in the amount of incident light. As a consequence, the entire sampling process is no longer governed by a fixed (artificial) timing source, but by the signal to be sampled itself, or more precisely by the variations over time of the signal in the amplitude domain. The output generated by such a camera is no longer a sequence of images, but a time-continuous stream of individual pixel data, generated and transmitted conditionally, based on changes happening in the scene.
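The per-pixel behavior described above is commonly modeled as follows: each pixel remembers the log intensity at its last event and emits a new event, tagged with a polarity, whenever the current log intensity moves away from that reference by a contrast threshold. The sketch below simulates that generic model from a sequence of ordinary frames. It is an approximation (a real sensor reacts asynchronously in continuous time, whereas here events inherit frame timestamps), the threshold is hypothetical, and it does not describe Chronocam's actual circuitry.

```python
import math
from typing import List, Sequence, Tuple

Event = Tuple[float, int, int, int]   # (timestamp_s, x, y, polarity +1/-1)

CONTRAST_THRESHOLD = 0.15   # hypothetical log-intensity step per event
EPS = 1e-6                  # avoids log(0) for completely dark pixels


def generate_events(frames: Sequence[Sequence[Sequence[float]]],
                    timestamps: Sequence[float],
                    threshold: float = CONTRAST_THRESHOLD) -> List[Event]:
    """Simulate change-driven pixels from a sequence of intensity frames.

    Each pixel keeps its own reference level (log intensity at its last
    event) and fires only when the scene it sees has changed enough, so
    static pixels produce no output at all.
    """
    ref = [[math.log(v + EPS) for v in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                level = math.log(value + EPS)
                # A large change crosses several thresholds, giving several events.
                while abs(level - ref[y][x]) >= threshold:
                    polarity = 1 if level > ref[y][x] else -1
                    ref[y][x] += polarity * threshold
                    events.append((t, x, y, polarity))
    return events


if __name__ == "__main__":
    # Hypothetical 1 x 3 scene: left pixel static, middle brightens, right darkens.
    frames = [
        [[1.0, 1.0, 1.0]],
        [[1.0, 1.5, 0.8]],
        [[1.0, 1.5, 0.8]],   # nothing changes, so no new events
    ]
    for ev in generate_events(frames, timestamps=[0.0, 0.01, 0.02]):
        print(ev)
```

In this run the static pixel and the unchanged final frame contribute nothing, which mirrors the paper's point that the sampling rate falls to zero where the scene does not change, while a stronger change yields proportionally more events.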

"The advent of bio-inspired event-driven vision has started introducing a paradigm shift in machine vision based on the asynchronous acquisition and processing of visual information beyond Nyquist," states the conclusion of the company's technical paper.

With the investment, Chronocam will continue to build a team to accelerate product development and commercialize its products, as well as expand into key markets, including the US and Asia. In addition to autonomous navigation, the company says the technology can be deployed into biomedical, scientific, industrial, and automotive imaging applications.

"Conventional computer vision approaches are not well-suited to the requirements of a new generation of vision-enabled systems," said Luca Verre, CEO and co-founder of Chronocam. "For example, autonomous vehicles require faster sensing systems which can operate in a wider variety of ambient conditions. In the IoT segment, power budgets, bandwidth requirements and integration within sensor networks make today's vision technologies impractical and ineffective."

He added, "Chronocam's unique bio-inspired technology introduces a new paradigm in capturing and processing visual data, and addresses the most pressing market challenges head-on."