Machine vision system fuses robotics and object recognition in logistics application

A vision-guided robot took over picking and packing duties for a manufacturer and distributor of plastic products for medical use, allowing the company to reduce a team of 44 operators to 4 while maintaining the same level of shipping output.

Plastimation LLC (Torrington, CT, USA; www.plastimation.com), an Epson (Los Alamitos, CA, USA; www.epson.com) integrator that specializes in custom factory automation, was commissioned by the high-end medical plastics processing and molding company to build an application that could carry out pick-and-place operations for a product line of cylindrical plastic tubes built for medical testing in centrifuges.

While all the tubes, constructed from PET clear or polypropylene translucent plastic, have a standard length of 2.5 inches, their diameters vary, and some have open ends while others have a nozzle, making for a total of 15 different varieties.

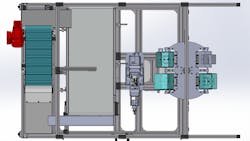

The largest component of the solution (Figure 1), an Epson C4 six-axis robot with 180° of motion, sits in the middle of a 6-foot 4-inch rectangular work cell set on casters for ease of repositioning. Steve Sicard, systems integrator at Plastimation, says the C4 model best fits the application owing to its 600-mm reach and its speed. Hard guarding, a polycarbonate wall about 7 feet from ground level and mounted on the sides of the work cell, surrounds the robot.

A backlit horizontal flat-belt conveyor from Plastimation’s conveyor partner mk North America Inc. (Bloomfield, CT, USA; www.mknorthamerica.com), built with translucent belt material, stands on one side of the robot. White LED strips sit beneath a 400-mm square section of the belt where the robot makes its picks.

A Basler (Ahrensburg, Germany; www.baslerweb.com) acA1600-60gm monochrome camera, which features a 2-MPixel CMOS image sensor with 4.5-µm pixel size and is fitted with a 25-mm Epson lens, hangs directly above the illuminated portion of the conveyor on a fixed mount attached to an aluminum frame.
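The camera and lens figures allow a rough estimate of image scale. The sketch below is back-of-the-envelope arithmetic, not a description of the installed setup. It assumes the short side of the image covers the 400-mm lit square, and it takes the 1600 x 1200 pixel resolution from Basler's published specification for this camera model rather than from the article. The function names are hypothetical, and the working-distance figure uses a simple similar-triangles approximation.

```python
# Rough optics arithmetic under stated assumptions (not measured values).
PIXEL_SIZE_MM = 0.0045      # 4.5-µm pixel size, from the article
FOCAL_LENGTH_MM = 25.0      # 25-mm lens, from the article
SHORT_SIDE_PIXELS = 1200    # assumption: 1600 x 1200 sensor per Basler's spec
FOV_SHORT_SIDE_MM = 400.0   # assumption: short side of image spans the lit square


def mm_per_pixel(fov_mm, pixels):
    """Size on the belt that one pixel covers."""
    return fov_mm / pixels


def approx_working_distance_mm(focal_mm, fov_mm, sensor_mm):
    """Similar-triangles estimate: distance / FOV ~= focal length / sensor size."""
    return focal_mm * fov_mm / sensor_mm


sensor_short_side_mm = SHORT_SIDE_PIXELS * PIXEL_SIZE_MM
scale = mm_per_pixel(FOV_SHORT_SIDE_MM, SHORT_SIDE_PIXELS)
distance = approx_working_distance_mm(
    FOCAL_LENGTH_MM, FOV_SHORT_SIDE_MM, sensor_short_side_mm
)
print(f"{scale:.3f} mm per pixel, roughly {distance:.0f} mm above the belt")
```

Under these assumptions each pixel covers about a third of a millimeter on the belt. The actual mounting height and field of view are not stated in the article.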

The C4 robot is driven by the Epson RC-700A robot controller, which includes an Intel CPU, 1 GB of memory, and an Ethernet port and runs Microsoft Windows. Plastimation selected an optional second Ethernet port for the controller.

The Basler camera, controlled by Epson’s CV2-S vision controller, connects to the RC-700A via its Ethernet port. The RC-700A connects to an Allen-Bradley PLC from Rockwell Automation (Milwaukee, Wisconsin; www.ab.rockwellautomation.com) via Epson’s EtherNet/IP option card.

The 2D, rules-based application runs on Epson’s Vision Guidance software. Sicard trains the software by placing a sample of each tube variety under the camera. Using a geometric search tool in the software, he draws a bounding box around the tube. The Vision Guidance software then looks for a geometric pattern, in this case the edge of the plastic tube, within the bounding box.

For the software to successfully detect an edge of an object, the programmer has to set the appropriate pixel tolerance settings. “Because it’s grayscale, you can set the tolerance for how gray, how white, or how black a pixel needs to be to define it as a good or a bad one,” says Sicard, with good representing a pixel that defines part of an edge. An operator must dial in the pixel tolerance values after the first object scan.
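The idea of a grayscale pixel tolerance can be illustrated with a minimal sketch. This is not Epson's algorithm. The function names and the threshold values `dark_max` and `bright_min` are hypothetical, and the example treats an edge simply as a dark pixel sitting directly beside a bright one on a single scanline of 0-255 values.

```python
def classify_pixels(row, dark_max=60, bright_min=200):
    """Label each grayscale value (0-255) as 'dark', 'bright', or 'gray'."""
    labels = []
    for value in row:
        if value <= dark_max:
            labels.append("dark")
        elif value >= bright_min:
            labels.append("bright")
        else:
            labels.append("gray")
    return labels


def edge_positions(row, dark_max=60, bright_min=200):
    """Return indices where a dark pixel and a bright pixel are neighbors."""
    labels = classify_pixels(row, dark_max, bright_min)
    return [
        i
        for i in range(1, len(labels))
        if {labels[i - 1], labels[i]} == {"dark", "bright"}
    ]


# Hypothetical scanline: bright backlight, a dark tube wall, bright again.
scanline = [250, 248, 245, 30, 25, 28, 240, 251]
print(edge_positions(scanline))  # [3, 6]
```

Tightening or loosening the two thresholds changes which pixels count as part of an edge, which is the kind of adjustment Sicard describes.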

Sicard also needed to set a matching tolerance: how many pixels the software must find in the same arrangement as in a training image before it identifies a new image as a match. This tolerance determines the software’s ability to recognize the same part in varied orientations, i.e., varied positions relative to the camera.
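One way to picture a matching tolerance is as a minimum fraction of template edge pixels that must reappear in a candidate image. The sketch below makes that assumption explicit. It is not how the Vision Guidance software scores matches, and `match_score`, `is_match`, and the `min_score` default are hypothetical names and values. Edge pixels are represented as sets of (row, column) coordinates.

```python
def match_score(template_edges, candidate_edges):
    """Fraction of template edge pixels that also appear in the candidate."""
    if not template_edges:
        return 0.0
    return len(template_edges & candidate_edges) / len(template_edges)


def is_match(template_edges, candidate_edges, min_score=0.75):
    """Accept the candidate when enough template edge pixels are found."""
    return match_score(template_edges, candidate_edges) >= min_score


# Hypothetical edge pixels: the candidate agrees on four of five positions.
template = {(0, 0), (0, 1), (0, 2), (0, 3), (0, 4)}
candidate = {(0, 0), (0, 1), (0, 2), (0, 3), (1, 4)}
print(match_score(template, candidate), is_match(template, candidate))
```

A lower `min_score` accepts parts that differ more from the training image, at the cost of more false matches between similar tube varieties.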

Once he set these tolerance values while scanning the first tube, Sicard says it was easy for the software to learn the other 14 varieties simply by placing them under the camera one at a time and drawing the bounding box around the tube in the frame. “The trick—it really always comes down to lighting in vision,” Sicard continues.

By acting as a diffuser, the backlit conveyor belt material eliminates almost all shadows in the picking area and distributes the light evenly across the surface of the conveyor. This creates the stark contrast required for accurate edge detection. The vision software can then identify the type of tube to be picked, based on its saved templates, and determine the precise location of the tube relative to the camera so that the robot can make the pick successfully.

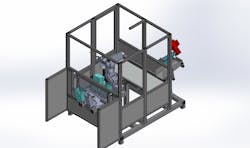

A two-station rotary dial stands on the other side of the robot. Half of the dial sits inside the guarding, facing the robot, and the other half stands outside the guarding (Figure 2). Each side of the dial features two mounts for stainless steel “nests” that resemble metal boxes with open ends. Each nest matches the dimensions of a specific variety of packing box for the plastic tubes. Five different kinds of nest accommodate the varied tube widths.

System operation begins when the operator selects a tube variety on an Allen-Bradley PanelView 5000 touchscreen mounted outside the guarding. This information is delivered to the PLC. The operator then places two nests into the mounts outside the guarding. The bottom of each nest features a steel plate with drilled holes. Three LTR-M05MA-NMS-403 photoelectric sensors from Contrinex (Corminboeuf, Switzerland; www.contrinex.com) sit below each nest mount. The photoelectric sensors can identify the nest type based on the pattern of holes drilled into the steel plate.

This information is also delivered to the PLC. If the nest type does not match the selected tube variety, a warning message appears on the HMI to inform the operator that the wrong nest type is loaded.
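The nest check amounts to decoding a three-sensor pattern and comparing the result with the nest required for the selected tube. The sketch below shows that logic in Python for illustration only; the real check runs in the PLC. The hole patterns, nest names, tube names, and the tube-to-nest table are all hypothetical, since the article does not list them.

```python
# Hypothetical hole patterns (sensor 1, sensor 2, sensor 3) for five nest types.
NEST_BY_PATTERN = {
    (True, False, False): "nest_A",
    (False, True, False): "nest_B",
    (False, False, True): "nest_C",
    (True, True, False): "nest_D",
    (True, False, True): "nest_E",
}

# Hypothetical lookup of the nest each tube variety requires.
REQUIRED_NEST = {"tube_01": "nest_A", "tube_02": "nest_B"}


def identify_nest(sensor_states):
    """Decode three sensor readings into a nest type, or None if unknown."""
    return NEST_BY_PATTERN.get(tuple(sensor_states))


def nest_warning(selected_tube, sensor_states):
    """Return an HMI warning string on a mismatch, or None when the nest is right."""
    loaded = identify_nest(sensor_states)
    required = REQUIRED_NEST[selected_tube]
    if loaded != required:
        return f"Wrong nest loaded: expected {required}, detected {loaded}"
    return None


print(nest_warning("tube_02", [True, False, False]))
```

Three on/off sensors can distinguish up to eight patterns, which is enough to cover the five nest types.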

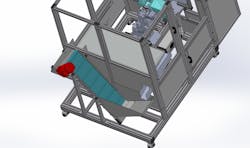

The operator then empties a bag of plastic tubes into the chute of a flex feeder on the side of the machine outside the guarding (Figure 3). A 1-foot-wide conveyor with cleats, mounted at a 55° angle, lifts the tubes to a chute that dumps them onto the flat-belt conveyor inside the guarding and next to the robot.

If the operator places the wrong variety of tube into the machine, i.e., a variety that does not match the initial selection on the HMI, the camera cannot locate the anticipated variety of tube. The system then pauses, an alert message appears on the HMI, and a red warning light flashes to alert the operator to the problem.

The conveyor moves until the software registers a minimum number of parts within the camera’s FOV; the minimum varies with the size of the tube variety. The robot then begins picking at a rate of 40 picks per minute, using a wand fabricated by Plastimation with an SMC vacuum cup end effector, and places the parts into the metal nests. When the number of parts in the camera’s FOV drops below the minimum, the conveyor moves again to deliver more tubes into the picking area.
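The feed behavior described here reduces to a simple rule: pick while enough parts are visible, otherwise advance the belt. The following sketch simulates that rule under simplifying assumptions. The function names are hypothetical, each belt advance is assumed to bring a fixed number of new parts into view, and each pick removes exactly one part.

```python
def next_action(parts_in_fov, min_parts):
    """Pick while enough parts are visible; otherwise advance the conveyor."""
    return "pick" if parts_in_fov >= min_parts else "advance_conveyor"


def simulate(initial_parts, min_parts, parts_per_advance, steps):
    """Run the rule for a number of steps and return the sequence of actions."""
    parts = initial_parts
    log = []
    for _ in range(steps):
        action = next_action(parts, min_parts)
        if action == "pick":
            parts -= 1                    # one tube leaves the field of view
        else:
            parts += parts_per_advance    # assumption: fixed batch arrives
        log.append(action)
    return log


print(simulate(initial_parts=0, min_parts=3, parts_per_advance=4, steps=5))
# ['advance_conveyor', 'pick', 'pick', 'advance_conveyor', 'pick']
```

In the real cell the minimum depends on the selected tube variety, so `min_parts` would be set per variety rather than fixed.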

The robot controller software is programmed to recognize the number of tubes to place in the loaded nest type and counts placements. When the first nest registers as full, the PLC switches the robot to fill the second nest. When both nests are filled, the dial rotates again such that the full nests stand outside the guarding and empty nests are situated inside the guarding for the robot to continue loading.
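The counting logic can be sketched as a small state tracker: fill the first nest, then the second, then rotate the dial. This is an illustration only. The class name `NestPair`, its attributes, and the capacity value are hypothetical, and the sketch ignores the safety conditions that gate dial rotation in the actual cell.

```python
class NestPair:
    """Tracks placements into the two nests currently facing the robot."""

    def __init__(self, capacity):
        self.capacity = capacity   # tubes per nest for the loaded nest type
        self.counts = [0, 0]
        self.rotations = 0

    def place_tube(self):
        """Record one placement and return the index of the nest that got it."""
        active = 0 if self.counts[0] < self.capacity else 1
        self.counts[active] += 1
        if self.counts == [self.capacity, self.capacity]:
            # Both nests are full: rotate the dial and start on empty nests.
            self.rotations += 1
            self.counts = [0, 0]
        return active


pair = NestPair(capacity=2)
placements = [pair.place_tube() for _ in range(4)]
print(placements, pair.rotations)  # [0, 0, 1, 1] 1
```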

LCA-4TR-S1-14-1060 and LCA-4TR-M-14-460-AP-156591 light curtains from Euchner (East Syracuse, NY, USA; www.euchner-usa.com) mount on either side of the operator’s work area. If an employee stands within the work area, the curtain is disrupted and dial movement is disabled to protect the employee.

The operator fits a packaging box like a sleeve over the full nest. The nest mounts have hinges that allow them to swing down 180°. The tubes fall out of the nest and into the packaging box when the operator slides the box down and off the nest. The box is then sealed and palletized.

The employee swings the nests back up in preparation for the robot to fill them again. When the employee steps back out of the work area, he presses an Opto-touch button from Banner Engineering (Minneapolis, MN, USA; www.bannerengineering.com) mounted on the outside of the work cell to re-enable dial rotation.
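The light curtain and reset button together behave like a latch: breaking the curtain disables rotation, and rotation stays disabled until the operator is clear and presses the button. The sketch below models only that behavior as described in the article. The class and parameter names are hypothetical, and a real safety function would run in safety-rated hardware rather than in application code like this.

```python
class DialInterlock:
    """Latching enable signal for dial rotation."""

    def __init__(self):
        self.rotation_enabled = True

    def update(self, curtain_clear, reset_pressed):
        """Apply one scan of inputs and return whether the dial may rotate."""
        if not curtain_clear:
            # Someone is in the work area: disable and stay disabled.
            self.rotation_enabled = False
        elif reset_pressed:
            # Area is clear and the operator has confirmed with the button.
            self.rotation_enabled = True
        return self.rotation_enabled


interlock = DialInterlock()
states = [
    interlock.update(curtain_clear=False, reset_pressed=False),  # operator enters
    interlock.update(curtain_clear=True, reset_pressed=False),   # steps out
    interlock.update(curtain_clear=True, reset_pressed=True),    # presses button
]
print(states)  # [False, False, True]
```

Stepping out of the work area alone does not re-enable the dial; the button press is required as well.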

Previously, three employees working one shift per day hand sorted and boxed the tubes with a throughput of between 40 and 60 packages per hour. The robot-based automated system produces roughly 60 loaded packages per hour with a single attending operator and runs three shifts per day.
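The figures quoted above support a quick per-person comparison. The sketch below uses only numbers from the article, and the variable names are hypothetical. The final value is an implied upper bound, not a stated box size: it assumes the robot picks continuously at 40 picks per minute for the whole hour, which the article does not claim.

```python
# Figures from the article.
manual_packages_per_hour = (40, 60)   # range for the manual process
manual_staff = 3
auto_packages_per_hour = 60
auto_staff = 1
picks_per_minute = 40

# Packages per person-hour for each approach.
manual_per_person = tuple(rate / manual_staff for rate in manual_packages_per_hour)
auto_per_person = auto_packages_per_hour / auto_staff

# Upper bound on tubes per package, assuming uninterrupted picking.
max_tubes_per_package = picks_per_minute * 60 / auto_packages_per_hour

print(f"manual: {manual_per_person[0]:.1f} to {manual_per_person[1]:.1f} per person-hour")
print(f"automated: {auto_per_person:.1f} per person-hour")
print(f"at most {max_tubes_per_package:.0f} tubes per package")
```

On these numbers, output per attending person rises from roughly 13 to 20 packages per hour to about 60.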

About the Author

Dennis Scimeca

Dennis Scimeca is a veteran technology journalist with expertise in interactive entertainment and virtual reality. At Vision Systems Design, Dennis covered machine vision and image processing with an eye toward leading-edge technologies and practical applications for making a better world. Currently, he is the senior editor for technology at IndustryWeek, a partner publication to Vision Systems Design.