Deep learning tools improve inspection of terminal spot welding

John Petry, Director, Deep Learning, at Cognex

Electromechanical parts in an automotive system have dozens of terminal spot welds, and reliable visual inspection of them is vital. This is a difficult task because of the inherent variations in the appearance of good parts and parts with defects. Automated solutions that use deep learning can combine the best qualities of human visual inspection and computerized systems.

Traditional machine vision solutions for automated product inspection rely on explicit, rules-based programming. In smart factories, they are used for precision measurement and for aligning parts with consistent features.

For inspection tasks where defects can be hard to find or the appearance of good parts varies significantly, writing a pattern-finding tool with a traditional vision system is not practical. This is especially true during final assembly check, where every possible upstream defect needs to be caught to avoid shipping bad parts. Alternatively, final inspection can be done visually by human quality technicians, but this work is slow and inconsistent.

Deep learning solutions, on the other hand, leverage datasets composed of real-world images that have been evaluated and labeled by expert quality inspectors. For example, the images may be labeled “good” or “bad” to differentiate between images of products that would pass inspection and those that would not. If the “bad” images are also labeled with the nature of the defect presented in the image, deep learning systems may learn how to identify the specific problem with a product that fails inspection.

Deep learning systems are trained with these datasets rather than being explicitly programmed to match rigid, expected visual patterns. Thus, deep learning-based inspection systems, unlike traditional rules-based systems, can make subjective quality judgments based on a wide range of potential anomalies.

For these reasons, final assembly is a good place to start with deep learning. Starting there can yield immediate cost savings as well as better inspection performance. Beyond a simple pass/fail decision, the deep learning system can often identify the type of defect. This information can serve as a process control measure to correct problems upstream.

Whether by calibrating a robot or adding a preventive maintenance step, rapidly identifying root causes of failures can result in immediate savings and improved product quality. Deep learning inspection can be used to implement a true smart manufacturing process combining automated and manual inspection data.

This ability to identify variable patterns in complex systems makes deep learning machine vision an exciting solution for inspecting objects with inconsistent shapes and defects, such as terminal spot welds in the automotive electronics industry.

Terminal Spot Welding Inspection

Inspecting terminal spot welds is an emerging use for deep learning technology. Spot welding is the process of joining two terminals or a terminal and a wire by fusing them without the use of solder. Starters, coils, and alternators have dozens of these welds. While automated electrical tests will catch gross defects, bad welds may still pass electrical testing and prove brittle in the field. Adding a reliable visual inspection check is critical.

Several types of spot welds appear on these parts. Hairpin (pin-to-pin) welds, often used in motor stators and alternators, are created by laser or resistance welding. Wire-to-pad welds, found in starter switches, solenoids, and sensors, are made mainly by resistance welding. Wire-to-wire welds use a resistance welding process that is well suited to sensors.

By its nature, spot welding creates a slightly variable three-dimensional metal object with reflective surfaces. The resulting images frequently contain shadows, reflections, colored regions, and surface texture even on perfectly good parts. These optical effects are often of the same magnitude as true defects, which include blowholes, burns, cracks, pitting, scratches, wrinkles, disconnection, and excessive or missing welds.

Developing a reliable, rules-based automated system to distinguish good parts from bad has been difficult because of these natural variations. Deep learning-based systems, however, are far better at detecting a wide range of anomalies, as long as they are trained with enough images that show what those anomalies look like.

Defect Detection Solutions

One of the biggest hurdles in deep learning is collecting enough examples of bad parts to adequately train a system. In any high-quality manufacturing process, the number of good parts produced dramatically overshadows the bad. It can be challenging to collect enough examples of each type of potential defect for a supervised neural network in which each defect must be explicitly, manually identified to train the model.

An unsupervised mode allows the neural network to be trained solely from images of good parts. As long as the training images span the full range of acceptable appearance variation, the system learns which parts to pass on to the next manufacturing step and which to pull from the production line.
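To illustrate the idea of training on good parts only, here is a minimal sketch in Python. It is not a neural network and not Cognex's unsupervised mode: it assumes each weld image has already been reduced to a numeric feature vector (the two features shown are hypothetical), learns the normal range of each feature from good parts, and flags any part that falls too far outside that range. The function names, the z-score measure, and the 1.2 threshold margin are all illustrative assumptions.

```python
from statistics import fmean, pstdev

def fit_good_model(good_features):
    """Learn per-feature mean and spread from feature vectors of good parts only."""
    columns = list(zip(*good_features))
    means = [fmean(col) for col in columns]
    # Floor the spread so that scoring never divides by zero.
    stds = [max(pstdev(col), 1e-6) for col in columns]
    return means, stds

def anomaly_score(features, model):
    """Largest absolute z-score across features: how far the part sits outside 'good'."""
    means, stds = model
    return max(abs(x - m) / s for x, m, s in zip(features, means, stds))

def calibrate_threshold(good_features, model, margin=1.2):
    """Place the pass/fail threshold a margin above the worst-scoring good training part."""
    return margin * max(anomaly_score(f, model) for f in good_features)

def inspect(features, model, threshold):
    """Pass parts that look like the good training set; fail everything else."""
    return "pass" if anomaly_score(features, model) <= threshold else "fail"

# Hypothetical features (e.g. weld area and mean brightness) for four good parts.
good = [[1.0, 0.50], [1.2, 0.60], [0.8, 0.40], [1.0, 0.50]]
model = fit_good_model(good)
threshold = calibrate_threshold(good, model)
print(inspect([1.0, 0.50], model, threshold))  # typical good part -> pass
print(inspect([2.0, 0.50], model, threshold))  # far outside the good range -> fail
```

No defective examples appear anywhere in training; the threshold is derived entirely from the spread of good parts, which is why the training set must cover the full range of acceptable variation.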

With an unsupervised mode, programming is simpler, and examples of defective parts aren’t required to begin training. Over time, as companies collect a large set of images of defective parts, they can use those images to train and test a supervised model and compare the two modes to see which works best.

Classification for Process Control

Pass/fail final assembly inspection is a good initial place to integrate deep learning into a manufacturing process. A further step is to evaluate each bad part to determine the cause of failure by classifying the defects found during inspection. This information allows companies to implement immediate corrective action to prevent additional bad parts from being manufactured.

There are two types of classification, and each offers advantages. One examines whole images (scenes) to make an overall assessment of a part. The other looks at the specific regions (features) of a part that were flagged by the inspection tool to determine what type of defect they represent.

Feature classification works well when the upstream inspection tool accurately captures the specific regions of the part that are defective and renders an accurate pass/fail judgment. It can also handle parts with multiple types of defects.

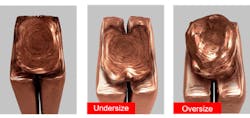

In spot welding, scene classification is normally preferred. Some types of defects, such as oversized and undersized welds, don’t lend themselves to easy isolation of specific defect regions. For these cases, scene classification offers a more robust approach. In cases with multiple types of defects, only the dominant one will be reported, but this is usually a small and acceptable trade-off for greater reliability.

In either case, the training process for classification is quite simple. Users present the system with scenes or feature regions of good parts and of each type of defect. No special labeling steps are required. The key, however, is to ensure that enough examples of each defect type are available, typically a few hundred of each.

With a well-trained classifier, manufacturers can take corrective action quickly. For example, oversized or undersized welds might be caused by an incorrect temperature setting on the welding hardware. Misaligned welds might require a robot to be recalibrated. Classification data can also be used for long-term process improvements, such as adding a preventive calibration step at the start of each shift if the system is prone to drift. As companies adopt smart manufacturing techniques, vision classification data can be tied into myriad datasets from the factory floor to improve every aspect of the process.
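As a rough sketch of how classifier output might feed process control, the Python below tallies one shift's predicted classes and flags any defect class whose share of inspected parts exceeds an alert level. The class names, the action table (taken from the examples above), the `shift_report` function, and the 1% alert fraction are all hypothetical; a real deployment would pull results from its own vision system and factory data.

```python
from collections import Counter

# Hypothetical mapping from defect class to a suggested corrective action,
# following the examples given in the text.
CORRECTIVE_ACTIONS = {
    "oversized": "Check the temperature setting on the welding hardware",
    "undersized": "Check the temperature setting on the welding hardware",
    "misaligned": "Recalibrate the welding robot",
}

def shift_report(predicted_classes, alert_fraction=0.01):
    """Tally classifier outputs for one shift and flag defect classes whose share
    of all inspected parts exceeds alert_fraction (an illustrative threshold)."""
    total = len(predicted_classes)
    counts = Counter(c for c in predicted_classes if c != "good")
    alerts = []
    for defect, n in counts.most_common():
        if total and n / total > alert_fraction:
            action = CORRECTIVE_ACTIONS.get(defect, "Review manually")
            alerts.append((defect, n, action))
    return alerts

# 100 hypothetical inspection results from one shift.
shift = ["good"] * 95 + ["misaligned"] * 4 + ["oversized"] * 1
for defect, count, action in shift_report(shift):
    print(f"{defect}: {count} parts -> {action}")
```

Reporting a rate rather than individual failures keeps isolated defects from triggering recalibration, while a sustained rise in one class points to an upstream cause.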

Validation for Production

Defect detection rates achieved with deep learning tools indicate that they can be much more accurate and consistent than manual inspection. However, many quality assurance teams and production managers are justifiably wary of adopting the latest technologies, which may look great in the lab but aren’t ready for real-world manufacturing conditions.

The first step toward mitigating these concerns is to plan a “burn-in” period during which the deep learning system runs in parallel with full manual inspection. The results of both inspections are recorded, and any differences are highlighted. Offline, a project expert reviews every image on which the two approaches disagree. This review serves two purposes. First, it establishes a clear performance baseline for measuring overkill (good parts wrongly rejected) and underkill (bad parts wrongly passed), which is critical in determining the project ROI. Second, any images that the deep learning system misjudged can be added to its training database to improve its performance incrementally.
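The burn-in comparison is essentially bookkeeping, and a minimal Python sketch helps make the overkill and underkill arithmetic concrete. It assumes each part has a manual verdict and a deep learning verdict, that the shared verdict is accepted as truth when the two agree, and that an expert verdict is supplied for every disagreement. The `BurnInRecord` type, `review_burn_in` function, and image IDs are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class BurnInRecord:
    image_id: str
    manual: str  # "good" or "bad" from the human inspector
    dl: str      # "good" or "bad" from the deep learning system

def review_burn_in(records, expert_verdicts):
    """Resolve disagreements with the expert's verdict, compute overkill and
    underkill rates for both methods, and list images the DL system misjudged."""
    errors = {m: {"overkill": 0, "underkill": 0} for m in ("manual", "dl")}
    n_good = n_bad = 0
    retrain = []
    for r in records:
        # Assumption: agreement is accepted as truth; otherwise the expert decides.
        truth = r.manual if r.manual == r.dl else expert_verdicts[r.image_id]
        if truth == "good":
            n_good += 1
        else:
            n_bad += 1
        for method, verdict in (("manual", r.manual), ("dl", r.dl)):
            if truth == "good" and verdict == "bad":
                errors[method]["overkill"] += 1   # good part wrongly rejected
            elif truth == "bad" and verdict == "good":
                errors[method]["underkill"] += 1  # bad part wrongly passed
        if r.dl != truth:
            retrain.append(r.image_id)  # candidate for the training database
    rates = {
        m: {
            "overkill_rate": e["overkill"] / n_good if n_good else 0.0,
            "underkill_rate": e["underkill"] / n_bad if n_bad else 0.0,
        }
        for m, e in errors.items()
    }
    return rates, retrain

records = [
    BurnInRecord("img1", "good", "good"),
    BurnInRecord("img2", "bad", "bad"),
    BurnInRecord("img3", "good", "bad"),
    BurnInRecord("img4", "bad", "good"),
    BurnInRecord("img5", "good", "bad"),
]
expert = {"img3": "bad", "img4": "bad", "img5": "good"}
rates, retrain = review_burn_in(records, expert)
print(rates)
print(retrain)
```

Overkill is measured against truly good parts and underkill against truly bad ones, so the two rates stay meaningful even though good parts vastly outnumber bad parts in production.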

Even when a project is validated, companies may choose to retain a small number of their best human inspectors. In this two-tiered inspection model, the automated vision system inspects each part and determines if it is good, bad, or ambiguous. Any ambiguous part is sent for further review by the manual inspectors.
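One common way to implement the good/bad/ambiguous split is with two thresholds on a confidence score, sketched below in Python. It assumes, hypothetically, that the vision system outputs a defect score between 0 and 1; the function name and the 0.2 and 0.8 thresholds are illustrative and would in practice be tuned on burn-in data.

```python
from collections import Counter

def route_part(defect_score, pass_below=0.2, fail_above=0.8):
    """Map a hypothetical defect score in [0, 1] to an inspection outcome.
    Thresholds are illustrative; in practice they would be tuned on burn-in data."""
    if not 0.0 <= defect_score <= 1.0:
        raise ValueError("defect_score must be in [0, 1]")
    if pass_below >= fail_above:
        raise ValueError("pass_below must be less than fail_above")
    if defect_score < pass_below:
        return "good"
    if defect_score > fail_above:
        return "bad"
    return "ambiguous"  # send to a human inspector for review

scores = [0.02, 0.05, 0.55, 0.91, 0.10, 0.30]
routes = Counter(route_part(s) for s in scores)
print(routes)
```

Widening the band between the two thresholds sends more parts to human review and lowers the risk of automated errors; narrowing it reduces inspector workload.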

This adds another level of confidence in the system, and the performance of the inspectors often improves as well. In normal production, the overwhelming majority of parts are good, which makes human inspectors’ work boring and their results inconsistent. With two-tiered inspection, every part the inspectors review calls for their expertise, which keeps them more consistently engaged in the process.

Bottom Line Benefits

According to research from Deloitte and the Manufacturers Alliance for Productivity and Innovation (MAPI), all U.S. manufacturers are likely to adopt smart factory processes by the end of 2030. Smart factories can achieve measurable business results, with the potential to transform the way work is done.

Adopting a deep learning inspection system can provide both direct and indirect savings. Direct labor savings include wages, hiring, and training expenses. Further direct savings come from the measurable reductions in overkill and underkill that the system provides.

Indirect savings can take several forms. They might stem from quality reports provided to end customers or used for field failure and product recall analysis. They might come from a better understanding of failure points in the manufacturing process, opening the door to additional improvements.

Deep learning tools for inspection of terminal spot welding offer the first truly viable alternative to inconsistent manual inspection and insufficient electrical testing, addressing an unsolved pain point in today’s automotive electronics industry.