Deep Learning at the Edge Simplifies Package Inspection

By Brian Benoit, Senior Manager Product Marketing, In-Sight Products, Cognex

Machine vision helps the packaging industry improve process control, improve product quality, and comply with packaging regulations. By removing human error and subjectivity with tightly controlled processes based on well-defined, quantifiable parameters, machine vision automates a variety of package inspection tasks. Machine vision tasks in the packaging industry include label inspection, optical character reading and verification (OCR/OCV), presence-absence inspection, counting, safety seal inspection, measurement, barcode reading, identification, and robotic guidance.

Machine vision systems deliver consistent performance when dealing with well-defined packaging defects. Parameterized, analytical, rule-based algorithms analyze package or product features captured within images that can be mathematically defined as either good or bad. However, analytical machine vision tools get pushed to their limits when potential defects are difficult to numerically define and the appearance of a defect significantly varies from one package to the next, making some applications difficult or even impossible to solve with more traditional tools.
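To make the rule-based approach concrete, here is a minimal sketch of a parameterized pass/fail check. The feature names and tolerance values are hypothetical and chosen only for illustration; in a real system the measurements would come from analytical vision tools, and the limits from the package specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tolerance:
    """Inclusive acceptance window for one measured feature."""
    low: float
    high: float

    def accepts(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical limits for a label inspection (illustrative values only).
LABEL_RULES = {
    "label_offset_mm": Tolerance(-1.0, 1.0),
    "label_skew_deg": Tolerance(-2.0, 2.0),
    "barcode_contrast": Tolerance(0.6, 1.0),
}


def inspect(measurements, rules):
    """Return (passed, failed_rule_names).

    A measurement that is missing counts as a failure, because a rule
    that cannot be evaluated cannot confirm the package is good.
    """
    failed = [
        name
        for name, tolerance in rules.items()
        if name not in measurements or not tolerance.accepts(measurements[name])
    ]
    return (not failed, failed)
```

A check like this works well as long as every defect can be reduced to a number and a limit. When a defect cannot be described that way, there is no rule to write, and that is where rule-based tools reach their limits.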

In contrast, deep learning software relies on example-based training and neural networks to analyze defects, find and classify objects, and read printed characters. Instead of relying on engineers, systems integrators, and machine vision experts to tune a unique set of parameterized analytical tools until application requirements are satisfied, deep learning relies on operators, line managers, and other subject-matter experts to label images. By showing the deep learning system what a good part looks like and what a bad part looks like, deep learning software can make a distinction between good and defective parts, as well as classify the type of defects present.

The Democratization of Deep Learning

Not so long ago, perhaps a decade, deep learning was available only to researchers, data scientists, and others with big budgets and highly specialized skills. However, over the last few years many machine vision system and solution providers have introduced powerful deep learning software tools tailored for machine vision applications.

In addition to VisionPro Deep Learning software from Cognex (Natick, MA, USA; www.cognex.com), Adaptive Vision (Gliwice, Poland; www.adaptive-vision.com) offers a deep learning add-on for its Aurora Vision Studio; Cyth Systems (San Diego, CA, USA; www.cyth.com) offers Neural Vision; Deevio (Berlin, Germany; www.deevio.ai) has a neural net supervised learning mode; MVTec Software (Munich, Germany; www.mvtec.com) offers MERLIC; and numerous other companies offer open-source toolkits to develop software specifically targeted at machine vision applications.

However, one common barrier to deploying deep learning in factory automation environments is the level of difficulty involved. Deep learning projects typically consist of four phases: planning, data collection and ground truth labeling, optimization, and factory acceptance testing (FAT). Training a model for any given application also frequently requires many hundreds of images and powerful hardware in the form of a PC with a GPU. Deep learning is now easier to use, however, thanks to the introduction of innovative technologies that process images “at the edge.”

What Is Edge Learning?

Deep learning at the edge (edge learning), a subset of deep learning, uses a set of pretrained algorithms that process images directly on-device. Compared with more traditional deep learning-based solutions, edge learning requires less time and fewer images, and involves simpler setup and training.

Edge learning requires no automation or machine vision expertise for deployment and consequently offers a viable automation solution for everyone—from machine vision beginners to experts. Instead of relying on engineers, systems integrators, and machine vision experts, edge learning uses the existing knowledge of operators, line engineers, and others to label images for system training.

Consequently, edge learning helps line operators looking for a straightforward way to integrate automation into their lines as well as expert automation engineers and systems integrators who use parameterized, analytical, rule-based machine vision tools but lack specific deep learning expertise. By embedding efficient, rules-based machine vision within a set of pretrained deep learning algorithms, edge learning devices provide the best of both worlds, with an integrated tool set optimized for packaging and factory automation applications.

With a single smart camera-based solution, edge learning can be deployed on any line within minutes. This solution integrates high-quality vision hardware, machine vision tools that preprocess images to reduce computational load, deep learning networks pretrained to solve factory automation problems, and a straightforward user interface designed for industrial applications.

Edge learning differs from existing deep learning frameworks in that it is not general purpose but is specifically tailored for industrial automation. It also differs from other methods in its focus on ease of use across all stages of application deployment. For instance, edge learning requires fewer images to achieve proof of concept, less time for image setup and acquisition, no external GPU, and no specialized programming.

Standard Classification Example

Developing a standard classification application using traditional deep learning methodology may require hundreds of images and several weeks. Edge learning makes defect classification much simpler. By analyzing multiple regions of interest (ROIs) in its field of view (FOV) and classifying each of those regions into multiple categories, edge learning lets anyone quickly and easily set up sophisticated assembly verification applications.

In the food packaging industry, edge learning technology is increasingly being used for verification and sorting of frozen meal tray sections. In many frozen meal packing applications, robots pick and place various food items into trays passing by on a high-speed line. For example, robots may place protein in the bottom center section, vegetables in the top left section, a side dish or dessert item in the top middle section, and some type of starch in the top right section of each tray.

Each section of a tray may contain multiple SKUs. For example, the protein section may include either meat loaf, turkey, or chicken. The starch section may contain pasta, rice, or potatoes. Edge learning makes it possible for operators to click and drag bounding boxes around characteristic features on a meal tray, fixing defined tray sections for training.
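The tray example can be pictured as a small data structure: each section has a set of classes it may contain, and each product recipe names the class expected in each section. The sketch below is an illustration only, not the vendor's software. The names `TRAY_LAYOUT` and `verify_tray` are hypothetical, and the per-section predictions are assumed to come from whatever classifier is running on the camera.

```python
# Section name -> classes that may appear there (taken from the example above).
TRAY_LAYOUT = {
    "protein": {"meat loaf", "turkey", "chicken"},
    "starch": {"pasta", "rice", "potatoes"},
}


def verify_tray(predicted, recipe, layout=TRAY_LAYOUT):
    """Compare per-section predictions with the recipe for the current product.

    predicted: section -> class reported by the classifier for that section
    recipe:    section -> class that should be in that section
    Returns section -> "OK" or "NG".
    """
    results = {}
    for section, expected in recipe.items():
        # Reject recipes that name a class the section was never trained on.
        if expected not in layout.get(section, set()):
            raise ValueError(
                f"{expected!r} is not a known class for section {section!r}"
            )
        results[section] = "OK" if predicted.get(section) == expected else "NG"
    return results
```

For a recipe of turkey and rice, a tray predicted as turkey and pasta would come back with the protein section OK and the starch section NG.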

Next, the operator reviews a handful of images and labels each one with its class. Frequently, this can be done in a few minutes, with as few as three to five images for each class. During high-speed operation, the edge learning system can accurately classify the different sections. To accommodate entirely new classes or new varieties of existing classes during production, the tool can be updated with a few images in each new category.
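The article does not describe how the pretrained networks work internally. As a stand-in, the sketch below uses a nearest-centroid classifier, a simple and well-known technique, to show why a handful of labeled examples per class can be enough once images have been reduced to good feature vectors. The feature vectors here are made-up two-element lists; in practice they are assumed to come from a pretrained feature extractor.

```python
import math


class NearestCentroidClassifier:
    """Few-shot classifier: each class is represented by the mean of its examples."""

    def __init__(self):
        self._sums = {}    # label -> element-wise sum of feature vectors
        self._counts = {}  # label -> number of examples seen

    def add_example(self, label, features):
        """Add one labeled example; new classes can be added at any time."""
        if label in self._sums:
            self._sums[label] = [s + f for s, f in zip(self._sums[label], features)]
            self._counts[label] += 1
        else:
            self._sums[label] = list(features)
            self._counts[label] = 1

    def _centroid(self, label):
        count = self._counts[label]
        return [s / count for s in self._sums[label]]

    def predict(self, features):
        """Return the label whose centroid is closest in Euclidean distance."""
        if not self._sums:
            raise ValueError("no training examples have been added")
        return min(self._sums, key=lambda label: math.dist(self._centroid(label), features))
```

Adding a new class in this sketch means calling `add_example` a few more times with a new label. No existing class has to be retrained, which mirrors the update step described above.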

When to Use Deep Learning

For complex or highly customized applications, traditional deep learning is an ideal solution because it provides the capacity to process large and highly detailed image sets. Often, such applications involve objects with significant variations, which demands robust training capabilities and advanced computational power. Image sets with hundreds or thousands of images must be used for training to account for such significant variation and to capture all potential outcomes.

Because it enables users to analyze such image sets quickly and efficiently, traditional deep learning delivers an effective solution for automating sophisticated tasks. Full-fledged deep learning products and open-source frameworks are well designed to address complex applications. However, many factory automation applications entail far less complexity, making edge learning a more suitable solution.

When to Use Edge Learning

With algorithms pretrained specifically for factory automation requirements and use cases, edge learning eliminates the need for an external GPU and hundreds or thousands of training images. Such pretraining, supported by appropriate traditional parameterized analytical machine vision tools, can vastly improve many machine vision tasks. The result is edge learning, which combines the power of deep learning with a light and fast set of vision tools that line engineers can apply daily to packaging problems and other factory automation challenges.

Compared with deep learning solutions that can require hours to days of training and hundreds to thousands of images, edge learning tools are typically trained in minutes using a few images per class. Edge learning streamlines deployment to allow fast ramp-up for manufacturers and the ability to adjust quickly and easily to changes.

Deep learning's ability to find variable patterns in complex systems makes it an exciting machine vision solution for inspecting objects with inconsistent shapes and defects, such as flexible packaging in first aid kits.

Edge Learning Tools and Hardware

For the purposes of edge learning, Cognex has combined traditional analytical machine vision tools in ways specific to the demands of each application, eliminating the need to chain vision tools or devise complex logic sequences. Such tools offer fast preprocessing of images and the ability to extract density, edge, and other feature information that is useful for detecting and analyzing manufacturing defects. By finding and clarifying the relevant parts of an image, these tools reduce the computational load of deep learning.
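As a rough illustration of how preprocessing can shrink the problem before a neural network sees it, the sketch below crops an image to a region of interest and summarizes that region as a single edge-density number. It is not Cognex's tooling. The function names, the simple neighbor-difference edge test, and the threshold are all assumptions made for this example, and the image is a plain list of rows of grayscale values.

```python
def crop(image, top, left, height, width):
    """Return the region of interest as a new list of rows."""
    return [row[left:left + width] for row in image[top:top + height]]


def edge_density(gray, threshold):
    """Fraction of pixels with a strong intensity step to the right or below.

    The last row and column are skipped because they have no neighbor
    to compare against in one of the two directions.
    """
    rows = len(gray)
    cols = len(gray[0]) if rows else 0
    if rows < 2 or cols < 2:
        raise ValueError("need at least a 2x2 image")
    edges = 0
    for r in range(rows - 1):
        for c in range(cols - 1):
            step_right = abs(gray[r][c + 1] - gray[r][c])
            step_down = abs(gray[r + 1][c] - gray[r][c])
            if max(step_right, step_down) > threshold:
                edges += 1
    return edges / ((rows - 1) * (cols - 1))
```

Cropping discards pixels that cannot affect the decision, and a compact feature such as edge density gives a downstream classifier far less data to process than the raw image.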

For example, Cognex’s In-Sight 2800 vision system packs sophisticated hardware into a small form factor and runs edge learning entirely on the camera. The embedded smart camera platform includes an integrated autofocus lens, lighting, and an image sensor. The heart of the device is a 1.6-MPixel sensor.

An autofocus lens keeps the object of interest in focus, even as the FOV or distance from the camera changes. Smaller and lighter than equivalent mechanical lenses, liquid autofocus lenses also offer improved resistance to shock and vibration.

Key for a high-quality image, the smart camera is available with integrated lighting in the form of a multicolor torchlight that offers red, green, blue, white, and infrared options. To maximize contrast, minimize dark areas, and bring out necessary detail, the torchlight comes with field-interchangeable optical accessories such as lenses, color filters, and diffusers, increasing system flexibility for handling numerous applications.

The In-Sight 2800 vision system runs on 24 V power and has an IP67-rated housing, and its Gigabit Ethernet connectivity provides fast communication and image offloading. This edge learning-based platform also includes traditional analytical machine vision tools that can be parameterized for a variety of specialized tasks, such as location, measurement, and orientation.

Training Edge Learning

Training edge learning is like training a new employee on the line. Edge learning users don’t need to understand machine vision systems or deep learning. Rather, they only need to understand the classification problem that needs to be solved. If it is straightforward—for instance, classifying acceptable and unacceptable parts as OK/NG—the user must only understand which items are acceptable and which are not.

Sometimes line operators can contribute process knowledge that is not readily apparent, derived from testing down the line, which can reveal defects that are hard even for humans to detect. Edge learning is particularly effective at figuring out which variations in a part are significant and which variations are purely cosmetic and do not affect functionality.

Edge learning is not limited to binary classification into OK/NG; it can classify objects into any number of categories. If parts need to be sorted into three or four distinct categories, depending on components or configurations, that can be set up just as easily.

Edge Learning in Packaging Applications

Because it simplifies factory automation and handles machine vision tasks of varying complexity, edge learning is useful in a wide range of industries, including medical, pharmaceutical, and beverage packaging applications.

Automated visual inspection is essential for supporting packaging quality and compliance while improving packaging line speed and accuracy. Fill level verification is an emerging use of edge learning technology. In the medical and pharmaceutical industries, vials filled with medication to a preset level must be inspected before they are capped and sealed to confirm that levels are within proper tolerances.

Unconfused by reflection, refraction, or other image variations, edge learning can be easily trained to verify fill levels. Fill levels that are too high or too low can be quickly classified as NG, while only those within the proper tolerances are classified as OK.

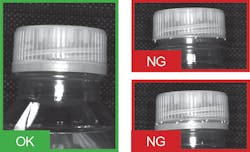

Another emerging use of edge learning technology is cap inspection in the beverage industry. Bottles are filled with soft drinks and juices and sealed with screw caps. If the rotary capper cross-threads a cap, applies improper torque, or causes other damage during the capping process, it can leave a gap that allows for contamination or leakage.

To train an edge learning system for cap inspection, images showing well-sealed caps are labeled as good; images showing caps with slight gaps, which might be almost imperceptible to the human eye, are labeled as no good. After training is complete, only fully sealed caps are classified as OK. All other caps are classified as NG.

While challenges for traditional rule-based machine vision continue to arise as packaging application complexity increases, easy-to-use edge learning on embedded smart camera platforms has proved to be a game-changing technology. Edge learning is more capable than traditional machine vision analytical tools and is extremely easy to use with previously challenging applications.