High-speed vision checks food cans concisely

A novel printing code and decision tree-based recognition software team up to check alphanumeric codes on canned goods.

Andrew Wilson, European Editor

With the advent of high-speed canning lines, the risk of mislabelling grows, and the task of checking that every single can carries the correct label and contains the correct product becomes ever more complex. To perform this check, the ‘Product Code’ (the alphanumeric code ink-jet printed on the end of the can) must be read regardless of the can’s rotation, and the barcode on the can’s label must also be read. Failure to check every single can leaves the manufacturer open to mislabelling, resulting in crippling fines and product recalls.

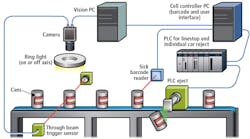

Figure 1: Machine Vision Technology has developed a vision-based system that can read both alphanumeric characters printed on canned foods and linear barcode labels at speeds of up to 800 cans/min. One PC interfaces to the vision system that inspects the ends of the cans, while the other (the Cell Controller) interfaces to a label barcode reader, controls when the line automatically stops and provides a GUI for the operator to change products. This second PC sends digital I/O pass/fail results to a PLC that tracks the cans and controls the reject mechanism.

Reading printed codes on cans is challenging because the specular nature of the can surfaces must be compensated for with specialized illumination. Not only must different printed alphanumeric codes be read at multiple orientations, but the linear barcode on each label must also be checked to ensure that the correct label has been applied to the correct canned food.

This was the problem faced by Princes Limited (Liverpool, UK; www.princes.co.uk) after purchasing the canning divisions of Premier Foods (St Albans, Hertfordshire, UK; www.premierfoods.co.uk) located at Long Sutton, Lincolnshire and Wisbech, Cambridgeshire, UK.

Canned heat

“At present,” says Brian Castelino, Managing Director of Machine Vision Technology Ltd. (Leamington Spa, UK; www.machine-vision-technology.co.uk), “most of the canned food sold in the UK, such as fruit, soup and vegetables, is produced by Princes in standard-size cans. Because a large variety of products are produced, the production run on any given line may need to be changed every four hours. This necessitates a modular approach to the canning systems, the ink-jet alphanumeric printing and labelling systems, and the machine vision systems used to inspect them.” Indeed, with over 3,000 different products being manufactured, any errors in this process can prove very expensive.

In each facility, after a can is filled and sealed, an ink-jet alphanumeric code is applied to it, and the can is then steam cooked before the label is applied. At this stage, the cans are still unlabelled, since labels would not survive steam cooking. Should the line jam, the cans back up, causing them to be over-cooked. To prevent this, cans must be removed from the production line, palletized and then re-introduced into the system (hopefully on to the correct production line running the same batch). This is just one of many potential sources of mislabelling.

At the point of labelling, the Product Code on the can is read using high-speed, rotation-independent optical character recognition (OCR) to verify that the can contains the correct product. The linear barcode on the can’s label is then read with a barcode scanner to verify that the label is correct.

“Should an incorrect label be applied to a can, large grocery chains in the UK can impose fines, often as large as $1M, on their suppliers, in addition to the cost of product recalls and potential loss of future business. It goes without saying, then, that developing a foolproof method to prevent such outcomes is of great importance,” says Castelino.

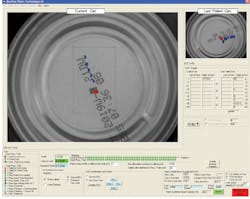

Figure 2: In this example, two alphanumeric lines are printed on the base of a can using a high-speed ink-jet printer. While the first indicates the best before date and a code to describe the contents of the can, the second references the time the can was produced and the production line.

For this reason, Machine Vision Technology was tasked with developing a vision-based system that could overcome these challenges (Figure 1). In operation, the system reads both ink-jet printed codes and linear barcode labels at speeds of up to 800 cans/min with a false reject rate of less than 0.3% (i.e. fewer than 3 cans in every 1,000 inspected). A video of the system in operation can be found at http://bit.ly/VSD-MVTOCR. False rejects occur when the system fails to read all four characters of the Product Code, usually because of poor print quality caused by factors such as water on the cans during printing, which results in missing or badly distorted characters.
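To put the quoted false reject rate in perspective, the short calculation below converts it into cans per minute and per shift. It treats 0.3% as an upper bound and assumes a hypothetical 8-hour shift running continuously at the full 800 cans/min; neither assumption comes from the article.

```python
# Worked arithmetic: what a false reject rate means on one line.
# Speed and rate come from the article; the 8-hour continuous shift is an
# assumption chosen purely for illustration.
CANS_PER_MINUTE = 800
FALSE_REJECT_RATE = 0.003  # "less than 0.3%", treated here as an upper bound


def false_rejects(minutes: float, cans_per_minute: float = CANS_PER_MINUTE,
                  rate: float = FALSE_REJECT_RATE) -> float:
    """Upper bound on good cans rejected because their code could not be read."""
    return minutes * cans_per_minute * rate


per_minute = false_rejects(1)       # about 2.4 cans
per_shift = false_rejects(8 * 60)   # about 1152 cans in a hypothetical shift
print(f"{per_minute:.1f} per minute, {per_shift:.0f} per 8-hour shift")
# prints: 2.4 per minute, 1152 per 8-hour shift
```

Even a sub-1% rate therefore produces a steady trickle of rejected good cans, which is why print quality at the ink-jet stage matters as much as the reading software.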

Alphanumeric codes

“After cans are filled and capped with a lid, an alphanumeric code is printed on the top or bottom of the can (in the case of ring-pull cans) using a high-speed ink-jet printer from Videojet Technologies (Wood Dale, IL, USA; www.videojet.com). This code includes two lines of text. The first indicates the best before date and a code (the Product Code) that describes the contents of the can. The second has a code to reference the time the can was produced and the production line on which it was produced (Figure 2). The can then passes into a steam cooker to cook and sterilize the food. No label is applied to the can at this stage, since it would peel off in the steam,” explains Castelino.

“There were several challenges to achieving a reliable system with an extremely low false reject rate. Among them were the high line speed (2m/s, or 12 cans/s); the random orientation of the Product Code on the can end when viewed from the top; the variability of printing across eight different printing lines with potentially sixteen different printers; and the variability in print quality due to the environment (water, steam, and slippage of the can on the line past the printer). This variability causes compressed print, and print head rotation results in italicised characters.”
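The line figures quoted above imply a tight time budget per can. The sketch below works it out, assuming evenly spaced cans and, for the headroom figure, that each image is processed to completion before the next can arrives; the 50ms OCR latency is the figure given later in the article.

```python
# Per-can time and spacing budget implied by 2 m/s and 12 cans/s.
# Assumes evenly spaced cans and strictly sequential image processing.
LINE_SPEED_M_S = 2.0
CANS_PER_SECOND = 12
OCR_LATENCY_S = 0.050  # result available ~50 ms after image capture

interval_s = 1 / CANS_PER_SECOND                     # time between successive cans
pitch_mm = LINE_SPEED_M_S / CANS_PER_SECOND * 1000   # centre-to-centre spacing
headroom_s = interval_s - OCR_LATENCY_S              # spare time before the next can

print(f"interval {interval_s * 1000:.1f} ms, pitch {pitch_mm:.0f} mm, "
      f"headroom {headroom_s * 1000:.1f} ms")
# prints: interval 83.3 ms, pitch 167 mm, headroom 33.3 ms
```

With roughly 83ms between cans, a 50ms read leaves some margin even without pipelining, but not enough for brute-force approaches such as repeatedly rotating each image.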

“One of the main obstacles in reading these alphanumerics 800 times per minute,” says Castelino, “is that the cans can be presented to the imaging system in different orientations, making high-speed reading difficult.” One solution would be to rotate each can’s image until the markings could be discerned, a task that would place a heavy burden on any machine vision software. Instead, Castelino and his colleagues worked with Princes Limited to develop a novel alphanumeric code that avoids this problem.

To overcome the random orientation of the print on the top of the can, a ‘locator’ is used to find the Product Code in both position and orientation. This locator consists of a hyphen followed by a rectangular block seven dots tall and five dots wide. This pattern is referred to as an ‘Icon’ that ‘points to’ the four-digit Product Code to its right. Software running on the PC then rotates the coordinate frame to align with the orientation of the print, enabling the OCR software to read the code (Figure 3).
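The frame rotation described above can be sketched as follows. This is a minimal illustration, not the software actually used: it assumes the locator has already returned the centroids of the hyphen and of the Icon block, takes the reading direction as the vector from hyphen to Icon, and maps image points into a frame where the print runs along the positive x axis. The function names are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def print_frame(hyphen: Point, icon: Point) -> Tuple[Point, float]:
    """Return origin and angle (radians) of a frame aligned with the print.

    Assumes centroids of the hyphen and Icon block are already known; the
    Product Code lies further along the hyphen -> Icon direction.
    """
    dx, dy = icon[0] - hyphen[0], icon[1] - hyphen[1]
    return icon, math.atan2(dy, dx)


def to_print_coords(p: Point, origin: Point, angle: float) -> Point:
    """Map an image point into the print-aligned frame (rotate by -angle)."""
    x, y = p[0] - origin[0], p[1] - origin[1]
    c, s = math.cos(angle), math.sin(angle)
    return (x * c + y * s, -x * s + y * c)


# Example: print running straight "down" the image (+y direction)
origin, angle = print_frame((100.0, 100.0), (100.0, 120.0))
x, y = to_print_coords((100.0, 140.0), origin, angle)  # roughly (20, 0)
```

Once every candidate character position is expressed in this frame, the OCR stage only ever sees horizontal text, so it never has to search over rotations itself.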

Specular reflection

Specular reflection also made it difficult to obtain images from which the alphanumeric code could be identified. In the initial design of the system, a CV-A11 monochrome progressive-scan 1/3in CCD camera with analog video output from JAI (San Jose, CA, USA; www.jai.com) was mounted at a stand-off distance of 350mm from the canning line. Fitted with a 25mm Cosmicar lens, the camera was set to an exposure time of 1/10,000s and triggered by a photoelectric sensor to capture images of the cans as they moved under an approximately 3in square FOV at 2m/s.
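The short exposure is what freezes the moving cans. The calculation below estimates motion blur from the figures in the text; the 640-pixel sensor width is a hypothetical round number used only to express the blur in pixels and is not taken from the CV-A11 specification.

```python
# Motion blur: distance a can travels while the shutter is open.
# Speed, exposure and FOV come from the text; the sensor width is assumed.
LINE_SPEED_M_S = 2.0
EXPOSURE_S = 1 / 10_000
FOV_MM = 3 * 25.4        # "approximately 3in square"
SENSOR_WIDTH_PX = 640    # hypothetical, not a CV-A11 specification

blur_mm = LINE_SPEED_M_S * 1000 * EXPOSURE_S   # 0.2 mm of travel
mm_per_px = FOV_MM / SENSOR_WIDTH_PX           # about 0.119 mm per pixel
blur_px = blur_mm / mm_per_px                  # about 1.7 pixels

print(f"blur {blur_mm:.2f} mm = {blur_px:.1f} px")
# prints: blur 0.20 mm = 1.7 px
```

Under these assumptions the smear is under two pixels, small relative to a dot-matrix character several dots wide; a longer exposure would scale the blur proportionally.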

Figure 3: To overcome the random orientation of the print on the top of the can, a ‘locator’ is used to find the Product Code in both position and orientation. This locator consists of a hyphen followed by a rectangular block seven dots tall and five dots wide. This Icon ‘points to’ the four-digit Product Code to its right. Software running on the PC then rotates the coordinate frame to align with the orientation of the print, enabling the OCR software to read the code.

“To enhance and make use of the specular reflection on shiny cans,” says Castelino, “an on-axis fluorescent ring light from StockerYale (now ProPhotonix; Salem, NH, USA; www.prophotonix.com) was used, while for cans with a matte finish, a larger-diameter off-axis white ring light, also from StockerYale, was employed.” Images are then transferred from the camera to the system’s 19in rack-mount industrial PC using a PC2Vision analog frame grabber from Teledyne DALSA (Waterloo, ON, Canada; www.teledynedalsa.com).

Reading codes

To read the codes from the images, Machine Vision Technology chose to deploy the Minos decision tree-based pattern recognition tools, part of the Common Vision Blox (CVB) software package from Stemmer Imaging (Puchheim, Germany; www.commonvisionblox.com).

“Before deploying this software in a run-time environment, the Icon and the complete OCR font need to be trained. This is a one-time procedure in which the discrete grey-level features within the image are automatically extracted into a training set,” says Castelino. This involves gathering images of all the different characters from numerous types of Videojet printers, as well as differently scaled versions of the proprietary Icon used to determine the position and orientation of the code. The Minos software then uses a neural network approach to build a classifier.

As well as detecting the features of each trained object type, CVB Minos uses negative examples in the sample images to improve detection and classification of the alphanumeric code and to identify characters with overlapping extremities or characters that are partially hidden. “At runtime, Minos uses the classifier to read often poorly defined characters, with damage or reflections across them, with uncanny accuracy,” says Castelino.
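The role of negative examples can be illustrated with a much simpler classifier. The sketch below is a stand-in nearest-neighbour classifier, not Minos’s algorithm: training samples are hypothetical grey-level feature vectors labelled with a character, negative examples are labelled `None`, and a query that lands nearest a negative example, or too far from anything trained, is reported as a failed read rather than forced into a character class.

```python
import math
from typing import List, Optional, Sequence, Tuple

# (feature vector, label); label None marks a negative example
Sample = Tuple[Sequence[float], Optional[str]]


def classify(features: Sequence[float], training: List[Sample],
             max_distance: float) -> Optional[str]:
    """Return the nearest training label, or None for a failed read.

    None is returned if the closest sample is a negative example or if
    nothing trained lies within max_distance of the query.
    """
    best_label: Optional[str] = None
    best_dist = math.inf
    for vec, label in training:
        d = math.dist(features, vec)  # Euclidean distance (Python 3.8+)
        if d < best_dist:
            best_label, best_dist = label, d
    if best_dist > max_distance:
        return None
    return best_label


# Toy 2-D features: two characters and one negative (background) example
training = [((0.0, 0.0), "0"), ((10.0, 10.0), "8"), ((5.0, 5.0), None)]
```

Without the negative sample at (5, 5), a smudge halfway between "0" and "8" would be forced into one of them; with it, the ambiguous input is rejected instead.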

Tracking codes

Approximately 50ms after image capture, the OCR result is determined, and pass/fail data for each can moving along the conveyor is passed to a PLC, which tracks the can to a pneumatically operated rejection mechanism that can eject individual cans. The can then passes on to a labeller to have the label applied. The label carries a linear barcode, often in UPC, Code 39, Code 128 or UCC/EAN-128 format. In the case of a UPC code, for example, the encoded information includes the manufacturer and item number of the product, which point-of-sale (POS) systems use, for example, to price the product.
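UPC-A symbols end in a check digit that lets a reader detect most single-digit errors. The sketch below shows the standard UPC-A check-digit calculation (odd positions weighted by 3, even positions by 1); it illustrates the symbology only and is not a description of what the SICK scanner or the Cell Controller actually runs. The function names are hypothetical.

```python
def _is_digits(s: str) -> bool:
    """True only for ASCII digit strings (str.isdigit alone accepts e.g. '²')."""
    return s.isascii() and s.isdigit()


def upc_a_check_digit(first11: str) -> int:
    """Compute the UPC-A check digit from the first 11 digits."""
    if len(first11) != 11 or not _is_digits(first11):
        raise ValueError("expected 11 digits")
    digits = [int(ch) for ch in first11]
    odd = sum(digits[0::2])   # positions 1, 3, ..., 11 (weight 3)
    even = sum(digits[1::2])  # positions 2, 4, ..., 10 (weight 1)
    return (10 - (odd * 3 + even) % 10) % 10


def upc_a_is_valid(code: str) -> bool:
    """True if a 12-digit UPC-A string carries a correct check digit."""
    return (len(code) == 12 and _is_digits(code)
            and upc_a_check_digit(code[:11]) == int(code[11]))


# upc_a_check_digit("03600029145") -> 2, so "036000291452" is valid
```

A valid check digit only proves the barcode was read consistently; confirming it is the right product still requires comparing the decoded data against the product currently selected for the line.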

A laser-based scanner from SICK (Minneapolis, MN, USA; www.sick.com) installed within the labeller sends the raw data back to the second PC in the system (the Cell Controller) over an RS232 interface. Should the scanner fail to read the label on an individual can (for example, because of a flapping label), the system is set to allow ten such ‘fail to reads’ before automatically stopping the line. However, if the label on even one can carries the wrong data, the system automatically stops the line, and a supervisor must intervene and correct the problem before the line can be restarted using the system’s GUI.
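The two stop rules above can be sketched as a small state machine. This is a hypothetical model, not the Cell Controller’s code: it assumes the no-read count is cumulative until a reset and that the line stops when the count reaches ten; the article does not say whether the count is consecutive or cumulative, or exactly where the limit falls. Only a wrong-data stop is flagged as needing a supervisor.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LabelMonitor:
    """Hypothetical line-stop logic for label barcode scans."""
    expected: str            # barcode data for the product currently selected
    max_no_reads: int = 10   # assumed limit; counted cumulatively until reset
    no_reads: int = 0
    stopped: bool = False
    needs_supervisor: bool = False

    def on_scan(self, data: Optional[str]) -> bool:
        """Process one scan (None = no read). Return True if the line may run."""
        if self.stopped:
            return False
        if data is None:
            self.no_reads += 1
            if self.no_reads >= self.max_no_reads:
                self.stopped = True
        elif data != self.expected:
            # A single wrong label stops the line and latches a supervisor flag
            self.stopped = True
            self.needs_supervisor = True
        return not self.stopped

    def supervisor_reset(self) -> None:
        """Clear counters and flags, e.g. after a restart from the GUI."""
        self.no_reads = 0
        self.stopped = False
        self.needs_supervisor = False


monitor = LabelMonitor(expected="036000291452")
```

Tolerating a few no-reads while refusing any wrong read reflects the asymmetry in cost: an unread label is an inconvenience, but a wrong label is exactly the failure that attracts retailer fines.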

User friendly

“This user interface has two distinct functions,” says Castelino. “First, it displays the status of each can as it passes through the imaging system and shows the last can that failed inspection. Second, the user interface is used to configure the system for the numerous products that Princes Limited produces.” The operator simply scrolls through the product list and selects the new product. In this way, with a single mouse click, the operator can configure both the barcode scanner and the vision system for the new product without needing to ‘train’ the first can in the new batch.
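One way to picture single-click changeover is a product table in which each entry holds everything both inspection stations need. The sketch below is hypothetical: the `ProductRecipe` structure, field names and catalogue entry are invented for illustration, and the article does not describe how the system stores its product list.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ProductRecipe:
    """Hypothetical per-product settings loaded when the operator picks a product."""
    product_code: str  # four-digit Product Code expected on the can end
    barcode: str       # data expected from the label barcode

    def end_matches(self, ocr_code: str) -> bool:
        return ocr_code == self.product_code

    def label_matches(self, scanned: str) -> bool:
        return scanned == self.barcode


def select_product(catalogue: Dict[str, ProductRecipe], name: str) -> ProductRecipe:
    """Return the recipe for the selected product; no per-batch training needed."""
    try:
        return catalogue[name]
    except KeyError:
        raise ValueError(f"unknown product: {name!r}") from None


# Example catalogue with made-up entries
catalogue = {"product A": ProductRecipe("1234", "036000291452")}
recipe = select_product(catalogue, "product A")
```

Because the OCR font and Icon were trained once for all products, a changeover only swaps the expected values, which is why no sample can needs to be taught for each new batch.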

At present, the vision system has been deployed on eight canning lines at Princes’ production facility in Wisbech and on 16 lines at the company’s Long Sutton facility. At a cost of approximately $75,000 per system, the systems typically save Princes £2.5M annually in potential fines from UK food retailers.

Castelino has a few words of advice for other systems integrators tasked with developing such systems. “A number of larger machine vision companies believe that machine vision systems should be regarded as ‘fix and forget’ items,” he says. “However, this could not be further from the truth. Although the cost of the hardware to accomplish such a task may be minimal, developers should realize that a successful installation demands an intimate knowledge of how the customer’s products may evolve over time and how to accommodate these changes. Just as important is an understanding of the time needed to provide extensive customer training and to impart knowledge of the external peripherals (such as high-speed ink-jet printers) that affect how well such systems perform.”

Companies mentioned

JAI

San Jose, CA, USA

www.jai.com

Machine Vision Technology

Leamington Spa, UK

www.machine-vision-technology.co.uk

Premier Foods

St Albans, Hertfordshire, UK

www.premierfoods.co.uk

Princes Limited

Liverpool, UK

www.princes.co.uk

ProPhotonix

Salem, NH, USA

www.prophotonix.com

SICK

Minneapolis, MN, USA

www.sick.com

Stemmer Imaging

Puchheim, Germany

www.commonvisionblox.com

Teledyne DALSA

Waterloo, ON, Canada

www.teledynedalsa.com

Videojet Technologies

Wood Dale, IL, USA

www.videojet.com