“OPC Vision” release candidate presented

Image processing to become integral component of industrial automation

Martin Cassel

In the future, the two worlds of image processing and industrial automation will interlock seamlessly and in real time, without proprietary interfaces. At least, that’s what the “OPC UA Companion Specification for Machine Vision” (OPC Vision for short) aims to enable. A release candidate of the specification was well received when it was recently presented at the International Vision Standards Meeting (IVSM) and at the automatica 2018 trade show. If adopted, OPC Vision promises to make image processing an even more important facet of Industry 4.0 within networked production.

OPC Unified Architecture (OPC UA, where OPC stands for Open Platform Communications) is an interoperability standard and communication protocol for the secure and reliable exchange of information, independent of manufacturer, platform, and hierarchy level, from the smallest sensor up to the enterprise IT level and the cloud. The standard targets factory floor and general industrial markets and is designed to be adapted to specific industries and technologies, as OPC Vision does for image processing.

Work on OPC Vision began two years ago at the automatica trade fair for automation and robotics in Munich, Germany, when the VDMA Machine Vision Group and the OPC Foundation signed a memorandum of understanding. Then, at the International Vision Standards Meeting in Frankfurt in May 2018, the working group presented a release candidate, which attendees supported. At automatica 2018, the release candidate, which offers Industry 4.0 and GenICam compatibility, was made available to the public, with a focus on the semantics of the software model.

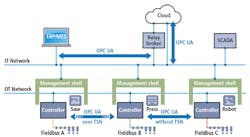

The proposed standard aims to integrate everything from individual image processing components up to entire image processing systems into industrial automation applications, so that machine vision technologies can communicate with the entire factory and beyond (Figure 1). To that end, a generic interface for image processing systems at the user level is being developed as part of the standard, including a semantic description of image data.

Figure 1: The OPC Unified Architecture integrates OT and IT networks.

Uniform communication of decentralized components

During production inspection, for example, many image processing systems communicate with programmable logic controllers (PLCs). Typically, a sensor connected to the PLC signals the PLC when the part to be inspected enters the field of view of the camera. The PLC then triggers the camera to acquire an image of the part. After the vision system analyzes the image, it communicates pass/fail results to the PLC, which then initiates some action such as a reject mechanism, if necessary. PLCs also communicate process information and display operational status and readiness. Under OPC Vision, communication methods that were previously based on different protocols would be standardized.
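The trigger-and-result handshake described above can be sketched as a small simulation. This is a minimal sketch, assuming in-process Python objects in place of real hardware; the classes `Plc` and `VisionSystem`, the `defects` field, and the `max_defects` threshold are hypothetical illustrations, not part of OPC Vision or any vendor API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Result(Enum):
    PASS = "pass"
    FAIL = "fail"


@dataclass
class VisionSystem:
    """Hypothetical stand-in for a camera plus analysis software."""
    max_defects: int = 0  # assumed acceptance threshold

    def acquire(self, part: dict) -> dict:
        # Placeholder for image acquisition: the "image" is the part record itself.
        return part

    def analyze(self, image: dict) -> Result:
        # Pass the part only if the detected defect count is within the threshold.
        return Result.PASS if image["defects"] <= self.max_defects else Result.FAIL


@dataclass
class Plc:
    """Hypothetical PLC reacting to a part-present sensor signal."""
    vision: VisionSystem
    rejected: list = field(default_factory=list)

    def on_part_present(self, part: dict) -> Result:
        image = self.vision.acquire(part)    # 1. PLC triggers the camera
        result = self.vision.analyze(image)  # 2. vision system evaluates the image
        if result is Result.FAIL:            # 3. PLC actuates the reject mechanism
            self.rejected.append(part["id"])
        return result


plc = Plc(VisionSystem(max_defects=0))
parts = [{"id": 1, "defects": 0}, {"id": 2, "defects": 3}, {"id": 3, "defects": 0}]
results = [plc.on_part_present(p) for p in parts]
print([r.value for r in results], plc.rejected)
```

In a real line, these steps often travel over different interfaces (digital I/O, fieldbus, vendor protocols); the point of OPC Vision is that they would share one standardized protocol.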

Furthermore, hierarchical structures and interfaces are giving way to horizontally and vertically integrated Gigabit Ethernet IT networks that function as decentralized cyber-physical systems, which in the future will be able to replace central controllers. OPC UA is designed to supplement existing interfaces such as fieldbuses, and perhaps to replace them completely at a later point. It is based on object-oriented information modeling, a service-oriented architecture, inherent data security and rights management, and a platform-independent communication stack that extends to the user level.

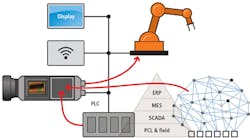

OPC UA describes the data, functions, and services of embedded devices and machines, and it defines data transport as a client-server architecture for machine-to-machine communication (Figure 2). For data modeling, OPC Vision contains industry-specific semantics in the form of universally valid definitions, similar to the GenICam Standard Features Naming Convention (SFNC). Because image data formats are precisely defined, this could simplify the use of multimodal sensor data and improve communication.
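To illustrate why shared semantics matter, the sketch below maps two vendors' differently named camera parameters onto one common vocabulary. It is a minimal sketch: the vendor parameter names and mapping tables are invented for illustration, only `ExposureTime` and `Gain` are modeled on GenICam SFNC-style names, and nothing here reflects the actual OPC Vision information model.

```python
from typing import Dict

# Hypothetical vendor-specific parameter names mapped to common, SFNC-style names.
# Assumes both vendors already use the same units (exposure in µs, gain in dB);
# a real semantic model also has to pin down units.
VENDOR_MAPPINGS: Dict[str, Dict[str, str]] = {
    "vendor_a": {"ExpTime": "ExposureTime", "AmpGain": "Gain"},
    "vendor_b": {"Shutter": "ExposureTime", "SensorGain": "Gain"},
}


def normalize(vendor: str, params: Dict[str, float]) -> Dict[str, float]:
    """Translate one vendor's parameter dict into the shared vocabulary.

    Names without a mapping are kept unchanged so no data is silently dropped.
    """
    mapping = VENDOR_MAPPINGS[vendor]
    return {mapping.get(name, name): value for name, value in params.items()}


a = normalize("vendor_a", {"ExpTime": 5000.0, "AmpGain": 2.0})
b = normalize("vendor_b", {"Shutter": 5000.0, "SensorGain": 2.0})
print(a == b)  # True: a client can treat both cameras identically
```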

Figure 2: Network-capable image processing devices communicate directly with factory floor and IT system components.

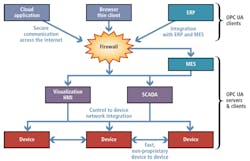

Data described semantically via OPC UA can be understood and used by any OPC UA-capable device. A single server, such as an image processing system, would be able to manage production and process data, alarms, events, historical data, and program calls. A client such as a PLC, a supervisory control and data acquisition (SCADA) system, a manufacturing execution system (MES), or an enterprise resource planning (ERP) system would be able to directly access a server’s content or call its service functions and receive a return value, such as the number of saleable products or ordering requirements.
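The read-and-call pattern described above can be sketched with a toy address space. This is a minimal sketch, assuming a plain Python dictionary in place of a real OPC UA server SDK; the class `ToyServer`, the node paths, and the method `GetSaleableCount` are hypothetical and are not taken from the OPC Vision specification.

```python
from typing import Any, Callable, Dict


class ToyServer:
    """Hypothetical in-process stand-in for an OPC UA server address space."""

    def __init__(self) -> None:
        self._variables: Dict[str, Any] = {}
        self._methods: Dict[str, Callable[..., Any]] = {}

    def add_variable(self, path: str, value: Any) -> None:
        self._variables[path] = value

    def add_method(self, path: str, fn: Callable[..., Any]) -> None:
        self._methods[path] = fn

    def read(self, path: str) -> Any:
        # Client-side "read": return the current value of a variable node.
        return self._variables[path]

    def call(self, path: str, *args: Any) -> Any:
        # Client-side "method call": run the server function, return its result.
        return self._methods[path](*args)


# The image processing system acts as the server.
vision = ToyServer()
vision.add_variable("Vision/Production/Inspected", 100)
vision.add_variable("Vision/Production/Failed", 3)
vision.add_variable("Vision/Status", "Ready")
vision.add_method(
    "Vision/Methods/GetSaleableCount",
    lambda: vision.read("Vision/Production/Inspected")
    - vision.read("Vision/Production/Failed"),
)

# An MES or ERP client reads a value and calls a method directly.
status = vision.read("Vision/Status")
saleable = vision.call("Vision/Methods/GetSaleableCount")
print(status, saleable)
```

Real OPC UA identifies nodes by NodeIds and browse paths rather than slash-separated strings; the strings here only keep the sketch short.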

For example, an ERP system would be able to query frame grabber properties, while clients could retrieve the status of the entire image processing system and its ongoing streams via events. Under the standard, every function of an image processing system would be abstracted in the information model, and manufacturers could add their own applications as they provide additional services and capabilities.

Real-time capable OPC UA client-server network

OPC Vision was designed to provide a framework in which image processing systems in a production environment assume various control states with respect to other network components, in particular PLCs, and exchange consistent data with these components (transfer of tasks and confirmation of results). Control state changes are either requested by the client or generated internally and reported back to the client. If errors occur during image processing, the image processing system issues an error or warning alert; the specification defines general rules for these alerts, covering the structure of the alert and its interaction with the control state.
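The interplay between control states, client commands, and error alerts can be sketched as a small state machine. This is a minimal sketch under stated assumptions: the state names, the allowed transitions, and the `Alert` structure below are hypothetical and simplified; the release candidate defines its own states and alert rules, which are not reproduced here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional, Tuple


class State(Enum):  # hypothetical control states
    IDLE = "Idle"
    READY = "Ready"
    EXECUTING = "Executing"
    ERROR = "Error"


@dataclass
class Alert:
    severity: str  # assumed two levels: "error" or "warning"
    message: str


# Hypothetical transition table: (current state, client command) -> next state.
TRANSITIONS = {
    (State.IDLE, "prepare"): State.READY,
    (State.READY, "start"): State.EXECUTING,
    (State.EXECUTING, "done"): State.READY,
    (State.ERROR, "reset"): State.IDLE,
}


class VisionStateMachine:
    def __init__(self, notify: Callable[[State, Optional[Alert]], None]) -> None:
        self.state = State.IDLE
        self.notify = notify  # callback that reports changes back to the client

    def command(self, cmd: str) -> None:
        """State change requested by the client (e.g., a PLC)."""
        nxt = TRANSITIONS.get((self.state, cmd))
        if nxt is None:
            raise ValueError(f"{cmd!r} not allowed in state {self.state.value}")
        self.state = nxt
        self.notify(self.state, None)

    def raise_alert(self, alert: Alert) -> None:
        """Internally generated change: an error moves the system to ERROR."""
        if alert.severity == "error":
            self.state = State.ERROR
        # Warnings are reported without changing the control state.
        self.notify(self.state, alert)


log: List[Tuple[str, Optional[str]]] = []
sm = VisionStateMachine(lambda s, a: log.append((s.value, a.message if a else None)))
sm.command("prepare")
sm.command("start")
sm.raise_alert(Alert("warning", "low contrast"))
sm.raise_alert(Alert("error", "camera disconnected"))
sm.command("reset")
print(log)
```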

Two OPC UA extensions remove the division between conventional IT networks and real-time capable machine networks. In the publisher-subscriber model, a publisher (server) sends information to the network, to which several clients can subscribe. Combined with time-sensitive networking (TSN) for industrial Ethernet networks, this information becomes real-time capable (Figure 3).
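The difference from request-response communication can be sketched in a few lines: the publisher sends each message once, and every subscriber to that data set receives it without asking. This is a minimal in-process sketch; the `Publisher` class and the data set name are hypothetical, and it models neither OPC UA PubSub's actual message encoding nor TSN scheduling.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class Publisher:
    """Hypothetical publisher that sends data sets to all registered subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, data_set: str, handler: Handler) -> None:
        self._subscribers[data_set].append(handler)

    def publish(self, data_set: str, payload: Dict[str, Any]) -> int:
        # One publish reaches every subscriber; no per-client request is needed.
        handlers = self._subscribers.get(data_set, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)  # number of deliveries


camera = Publisher()
plc_inbox: List[Dict[str, Any]] = []
hmi_inbox: List[Dict[str, Any]] = []
camera.subscribe("InspectionResult", plc_inbox.append)
camera.subscribe("InspectionResult", hmi_inbox.append)

delivered = camera.publish("InspectionResult", {"part": 42, "result": "fail"})
print(delivered, plc_inbox, hmi_inbox)
```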

Figure 3: Data described semantically via OPC UA can be understood and used by any OPC UA-capable device.

Time-critical data can thus be transferred over a common network, for example to stream camera images while operating cameras, frame grabbers, and a PLC concurrently via OPC UA. Today’s communication networks are not yet designed to transmit large amounts of image data, but the foundations for this have already been laid at the protocol level.
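A rough calculation shows why image streams strain today’s networks while result data does not. The camera parameters (5-megapixel sensor, 8 bits per pixel, 20 frames per second) and the 16-byte result message are assumptions chosen for illustration, and protocol overhead is ignored.

```python
GIGABIT_ETHERNET_BPS = 1_000_000_000  # nominal link rate, ignoring protocol overhead


def stream_bps(pixels: int, bits_per_pixel: int, fps: float) -> float:
    """Raw bit rate of an uncompressed image stream."""
    return pixels * bits_per_pixel * fps


def result_bps(bytes_per_result: int, results_per_s: float) -> float:
    """Bit rate when only inspection results are sent instead of images."""
    return bytes_per_result * 8 * results_per_s


# Assumed example camera: 5 MP, 8-bit monochrome, 20 frames per second.
image_rate = stream_bps(5_000_000, 8, 20)
# Assumed 16-byte result message (e.g., part ID, pass/fail, score) per frame.
result_rate = result_bps(16, 20)

print(f"image stream: {image_rate / 1e6:.0f} Mbit/s "
      f"({image_rate / GIGABIT_ETHERNET_BPS:.0%} of Gigabit Ethernet)")
print(f"results only: {result_rate / 1e3:.2f} kbit/s")
```

Under these assumptions a single camera occupies about 80% of a Gigabit Ethernet link, while its results need only a few kilobits per second, which is why pre-processing images on the device can relieve the network.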

What advantages will OPC Vision offer? For one, network components such as image processing devices would share a single communication protocol, enabling faster integration of devices and software into the factory floor network, PLC software, human-machine interfaces (HMIs), and IT systems. Manufacturers can extend the protocol with their own functions. In addition, uniform semantics would encourage the development of generic HMIs for image processing devices.

Another benefit would be simplified integration of embedded cameras and sensors into production lines and into novel applications such as autonomous warehouse robots, collaborative robots (cobots), or HMIs. For embedded devices, image processing applications can be programmed quickly in the VisualApplets graphical FPGA development environment, equipping the devices with adaptable and versatile intelligence, including machine learning and deep learning.

Potential advantages of such on-board image pre-processing include a reduced need to transmit large volumes of data and direct monitoring of actuators.

Martin Cassel, Editor, Silicon Software (Mannheim, Germany; https://silicon.software)