February 2019 snapshots: Chain drive inspection, machine learning cars, automated grocery checkout, drone wind turbine inspections, wheelchairs driven by face recognition, new thermal imaging capability added to line of drones

Take your autonomous driving test with new DeepRacer from AWS

Thanks to Amazon Web Services (Seattle, WA, USA; https://aws.amazon.com/) you can design your own machine learning environment and test it with a grey robot car that looks like something out of a Pixar (Emeryville, CA, USA; https://www.pixar.com/) movie.

The AWS DeepRacer is a 1/18th-scale, radio-controlled, 4WD car with an onboard Intel (Santa Clara, CA, USA; https://www.intel.com) Atom processor running Ubuntu 16.04 LTS, the Robot Operating System (ROS), and the Intel OpenVINO computer vision toolkit; a 4 MPixel 1080p camera; 802.11ac WiFi; multiple USB ports; and two hours’ worth of battery power.

AWS DeepRacer is also a machine learning tool based on reinforcement learning. Whereas supervised learning trains a model on labeled examples, reinforcement learning lets an agent learn by trial and error in an interactive environment. The agent receives feedback (rewards or penalties) for its actions and gradually learns which actions achieve a predetermined goal.
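To make the trial-and-error loop concrete, here is a minimal sketch of tabular Q-learning, one of the simplest reinforcement learning algorithms. It is not the algorithm AWS DeepRacer uses; the one-dimensional "corridor" environment, its reward values, and all names below are hypothetical and chosen only to show an agent learning from crashes and rewards.

```python
import random

# Hypothetical toy environment: a 1D corridor of N_CELLS cells. The agent
# starts in cell 0 and must reach the last cell. Stepping off the left edge
# is a "crash": negative reward, and the episode ends.
N_CELLS = 6
ACTIONS = (-1, 1)  # move left, move right


def step(state, action):
    """Apply one action and return (next_state, reward, done)."""
    nxt = state + action
    if nxt < 0:
        return state, -1.0, True    # crashed into the wall
    if nxt == N_CELLS - 1:
        return nxt, 1.0, True       # reached the goal
    return nxt, -0.01, False        # small cost per move


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: refine action-value estimates by trial and error."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_CELLS) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually take the best-known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            # Move the estimate toward the reward plus discounted future value.
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
    return q


def greedy_policy(q):
    """Best-known action in each non-terminal cell."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_CELLS - 1)]


print(greedy_policy(train()))
```

After training, the learned policy should move right in every cell: the agent has discovered, purely from feedback, that heading left leads to a crash.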

In this case, the AWS DeepRacer is the agent, and the feedback involves crashing into things. Before sending their cars careening into a real-life test environment, however, developers can train them on virtual courses.

AWS DeepRacer comes with a fully configured cloud environment that uses the reinforcement learning feature in Amazon SageMaker and a 3D simulation environment run in AWS RoboMaker. Developers can run an autonomous driving model on simulated race tracks for virtual evaluation, or download the trained model to the AWS DeepRacer car and test it on a physical track.
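In AWS DeepRacer, the feedback the agent learns from is defined by a reward function that the developer writes in Python. The sketch below is modeled on the "follow the center line" style of example found in AWS documentation; the `params` keys used here (`track_width`, `distance_from_center`, `all_wheels_on_track`) are the ones we believe the simulator supplies, but check the current DeepRacer documentation before relying on them. The band widths and reward values are illustrative choices, not AWS defaults.

```python
def reward_function(params):
    """Reward staying near the center line; give almost nothing off track."""
    # Values supplied by the DeepRacer simulator on every step (assumed keys).
    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]
    all_wheels_on_track = params["all_wheels_on_track"]

    # Leaving the track is the "crash" case: near-zero reward.
    if not all_wheels_on_track:
        return 1e-3

    # Three bands around the center line, as fractions of the track width.
    if distance_from_center <= 0.1 * track_width:
        reward = 1.0
    elif distance_from_center <= 0.25 * track_width:
        reward = 0.5
    elif distance_from_center <= 0.5 * track_width:
        reward = 0.1
    else:
        reward = 1e-3
    return float(reward)


# Example: a car 5 cm from the center of a 1 m wide track.
print(reward_function({"track_width": 1.0, "distance_from_center": 0.05,
                       "all_wheels_on_track": True}))
```

Returning a small positive value instead of zero off-track is a common choice, since it still distinguishes bad outcomes without producing degenerate training signals.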

Attendees at re:Invent (https://reinvent.awsevents.com/) 2018 were invited by Amazon Web Services to attend a reinforcement learning workshop and learn how to use the AWS DeepRacer car. Developers who want to take advantage of this autonomous driving development kit at home can order the DeepRacer online.

All users of this new machine learning tool can enter the AWS DeepRacer League, compete in live racing events at AWS Global Summits, and participate in virtual events and tournaments. Top competitors will be invited to the AWS DeepRacer 2019 Championship Cup at re:Invent 2019.

Artificial intelligence and 3D camera enable facial expression-controlled wheelchair

Developed by Brazilian company HOOBOX Robotics (São Paulo, Brazil; www.hoo-box.com), the Wheelie motorized wheelchair kit uses artificial intelligence and a 3D camera to enable users to move the wheelchair using simple facial expressions.

According to the National Spinal Cord Injury Statistical Center, approximately 288,000 people in the United States live with spinal cord injuries, and there are about 17,700 new cases each year. A 2018 study (http://bit.ly/VSD-SPSTUDY) also found that physical mobility has the largest impact on quality of life for people with spinal cord injuries. According to Intel (Santa Clara, CA, USA; www.intel.com), which has partnered with HOOBOX Robotics, people with such injuries often depend on caregivers for mobility or use motorized wheelchairs with body-mounted sensors that require special training to operate.

Company CEO Paulo Pinheiro first conceived the Wheelie a few years ago when he noticed a girl at an airport who could move neither her hands nor her legs and depended entirely on someone else to move her wheelchair. According to a press release, at one point the girl had a big smile on her face, which inspired Pinheiro to develop a wheelchair that users could control with facial expressions.

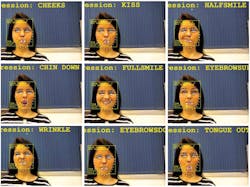

HOOBOX Robotics’ Wheelie 7 kit lets users pick from 10 facial expressions to make their motorized wheelchair move forward, turn, and stop.

At the Consumer Electronics Show 2019, HOOBOX Robotics and Intel showcased the wheelchair kit, which detects 11 different facial expressions, including smiles, frowns, and kisses, and translates them into motorized wheelchair controls. The expressions are captured by an Intel RealSense SR300 3D depth camera.

The SR300 camera features a 640 x 480 monochrome infrared camera, a 1920 x 1080 color camera, and a Class 1 laser-compliant, coded-light infrared projector system. To generate a depth frame, the infrared projector illuminates the scene with a set of predefined, coded infrared vertical bar patterns of increasing spatial frequency. The scene warps these patterns, which are reflected back and captured by the infrared camera. The imaging ASIC then processes the infrared camera pixel values to generate a depth frame, and successive depth frames form a video stream that is transmitted to a client system, according to Intel.
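Intel does not publish the SR300 ASIC's exact algorithm, but the general coded-light idea can be sketched. The example below assumes binary Gray-code stripe patterns (a common structured-light scheme, not necessarily the SR300's), a rectified camera/projector pair, and a hypothetical focal length and baseline. Each camera pixel sees one bright/dark value per pattern, coarsest pattern first; those bits identify which projector column lit the pixel, and the offset between camera and projector columns gives depth by triangulation.

```python
def gray_to_binary(g):
    """Convert a Gray-coded integer to an ordinary binary integer."""
    b = g
    g >>= 1
    while g:
        b ^= g
        g >>= 1
    return b


def decode_projector_column(intensities, threshold):
    """One pixel's intensities (one per pattern, coarsest first) -> projector column."""
    code = 0
    for value in intensities:
        code = (code << 1) | (1 if value > threshold else 0)
    return gray_to_binary(code)


def depth_mm(camera_col, projector_col, focal_px, baseline_mm):
    """Triangulate depth for a rectified camera/projector pair (assumed geometry)."""
    disparity = camera_col - projector_col
    if disparity <= 0:
        raise ValueError("no valid triangulation for this pixel")
    return focal_px * baseline_mm / disparity


# Three patterns seen as bright, bright, dark -> Gray code 0b110 -> column 4.
col = decode_projector_column([200, 200, 10], threshold=100)
print(col, depth_mm(camera_col=300, projector_col=200, focal_px=600, baseline_mm=50))
```

Gray codes are used because adjacent columns differ in only one bit, so a misread at a stripe boundary shifts the decoded column by at most one.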

Facial expressions captured by the camera are interpreted by AI algorithms running on an Intel NUC Mini PC mounted on the chair. The interpretation runs in real time using the Intel Distribution of OpenVINO toolkit on Intel processors, and it is reportedly fast enough to change the chair’s motion responsively, which matters for both user comfort and safety. The kit also includes “The Gimme” gripper, a navigation sensor, Wheelie software, electrical accessories, and a camera gooseneck.
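HOOBOX has not published how Wheelie turns classifier output into motion, so the following is a purely hypothetical sketch of one reasonable design. The expression labels, command names, confidence threshold, and frame count are all assumptions. A drive command changes only after the same confident expression is seen on several consecutive frames, while a "stop" expression takes effect immediately.

```python
from collections import deque

# Hypothetical user-chosen mapping from expressions to drive commands.
EXPRESSION_TO_COMMAND = {
    "smile": "forward",
    "kiss": "turn_left",
    "raise_eyebrows": "turn_right",
    "frown": "stop",
}


class ExpressionController:
    """Debounce per-frame expression classifications into drive commands."""

    def __init__(self, mapping, min_confidence=0.8, frames_required=3):
        self.mapping = mapping
        self.min_confidence = min_confidence
        self.recent = deque(maxlen=frames_required)
        self.command = "stop"  # start stationary

    def update(self, expression, confidence):
        """Feed one classifier result per frame; return the current command."""
        if confidence < self.min_confidence or expression not in self.mapping:
            self.recent.clear()          # unreliable frame: keep current command
            return self.command
        target = self.mapping[expression]
        if target == "stop":
            self.recent.clear()
            self.command = "stop"        # stopping never waits for confirmation
            return self.command
        self.recent.append(expression)
        # Change motion only after frames_required identical confident frames.
        if len(self.recent) == self.recent.maxlen and len(set(self.recent)) == 1:
            self.command = target
        return self.command


ctrl = ExpressionController(EXPRESSION_TO_COMMAND)
print([ctrl.update("smile", 0.9) for _ in range(3)], ctrl.update("frown", 0.95))
```

The asymmetry (slow to start moving, instant to stop) trades a few frames of latency for protection against a single misclassified frame setting the chair in motion.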

“The Wheelie 7 is the first product to use facial expressions to control a wheelchair. This requires incredible precision and accuracy, and it would not be possible without Intel technology,” said Pinheiro. “We are helping people regain their autonomy.”

Wheelie 7 reportedly takes only seven minutes to install in any motorized wheelchair available on the market.

Machine vision system detects stretched chain links on moving conveyor drives

The Cognex (Natick, MA, USA; http://www.cognex.com/) In-Sight 2000 vision sensor is the heart of a new system that could automate inspection of conveyor chain drives, among the most important industrial automation elements in automobile manufacturing.

The chain links of a conveyor system inevitably stretch, warp, or break after extended use and must be replaced during maintenance cycles. Links that break before a scheduled maintenance cycle require immediate replacement, effectively shutting down the affected conveyor until the repair is complete.

Identifying problematic chain links has historically been a manual process of visual inspection and marking for replacement. By automating the process with In-Sight 2000 vision sensors, General Motors of Canada (Oshawa, ON, Canada; https://www.gm.ca/en/home.html) no longer needs to slow or stop production to measure chain link stretch.

A red LED illuminator backlights the chain, and a proximity sensor triggers the camera as each link enters the camera’s field of view. Image processing software identifies the edges of the link and measures the distance between them. If the measured link length deviates from the nominal length by 4% or more, the vision system flags the link as problematic. An optional Cognex I/O module also counts how many chain links fall into each category, as well as the number of rotations of the 1,680-foot chain.
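The pass/fail rule above is simple arithmetic, sketched below. The 4% threshold comes from the article; the pixel-to-millimeter calibration, the nominal link length, and the function names are hypothetical. In the actual system these steps are configured in Cognex's In-Sight Explorer EasyBuilder interface rather than written as Python.

```python
from collections import Counter


def link_length_mm(edge_a_px, edge_b_px, mm_per_px):
    """Convert the measured edge-to-edge distance in pixels to millimeters."""
    return abs(edge_b_px - edge_a_px) * mm_per_px


def deviation_pct(measured_mm, nominal_mm):
    """Percent deviation of a link from its nominal length."""
    return abs(measured_mm - nominal_mm) * 100.0 / nominal_mm


def classify_link(measured_mm, nominal_mm, threshold_pct=4.0):
    """Flag a link whose length deviates by threshold_pct or more."""
    if deviation_pct(measured_mm, nominal_mm) >= threshold_pct:
        return "problematic"
    return "ok"


def tally(lengths_mm, nominal_mm, threshold_pct=4.0):
    """Count links in each category, like the optional I/O module's counters."""
    return dict(Counter(classify_link(m, nominal_mm, threshold_pct) for m in lengths_mm))


# Hypothetical calibration: 0.25 mm per pixel; nominal link length 100 mm.
lengths = [link_length_mm(a, b, 0.25) for a, b in [(10, 410), (12, 416), (8, 428)]]
print(lengths, tally(lengths, nominal_mm=100.0))
```

Here the third link measures 105 mm, a 5% deviation, so it is the only one flagged.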

The In-Sight 2000 vision sensor from Cognex features a 1/3” CMOS image sensor, available in color or monochrome versions, that captures 640 x 480 images at up to 75 fps. In-Sight vision sensors also include the In-Sight Explorer EasyBuilder software interface for application setup, and they can be configured for in-line or right-angle installation.

In the future, a PLC will direct a downstream paint sprayer to mark problematic chain links for replacement during the next maintenance cycle, fully replacing manual inspection.

Latest DJI drone incorporates thermal imaging from FLIR

DJI’s (Shenzhen, China; www.dji.com) Mavic 2 Enterprise Dual drone features FLIR (Wilsonville, OR, USA; www.flir.com) cameras that bring thermal imaging, alongside visible imagery, to users such as first responders, industrial operators, and law enforcement personnel.

The Mavic 2 Enterprise Dual, which was on display at the Consumer Electronics Show 2019 in Las Vegas, pairs a FLIR thermal camera with a visible camera and adds FLIR’s patented MSX (multispectral dynamic imaging) technology, which the company says “embosses high-fidelity, visible-light details onto the thermal imagery to enhance image quality and perspective.” The drone is a compact, foldable quadcopter that measures 214 x 91 x 84 mm when folded and weighs just 899 g. Add-on options include a spotlight, speaker, and beacon.

For thermal imaging, the drone uses FLIR’s Lepton camera core, which features a 160 x 120 uncooled VOx microbolometer array sensitive in the longwave infrared range from 8 to 14 µm. For visible imaging, the Mavic 2 Enterprise Dual features a 1/2.3”, 12 MPixel CMOS image sensor that captures images of up to 4056 x 3040 pixels and supports dynamic zoom (2x optical and 2x digital).
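FLIR's MSX algorithm is proprietary, so the following is only a generic illustration of the idea the quote describes: upsample the low-resolution thermal image and add high-frequency detail (edges) extracted from the aligned visible image. It assumes the visible image has already been registered to the thermal view and resized to exactly `factor` times the thermal resolution; the 3x3 high-pass filter and the `strength` parameter are arbitrary choices for the sketch.

```python
def upscale_nearest(img, factor):
    """Nearest-neighbor upsampling of a 2D list by an integer factor."""
    return [[img[r // factor][c // factor] for c in range(len(img[0]) * factor)]
            for r in range(len(img) * factor)]


def high_pass(img):
    """Detail layer: each pixel minus the mean of its 3x3 neighborhood (edges clamped)."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            neigh = [img[min(max(r + dr, 0), h - 1)][min(max(c + dc, 0), w - 1)]
                     for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
            row.append(img[r][c] - sum(neigh) / 9.0)
        out.append(row)
    return out


def emboss_edges(thermal, visible, factor, strength=0.5):
    """Add scaled visible-light detail onto the upsampled thermal image."""
    up = upscale_nearest(thermal, factor)
    detail = high_pass(visible)
    return [[up[r][c] + strength * detail[r][c] for c in range(len(up[0]))]
            for r in range(len(up))]


# A featureless visible image adds no detail, so the thermal values pass through.
print(emboss_edges([[10, 20]], [[5, 5, 5, 5], [5, 5, 5, 5]], factor=2))
```

Only the visible image's edges are transferred, not its brightness, so the temperature information in flat regions is left unchanged.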

“With the Mavic 2 Enterprise Dual, we can offer more commercial drone pilots the additional value of side-by-side thermal and visible imagery in a highly portable drone, enabling more commercial drone operations from utility inspections to emergency response,” said Roger Luo, President at DJI.

DJI is a Thermal by FLIR partner. FLIR created the program to support OEMs and users interested in deploying FLIR thermal sensors; partners can carry the Thermal by FLIR brand and receive additional product development and marketing support. Other Thermal by FLIR partners include Cat Phones, Casio, and Panasonic.

In addition to the examples above, FLIR notes that the drone can be used in applications such as firefighting, search and rescue, and the inspection of power lines, bridges, and cell towers.

Vision-guided drones inspect wind turbines

Industrial wind turbine towers can reach more than 400 ft high, making inspection of turbine blades hazardous for human workers. Combining machine vision with unmanned aerial vehicles (UAVs) can eliminate these risks entirely by keeping people off the towers.

SkySpecs (Ann Arbor, MI, USA and Amsterdam, Netherlands; https://skyspecs.com/skyspecs-solution/) offers fully automated drone inspections of wind turbines. According to SkySpecs, one of its drones, based on the Freefly Systems (Woodinville, WA, USA; https://freeflysystems.com/) Alta drone, can complete an inspection in 15 minutes or less, locating cracks or erosion on individual blades by scanning each blade from a few meters away from its surface.

Once activated, the drone takes off, locates the wind turbine, flies to the top of the tower, and identifies the blade positions. It then plans the most efficient inspection route using a combination of sensors, including LiDAR. According to SkySpecs, these flight paths are not pre-programmed routines based on turbine make and model.

The company does not provide camera specs for its drones but says that its inspections would detect a 2 mm-wide crack. The drone compensates for low light by keeping the camera close to the blade and adjusting the settings for each picture. Images overlap to ensure every surface is fully inspected, and each image is correlated with laser and GPS data to ensure accurate damage size and relative position data. Each image is also tagged with its precise location on the blade so that images can be compared across future inspections.
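Because SkySpecs does not publish camera specs, the following sketch uses entirely hypothetical numbers to show the arithmetic behind two claims in the paragraph above: how fine a detail each pixel resolves at a given standoff distance, and how many overlapping images are needed to cover a blade. It uses a simple pinhole-camera model; the sensor width, focal length, image width, standoff, blade length, and 50% overlap are all assumptions.

```python
import math


def footprint_m(distance_m, sensor_width_mm, focal_length_mm):
    """Width of blade surface covered by one image (pinhole model)."""
    return distance_m * sensor_width_mm / focal_length_mm


def mm_per_pixel(distance_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Surface width imaged by a single pixel, in millimeters."""
    return footprint_m(distance_m, sensor_width_mm, focal_length_mm) * 1000.0 / image_width_px


def images_along_blade(blade_length_m, footprint, overlap):
    """Images needed so consecutive frames overlap by the given fraction."""
    if blade_length_m <= footprint:
        return 1
    step = footprint * (1.0 - overlap)          # advance between shots
    return math.ceil((blade_length_m - footprint) / step) + 1


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm lens, 5472 px wide, 3 m standoff.
fp = footprint_m(3.0, 13.2, 8.8)                 # about 4.5 m per image
res = mm_per_pixel(3.0, 13.2, 8.8, 5472)         # about 0.82 mm per pixel
print(round(fp, 2), round(res, 2), round(2.0 / res, 1),
      images_along_blade(60.0, 4.5, overlap=0.5))
```

Under these assumptions a 2 mm crack spans roughly two to three pixels, and a 60 m blade edge needs 26 images at 50% overlap, per side scanned.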

Users view inspection data and reports on SkySpecs’ Web portal. Analysis of the inspection results is not yet automated and requires experts, so the company is developing automatic analysis algorithms. SkySpecs also plans to offer inspection routines for structures other than wind turbines.

Trigo Vision makes deal to deploy automated checkout retail system

Shoppers at Israel’s largest supermarket chain should be able to make long checkout lines a thing of the past if plans for a new camera system that leverages artificial intelligence and machine vision meet with success.

Trigo Vision (Tel Aviv, Israel; https://www.trigovision.com/) has developed an automated retail platform that is scheduled to be installed in the 272 stores of Israel’s largest supermarket chain, Shufersal (Central District, Israel; https://www.shufersal.co.il/). Trigo’s platform works on the same principles as the technology behind Amazon Go’s (Seattle, WA, USA; http://bit.ly/VSD-AMGO) grocery stores, whose “Just Walk Out” technology lets users enter the store with the Amazon Go app, shop for products, and walk out without waiting in line or checking out.

Less has been disclosed about the Trigo Vision system. By comparison, Amazon patent filings previously showed that the cameras used in Amazon Go may include RGB cameras, depth-sensing cameras, and infrared sensors, and that the Amazon system uses image processing and facial recognition technologies.

The Trigo Vision platform is based on a network of ceiling-mounted cameras that identify items as shoppers pick them. Artificial intelligence and machine vision algorithms help track those items accurately, and an automated checkout system lets consumers pay for their items before they leave the store. The platform is GDPR-compliant and collects data anonymously.
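Trigo Vision has not described its software, so the following is only a hypothetical sketch of the bookkeeping such a platform needs once the vision system has produced events: each "pick" or "return" detected at a shelf updates a virtual cart keyed by an anonymous session token, and the cart is totaled at checkout. The event structure, SKU names, and prices (in integer cents, to avoid rounding errors) are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ShelfEvent:
    """One detection from the camera network (hypothetical schema)."""
    session_id: str   # anonymous token, not a personal identity
    sku: str
    action: str       # "pick" or "return"


def build_carts(events):
    """Replay shelf events into one virtual cart per anonymous session."""
    carts = {}
    for e in events:
        cart = carts.setdefault(e.session_id, Counter())
        if e.action == "pick":
            cart[e.sku] += 1
        elif e.action == "return":
            if cart[e.sku] > 0:          # ignore returns of items never picked
                cart[e.sku] -= 1
        else:
            raise ValueError(f"unknown action: {e.action}")
    return {sid: +cart for sid, cart in carts.items()}  # unary + drops zero counts


def total_cents(cart, prices_cents):
    """Checkout total for one cart."""
    return sum(prices_cents[sku] * qty for sku, qty in cart.items())


events = [ShelfEvent("a", "milk", "pick"), ShelfEvent("a", "bread", "pick"),
          ShelfEvent("a", "milk", "return"), ShelfEvent("a", "milk", "pick"),
          ShelfEvent("a", "milk", "pick")]
carts = build_carts(events)
print(carts["a"], total_cents(carts["a"], {"milk": 590, "bread": 1200}))
```

Keying carts by a session token rather than a shopper's identity mirrors the anonymous data collection the company describes, though how Trigo actually associates items with shoppers is not public.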

Trigo Vision’s founders have extensive experience in artificial intelligence and algorithm development and have worked on numerous projects for Israel’s military intelligence. The patent-pending computer vision technology they have developed requires “significantly fewer cameras compared to other solutions on the market today,” and offers a “unique data collection process” for the precise identification of products.

Additionally, Trigo Vision says that its retail platform scales easily to stores of varying sizes without altering store layouts. The company says the platform can also provide real-time inventory updates and serve as an anti-shoplifting measure.