Choosing a camera for a vision system

Imaging performance is determined by matching camera characteristics to system requirements.

By Jerry Fife

There are, of course, many issues to consider when selecting a camera for a vision system, but a few questions can help identify the key imaging characteristics required of the camera:

- What do I need to see and measure within the image?

- What do I want to improve over the existing imaging or measuring system?

- How does the imaging system need to interact with the rest of the system?

- What type of light source or wavelengths are used for illumination and imaging?

After answering these questions, you can evaluate camera options in terms of camera types, features, and performance characteristics: area- and linescan cameras; interlaced and progressive-scan cameras; resolution and resolving detail; sensor size; sensitivity and wavelengths; minimum illumination specification; and signal-to-noise ratio.

Area- and linescan cameras

Two types of cameras are commonly used in machine-vision applications: area-scan and linescan. Area-scan cameras use an imaging sensor with width and height (for example, 640 × 480 or 1280 × 960 pixels). Linescan cameras use a sensor that is long and very narrow (for example, 2000 × 1 or 8000 × 1 pixels). To create a two-dimensional image with a linescan camera, the scene or object moves across the narrow axis of the sensor, with output one line at a time. The clocking, or timing, of the linescan camera must be precisely coordinated with the movement of the scene or object to create a useful image.
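As a rough illustration of that coordination, the sketch below estimates the line rate needed so that one line is read out each time the object travels the width of one pixel on the object (square pixels). The function name, the units, and the example numbers are assumptions chosen for illustration, not values for any particular camera.

```python
def required_line_rate_hz(object_speed_mm_s: float,
                          fov_width_mm: float,
                          pixels_per_line: int) -> float:
    """Line rate (lines/s) that yields square pixels on the object.

    Assumes the object moves at constant speed perpendicular to the
    sensor line and that the optics image fov_width_mm onto the full line.
    """
    # One pixel covers fov_width_mm / pixels_per_line on the object;
    # a new line is needed each time the object travels that distance.
    return object_speed_mm_s * pixels_per_line / fov_width_mm


# Hypothetical example: 2000-pixel line, 200-mm-wide scene, moving at 500 mm/s.
line_rate = required_line_rate_hz(500.0, 200.0, 2000)
print(f"{line_rate:.0f} lines/s")  # 5000 lines/s
```

If the speed varies, a fixed line rate stretches or compresses the image along the direction of travel, which is why the line clock is often tied to the motion itself.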

Area-scan cameras are best used when the entire image is collected at once or when it is difficult or undesirable to precisely control the movement of the scene relative to a linescan sensor. Area-scan cameras are preferred when

- The output is a video stream to be displayed directly on a monitor

- The scene is contained within the sensor's resolution

- The moving object will be illuminated with a strobe light to stop motion

- The image will be collected with a single triggered event

- The user desires the simplicity of area-scan configuration.

Linescan cameras are best used when imaging continuously moving scenes or objects, or when very high continuous resolution is required. Linescan cameras are preferred when

- The resolution desired is greater than area-scan sensors provide

- The image can or must be processed one line at a time in a continuous process

- The scene is moving relative to the sensor and can be timed with the camera.

Interlaced and progressive scan

There are two types of area-scan cameras: interlaced and progressive scan. Interlaced cameras output a signal that is compatible with old Vidicon TV standards and can be viewed directly on analog video monitors. With interlaced cameras, an image consists of two fields (odd and even) that are exposed and output sequentially. The odd field contains the odd-numbered lines and the even field contains the even-numbered lines of the output image. This output sequence was originally created to reduce transmission bandwidth and make displayed motion less jerky. It works well for human viewers but not for machine-vision applications, because the two fields are exposed at different times and a moving object appears displaced between alternate lines. Progressive-scan cameras expose the entire image at once and output the entire image at once.
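A minimal sketch of the field structure, using short text rows in place of video lines, can show why sequentially exposed fields cause trouble with motion. The helper names and the toy scene are hypothetical; the odd field is taken from one instant and the even field from a later one, when the object has moved two columns.

```python
def split_fields(frame):
    """Split a frame (list of lines) into odd and even fields.

    Lines are numbered from 1, so the odd field is lines 1, 3, 5, ...
    """
    return frame[0::2], frame[1::2]


def weave(odd_field, even_field):
    """Interleave two fields of equal length back into a full frame."""
    frame = []
    for odd_line, even_line in zip(odd_field, even_field):
        frame.extend([odd_line, even_line])
    return frame


def scene(object_col, rows=4, cols=6):
    """Toy scene: a vertical bar ('#') at the given column."""
    return ["".join("#" if c == object_col else "." for c in range(cols))
            for _ in range(rows)]


odd, _ = split_fields(scene(1))    # odd field exposed first
_, even = split_fields(scene(3))   # even field exposed later, bar has moved
for line in weave(odd, even):
    print(line)
# .#....
# ...#..
# .#....
# ...#..
```

The straight bar comes out as a zigzag in the woven frame. A progressive-scan exposure of the same scene would capture every line at the same instant.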

Interlaced cameras are preferred when

- Output will be viewed directly on a monitor

- Lowest-cost camera and/or frame grabber is desired

- Object is still or moving slowly relative to exposure time

- One output field/trigger input is acceptable.

Progressive-scan cameras are preferred when

- Object is moving quickly and one short exposure is desired

- Full frame exposure and output on trigger input is desired

- Full frame exposure and output with strobe illumination or long exposures is desired.

Resolution

The resolution of the camera determines the detail that can be extracted from the image. To reliably detect or measure a feature in the image, you need at least two pixels on that feature. Stated another way, the pixel size should be no larger than half the size of the feature to be measured. For example, a typical VGA camera has a resolution of 640 × 480 pixels. The smallest feature that can be detected is therefore 1/320 (0.31%) of the field of view (FOV) horizontally and 1/240 (0.42%) of the FOV vertically. Higher-resolution sensors can resolve smaller features within a given FOV and/or increase the FOV.
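The two-pixel rule can be written as a one-line calculation. The sketch below is illustrative only; the function name and the 64 × 48 mm field of view are assumptions, and the default of two pixels per feature comes from the rule stated above.

```python
def smallest_feature(fov: float, pixels: int, pixels_per_feature: int = 2) -> float:
    """Smallest detectable feature, in the same units as fov.

    Assumes the optics are not the limiting factor.
    """
    return fov * pixels_per_feature / pixels


# As a fraction of the field of view for a 640 x 480 sensor (fov = 1.0).
h_fraction = smallest_feature(1.0, 640)
v_fraction = smallest_feature(1.0, 480)
print(f"{h_fraction:.4%} horizontally, {v_fraction:.4%} vertically")

# Hypothetical field of view of 64 mm x 48 mm on the same sensor.
print(f"{smallest_feature(64.0, 640):.2f} mm")  # 0.20 mm
```

The fractions come out at roughly 0.31% and 0.42%, matching the VGA figures in the text.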

When using sensors with smaller pixels, take care that the optics in the system have the resolving power to match the sensor. The advantages of a higher-resolution sensor or a sensor with smaller pixels can be lost in the blur of low-resolution optics. With high-magnification optics, the wavelength of the light becomes important in determining minimum effective pixel size.

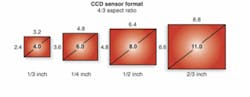

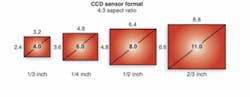

Sensor size

Sensor size and the optics determine the FOV. For machine vision the most popular sensor sizes are 1/2 and 1/3 type (also called 1/2 or 1/3 in.). The 1/2 type refers to the active image area of an old 1/2-in. imaging tube. When selecting a lens, make sure that it is designed for a sensor size equal to or greater than that of the sensor in the camera (see figure).

Pixel (or cell) size, or pitch, is typically reported in microns. The pixel size is always measured from pixel center to pixel center. The active imaging size of a sensor is therefore the pixel size times the number of active or effective pixels.
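The relationship between pixel pitch and active imaging size can be sketched as follows. The 7.4-micron pitch is a hypothetical value chosen for illustration, and the function assumes square pixels with the same pitch in both directions.

```python
import math


def active_area_mm(pixel_pitch_um: float, h_pixels: int, v_pixels: int):
    """Return (width, height, diagonal) of the active area in millimeters.

    Assumes square pixels, so one pitch value applies to both axes.
    """
    width = pixel_pitch_um * h_pixels / 1000.0   # microns -> mm
    height = pixel_pitch_um * v_pixels / 1000.0
    return width, height, math.hypot(width, height)


# Hypothetical 640 x 480 sensor with a 7.4-micron pixel pitch.
w, h, d = active_area_mm(7.4, 640, 480)
print(f"{w:.2f} mm x {h:.2f} mm, diagonal {d:.2f} mm")
# 4.74 mm x 3.55 mm, diagonal 5.92 mm
```

The computed diagonal is the number to compare against the image circle a lens is designed to cover.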

Sensitivity and wavelengths

The sensitivity specification of a camera is its response to white visible light. For monochrome cameras, it is usually reported in terms similar to "400 lux, f/5.6, 0 dB." This means that for a fixed visible light intensity of 400 lux and a camera gain set to 0 dB, a lens aperture of f/5.6 is needed to reach a specific target level on the output video signal. The higher the aperture number (smaller aperture), the more sensitive the camera, since it takes less light to achieve the target output level. Keep in mind that aperture number and aperture area are not linearly related.
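To make the nonlinear relationship concrete: the light passed by a lens scales with the inverse square of the aperture number. The sketch below compares two aperture settings under that standard relation. The function names are illustrative, and the comparison assumes the same illumination, gain, and target output level for both ratings.

```python
import math


def relative_light(f_number: float, reference_f_number: float) -> float:
    """Light passed at f_number relative to reference_f_number.

    Uses the inverse-square relation between aperture number and light.
    """
    return (reference_f_number / f_number) ** 2


def stops_less_light(f_number: float, reference_f_number: float) -> float:
    """Number of stops (factors of two) less light than the reference."""
    return 2 * math.log2(f_number / reference_f_number)


# A camera specified at f/8 compared with one specified at f/5.6.
ratio = relative_light(8.0, 5.6)
stops = stops_less_light(8.0, 5.6)
print(f"f/8 passes {ratio:.2f}x the light of f/5.6 ({stops:.2f} stops less)")
# f/8 passes 0.49x the light of f/5.6 (1.03 stops less)
```

Under those assumptions, a camera that reaches the target level at f/8 needs about half the light of one that reaches it at f/5.6.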

It is useful to compare the sensitivity specification between cameras of similar types from a single camera manufacturer. Comparing specs among different camera types and different camera manufacturers can be difficult because of varying evaluation methods and camera characteristics.

For many machine-vision environments, the imaging is done at specific wavelengths instead of with white light. Most camera manufacturers will provide a spectral-response or quantum-efficiency chart for their cameras. This chart usually shows relative response (the point of highest sensitivity is 100%) over the range of 400 to 1000 nm. Most color cameras and some monochrome cameras have an infrared-cut filter that significantly reduces the sensitivity above 650 nm.

Minimum illumination specification

The minimum illumination specification is a reflection of the low-light sensitivity and gain of the camera. It is usually reported in terms similar to "0.1 lux at f/1.4 with AGC ON without IR filter." This statement means that with the lens aperture wide open (f/1.4) and automatic gain control on (gain will be at the maximum of the AGC range), a target output level will be achieved at 0.1 lux. The lower the lux reading, the more capable the camera is of imaging at low light levels.

Care must be taken when using this specification to compare different types of cameras and different camera manufacturers. This specification is very dependent on the maximum gain of the camera, exposure time, and target output level. Very high gains are useful to obtain an image, but the resulting image may be too noisy for the application. Every 6 dB of gain doubles the signal amplification. This measurement is typically done at video rates (exposure time of 33 ms). Very low minimum illumination specs are a reflection of very high gains and/or much longer exposure times.
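The 6-dB rule follows from the usual amplitude definition of the decibel, in which gain in dB is 20 times the base-10 logarithm of the amplification factor. The sketch below applies that definition. The function name is illustrative, and it assumes the camera's gain figure is quoted as an amplitude (voltage) ratio.

```python
def gain_db_to_factor(gain_db: float) -> float:
    """Convert a gain in dB to a signal amplification factor.

    Assumes the amplitude convention: dB = 20 * log10(factor).
    """
    return 10 ** (gain_db / 20)


for gain_db in (0, 6, 12, 18, 24):
    print(f"{gain_db:>2} dB -> x{gain_db_to_factor(gain_db):.2f}")
```

Each 6-dB step comes out as very nearly a factor of two, so 24 dB of gain is roughly 16 times the amplification at 0 dB. Noise in the signal is amplified along with it.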

Signal-to-noise ratio

The signal-to-noise ratio is the ratio of the maximum signal to the minimum noise the camera can achieve within an output image. This is usually expressed in decibels (dB) for a video camera. The higher the decibel spec, the better the contrast between signal and noise from the camera. In machine vision, a digital image is needed, so the image is digitized either in a frame grabber or in the camera. Since 6 dB represents a doubling of the ratio and each additional bit doubles the number of levels, 48 dB corresponds to about 8 bits and 60 dB to about 10 bits. The effective number of bits will depend on analog-signal transmission losses and the frame grabber.
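The decibel-to-bits correspondence can be checked with a short calculation. This is a simplified sketch that treats each bit as one doubling of the signal-to-noise ratio (about 6.02 dB) and ignores transmission losses and digitizer effects; the names are illustrative.

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # one doubling of the ratio, about 6.02 dB


def snr_db_to_bits(snr_db: float) -> float:
    """Approximate number of bits matched by a signal-to-noise ratio in dB."""
    return snr_db / DB_PER_BIT


def bits_to_snr_db(bits: float) -> float:
    """Signal-to-noise ratio in dB matched by a given number of bits."""
    return bits * DB_PER_BIT


for snr_db in (48, 60):
    print(f"{snr_db} dB -> {snr_db_to_bits(snr_db):.1f} bits")
# 48 dB -> 8.0 bits
# 60 dB -> 10.0 bits
```

Digitizing with more bits than the camera's signal-to-noise ratio supports mostly records noise in the extra bits.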

JERRY FIFE is senior project engineer at Sony Electronics, Park Ridge, NJ, USA; www.sony.com/videocameras.