New research defines human visual processing

Scientists know that the eye forwards several parallel representations of the world to the brain, but what these representations are and how they are produced have until now been a mystery. Although it is well known that the eye does not simply send digital inputs to the brain, scientists had thought that images detected by the retina's photoreceptors were sent to the brain after relatively simple processing.

Recent studies by Botond Roska and Prof. Frank S. Werblin of the University of California (Berkeley, CA), however, have shown that retinal cells extract only the essence of an image before sending it to the brain. Roska and Werblin have discovered that the eye sends between 10 and 12 output channels to the brain, each of which carries a different, stripped-down representation of the visual world.

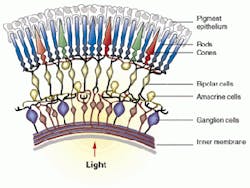

In the human retina, there are three layers of nerve cell bodies and two layers of synapses. Researchers at the University of California have now determined that amacrine cells allow the layers to interact. This crosstalk allows layers to process the visual data and extract 12 levels of sparse information that the ganglion cells send to the brain.

In the eye, light striking photoreceptors in the retina triggers signals that pass to a layer of horizontal cells and then to bipolar cells. These bipolar cells funnel signals down their axons and relay them to the dendrites, or inputs, of ganglion cells, which send the processed information to the brain. These cell types are arrayed in distinct layers stacked on top of each other. Axons from bipolar cells synapse with, or touch, the dendrites of the ganglion cells in a tangled region called the inner plexiform layer.

Over a three-year period, Roska measured signals from 200 ganglion cells, the output cells, in a rabbit's retina. From these, he and Werblin determined that there are approximately 12 different populations of ganglion cells, each of which produces a different output. One group of ganglion cells sends signals only when a moving edge is detected. Another group fires only after a stimulus stops. Another sees large uniform areas, and yet another detects only the area surrounding a figure.
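To make the idea of separate feature channels concrete, the sketch below runs three toy detectors over a one-dimensional strip of pixels. It is an illustration only, not the researchers' model: the function names, the one-pixel neighborhood, and the simple difference rules are all assumptions chosen for clarity.

```python
# Toy feature channels on a 1-D strip of pixel intensities.
# Hypothetical illustration; not the model described in the article.

def spatial_edges(frame):
    """Absolute difference between neighboring pixels (nonzero only at edges)."""
    return [abs(b - a) for a, b in zip(frame, frame[1:])]

def moving_edge_channel(prev_frame, frame):
    """Respond only where an edge exists now but did not in the previous frame."""
    prev_e = spatial_edges(prev_frame)
    cur_e = spatial_edges(frame)
    return [c if c > 0 and p == 0 else 0 for p, c in zip(prev_e, cur_e)]

def offset_channel(prev_frame, frame):
    """Respond only where a stimulus was present and has just stopped."""
    return [p if p > 0 and c == 0 else 0 for p, c in zip(prev_frame, frame)]

def uniform_area_channel(frame, radius=1):
    """Respond where a pixel and all its neighbors share the same nonzero value."""
    out = []
    for i, v in enumerate(frame):
        window = frame[max(0, i - radius):min(len(frame), i + radius + 1)]
        out.append(v if v > 0 and all(w == v for w in window) else 0)
    return out

# A bright bar that shifts one pixel to the right between two frames.
prev_frame = [0, 1, 1, 1, 0, 0]
frame = [0, 0, 1, 1, 1, 0]

moving = moving_edge_channel(prev_frame, frame)   # [0, 1, 0, 0, 1]
stopped = offset_channel(prev_frame, frame)       # [0, 1, 0, 0, 0, 0]
uniform = uniform_area_channel(frame)             # [0, 0, 0, 1, 0, 0]
```

Each channel discards most of the input and keeps a single feature, so the three outputs for the same pair of frames differ, much as the article describes for the ganglion cell populations.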

"Each representation emphasizes a different feature of the visual world (an edge, a blob, a movement) and sends the information along different paths to the brain," Werblin says. The researchers shared these findings with software designer David Balya in Hungary, who modeled this visual processing method on a computer. The model was a preliminary step before programming a neural-network IC to simulate the image processing that is performed in the eye.

In his research, Roska discovered that the inner plexiform layer, the region of tangled axons and dendrites, is structured in an orderly way. By staining the cells being recorded, he discovered that bipolar cell axons converge on 12 or so well-defined layers, where they synapse with the dendrites of the ganglion cells. Each layer of dendrites belongs to a specific population of ganglion cells. Another cell type, the amacrine cell, sends processes to the various layers of dendrites and allows the layers to interact. This crosstalk allows layers to process the visual data and extract the sparse information that the ganglion cells send to the brain.

"By interacting, layers within the retina make comparisons, subtractions, and differences," Werblin says, "to determine essential image features." The resulting 12 or so data channels are then sent to the brain. Roska's experimental technique allowed him to measure the output of ganglion cells, the excitation they received from bipolar cells, and the inhibition they received from amacrine cells. With this information he is now reconstructing the interactions between layers that result in the final output to the brain. "The question that remains is why certain layers interact," Roska says.
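One simple way to picture excitation and inhibition combining into a sparse output is a rectified subtraction. The sketch below is a toy under stated assumptions, not the researchers' reconstruction: the article does not give the arithmetic, so the rule `max(0, excitation - weight * inhibition)`, the use of a local average as the inhibitory signal, and the names `local_mean` and `ganglion_output` are all hypothetical.

```python
# Toy excitation-minus-inhibition model on a 1-D strip of pixels.
# Hypothetical illustration; the actual retinal arithmetic is not given in the article.

def local_mean(signal, radius=1):
    """Average of each value and its neighbors (stand-in for an inhibitory signal)."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius):min(len(signal), i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def ganglion_output(excitation, inhibition, weight=1.0):
    """Rectified difference: excitation minus weighted inhibition, floored at zero."""
    return [max(0.0, e - weight * i) for e, i in zip(excitation, inhibition)]

# A bright bar on a dark background.
excitation = [0, 0, 3, 3, 3, 0, 0]
inhibition = local_mean(excitation)
output = ganglion_output(excitation, inhibition)  # [0.0, 0.0, 1.0, 0.0, 1.0, 0.0, 0.0]
```

In this toy case the interior of the bar is cancelled and only its two borders survive, which shows how a subtraction between signals can turn a full image into a sparser representation.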

Because Balya's model mimics the output of the ganglion cells of the retina, it can show the difference between the observed world and the information sent to the brain. "We now are comparing the model's predictions when viewing natural scenes with data measurements from retinal cells," Roska says.