Deep learning at the edge with on-camera inference

Making decisions about subjective flaws, such as those that may be found on industrial camera casings, can prove difficult with traditional machine vision inspection methods. Deep learning techniques, however, offer an effective approach for inspection applications like this one.

To find out, engineers from FLIR Systems (Richmond, BC, Canada; www.flir.com/mv) built a demonstration setup from cost-effective components to evaluate the feasibility and effectiveness of the approach.

The demonstration involved inspecting camera cases for scratches, uneven paint, or printing defects. Manufacturers of industrial cameras must inspect their cases for such flaws to ensure a perfect-looking product.

Programming a traditional machine vision system to identify a range of potential defects, and to decide which combinations of defects are acceptable and which are not, would be very difficult. One small defect might be acceptable on its own, but are two small defects acceptable? What about one larger defect and one small one? This kind of subjective inspection is where deep learning technology can be deployed effectively.

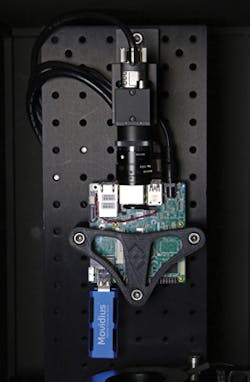

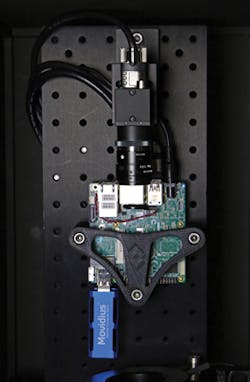

Figure 1: To test the system, FLIR engineers used an industrial camera, an AAEON single-board computer, and an Intel Movidius Neural Compute Stick.

To test how deep learning could solve this problem, FLIR engineers used one of the company's own industrial machine vision cameras, the 1.6 MPixel Blackfly S USB3, along with an AAEON (New Taipei City, Taiwan; www.aaeon.com) Up Squared single-board computer with a Celeron processor and 4 GB of memory running Ubuntu 16.04, and an Intel (Santa Clara, CA, USA; www.intel.com) Movidius Neural Compute Stick (NCS) (Figure 1). Released in 2017, the NCS is a USB-based “deep learning inference kit and self-contained artificial intelligence accelerator that delivers dedicated deep neural network processing capabilities to a range of host devices at the edge,” according to Intel.

The Neural Compute Stick is built around the Intel Movidius Myriad 2 Vision Processing Unit (VPU). This SoC combines two traditional 32-bit RISC processor cores with 12 SHAVE vector processing cores, which can accelerate the highly parallel computations used by deep neural networks. Neural networks for the NCS can be built and trained with popular deep learning frameworks including Caffe, TensorFlow, Torch and Theano.

MobileNet V1 was selected as the neural network because it combines high accuracy with a small size and is optimized for mobile hardware. FLIR used the TensorFlow framework to build and train a network with a width multiplier of 0.75, where 1.0 corresponds to the full-width MobileNet. An input image size of 224 x 224 was selected. The resulting network requires approximately 325 million multiply-adds (Mult-Adds) and has 2.6 million parameters.
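The Mult-Add and parameter figures quoted for this network can be reproduced from the MobileNet V1 layer table. The sketch below is a hypothetical helper, `mobilenet_v1_cost`, written for illustration rather than taken from FLIR's workflow. It assumes the standard 1000-class ImageNet classifier head and counts only convolution and fully connected weights, ignoring batch-norm and bias terms. Under those assumptions, a width multiplier of 0.75 at 224 x 224 lands on roughly 325 million Mult-Adds and 2.6 million weights; a three-class head like the one trained here would carry fewer parameters.

```python
import math

# MobileNet V1 body (Howard et al., 2017): (output channels, stride)
# for each of the 13 depthwise-separable blocks after the first conv.
BLOCKS = [
    (64, 1), (128, 2), (128, 1), (256, 2), (256, 1), (512, 2),
    (512, 1), (512, 1), (512, 1), (512, 1), (512, 1),
    (1024, 2), (1024, 1),
]


def mobilenet_v1_cost(alpha: float = 1.0, resolution: int = 224,
                      num_classes: int = 1000) -> tuple[int, int]:
    """Return (mult_adds, weights) for MobileNet V1.

    Counts conv and fully connected weights only; batch-norm and bias
    terms are ignored. num_classes=1000 assumes the ImageNet head.
    """
    def width(channels: int) -> int:
        # The width multiplier thins every layer uniformly.
        return int(channels * alpha)

    # First layer: standard 3x3 conv, stride 2, 3 input channels (RGB).
    size = math.ceil(resolution / 2)
    c_in = width(32)
    mult_adds = size * size * 3 * 3 * 3 * c_in
    weights = 3 * 3 * 3 * c_in

    for channels, stride in BLOCKS:
        c_out = width(channels)
        size = math.ceil(size / stride)
        # Depthwise 3x3: one filter per input channel.
        mult_adds += size * size * 3 * 3 * c_in
        weights += 3 * 3 * c_in
        # Pointwise 1x1: mixes c_in channels into c_out channels.
        mult_adds += size * size * c_in * c_out
        weights += c_in * c_out
        c_in = c_out

    # Global average pooling, then a fully connected classifier.
    mult_adds += c_in * num_classes
    weights += c_in * num_classes
    return mult_adds, weights
```

For example, `mobilenet_v1_cost(0.75)` returns `(325400448, 2568144)`, while `mobilenet_v1_cost(1.0)` gives roughly 569 million Mult-Adds and 4.2 million weights. Because the pointwise layers dominate and scale with the square of the multiplier, shrinking the width to 0.75 cuts compute to a little over half.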

Figure 2: An Intel Movidius Myriad 2 VPU is integrated directly into the new Firefly camera, which enables users to deploy trained neural networks directly onto the camera and conduct inference on the edge.

A small data set of 61 known good cases and 167 bad cases was used. The test environment was carefully designed to maximize consistency across images, so that the only differences between images were the defects themselves. To eliminate false results during the transitions between cases, a third “waiting” state was added. This was achieved by training a waiting class on 120 images of the stage with the camera case partially out of frame, occluded or misaligned.
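One way a host application could use the three-class output is to treat “waiting” frames as a reset between cases and report a single verdict per case only once the classifier has returned the same label for several consecutive frames. The sketch below is hypothetical: the function name `case_verdicts`, the label strings and the five-frame threshold are illustrative assumptions, not details of FLIR's setup.

```python
from typing import Iterable, Iterator

WAITING = "waiting"  # hypothetical label for the transition class


def case_verdicts(labels: Iterable[str], min_frames: int = 5) -> Iterator[str]:
    """Yield one 'good' or 'bad' verdict per camera case.

    Frames labelled 'waiting' mark the gap between cases and reset the
    state. A verdict is emitted once the same non-waiting label has been
    seen for `min_frames` consecutive frames; any further frames are
    ignored until the classifier returns to 'waiting'.
    """
    run_label, run_length, reported = None, 0, False
    for label in labels:
        if label == WAITING:
            # Case removed, moving or occluded: start fresh for the next one.
            run_label, run_length, reported = None, 0, False
            continue
        if label == run_label:
            run_length += 1
        else:
            run_label, run_length = label, 1
        if not reported and run_length >= min_frames:
            reported = True
            yield label
```

Feeding it the frame sequence `["waiting"] * 2 + ["good"] * 6 + ["waiting"] + ["bad", "good"] + ["bad"] * 5 + ["waiting"]` yields `["good", "bad"]`: the brief good/bad flicker on the second case is ignored until the label settles. At 25 fps, a five-frame threshold adds about 0.2 s of latency per case.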

Training was conducted on a desktop PC accelerated with an NVIDIA (Santa Clara, CA, USA; www.nvidia.com) GTX 1080 graphics processing unit (GPU). The network was optimized and converted into the Movidius graph format using Bazel, a tool originally developed by Google (Mountain View, CA, USA; www.google.com) for automating the building and testing of software.

Success was declared when classification ran at 25 fps with an accuracy of 97.3%. This is much faster than manual inspection, which can handle 10 cameras every three to four seconds.
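The 25 fps figure counts classified frames, while the manual rate counts cases, so a like-for-like comparison depends on how many frames the automated system needs per case. The arithmetic below uses a hypothetical `frames_per_case` parameter to show that, even if several frames were needed per case, automated throughput would still exceed the manual rate of 2.5 to about 3.3 cases per second. The function names are illustrative only.

```python
def inspection_rates(fps: float = 25.0, frames_per_case: int = 1,
                     manual_cases: int = 10,
                     manual_window_s: tuple[float, float] = (3.0, 4.0)):
    """Return (automated cases/s, (slowest, fastest) manual cases/s).

    frames_per_case is hypothetical: how many classified frames the
    automated system consumes before committing to a verdict.
    """
    automated = fps / frames_per_case
    slowest = manual_cases / max(manual_window_s)
    fastest = manual_cases / min(manual_window_s)
    return automated, (slowest, fastest)


def frame_budget_ms(fps: float = 25.0) -> float:
    """Time available to capture and classify one frame, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    for n in (1, 5):
        auto, (slow, fast) = inspection_rates(frames_per_case=n)
        print(f"{n} frame(s)/case: {auto:.1f} cases/s "
              f"vs manual {slow:.2f}-{fast:.2f} cases/s")
    print(f"Per-frame budget at 25 fps: {frame_budget_ms():.0f} ms")
```

At 25 fps the per-frame budget is 40 ms, which covers capture, transfer to the NCS and inference together.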

FLIR took the next step toward enabling deep learning by integrating the Intel Movidius Myriad 2 VPU directly into the new Firefly camera (Figure 2), which was on display at the company’s booth at VISION 2018, held November 6-8 in Stuttgart, Germany.

The Firefly enables users to deploy trained neural networks directly onto the camera and conduct inference on the edge with the same speed and accuracy as a system relying on a separate camera, host and Neural Compute Stick. The initial model features a monochrome 1.58 MPixel Sony (Tokyo, Japan; www.sony.com) IMX296 CMOS image sensor with a 3.45 µm pixel size, and delivers frame rates up to 60 fps over a USB 3.1 Gen 1 interface. Inference results can be output over GPIO or as GenICam chunk data.
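A quick bandwidth check shows why USB 3.1 Gen 1 comfortably carries this sensor's full frame rate. The sketch assumes 8-bit monochrome output (one byte per pixel) and takes the link's 5 Gbit/s signalling rate with 8b/10b line coding, which gives a theoretical payload ceiling of 500 MB/s; real-world throughput is lower once protocol overhead is included. The helper names are illustrative.

```python
LINE_RATE_BPS = 5_000_000_000  # USB 3.1 Gen 1 signalling rate: 5 Gbit/s


def link_ceiling_mb_per_s() -> float:
    """Theoretical payload ceiling after 8b/10b line coding, in MB/s."""
    payload_bps = LINE_RATE_BPS * 8 / 10   # 8 data bits per 10 line bits
    return payload_bps / 8 / 1e6           # bits -> bytes -> megabytes


def sensor_mb_per_s(pixels: int, fps: float, bytes_per_pixel: int = 1) -> float:
    """Raw image bandwidth; bytes_per_pixel=1 assumes 8-bit mono output."""
    return pixels * bytes_per_pixel * fps / 1e6


need = sensor_mb_per_s(1_580_000, 60)   # 1.58 MPixel at 60 fps
have = link_ceiling_mb_per_s()
print(f"{need:.1f} MB/s needed, {have:.0f} MB/s ceiling "
      f"({need / have:.0%} of link)")
```

Roughly 95 MB/s of image data uses under a fifth of the theoretical link capacity, leaving headroom for the comparatively tiny chunk data that carries inference results.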

For machine vision applications that fall within the capabilities of the Myriad 2 VPU and MobileNet, this $299 camera can eliminate the need for a host system. Weighing just 20 g and measuring 27 mm x 27 mm x 14 mm, less than half the volume of a standard “ice-cube” camera, the FLIR Firefly can dramatically reduce the size and mass of an embedded vision system.
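Reading 27 mm x 27 mm x 14 mm as the Firefly's own dimensions, the volume comparison can be checked with simple arithmetic. The ice-cube housing size used below, 29 mm x 29 mm x 30 mm, is an assumption chosen as a common ice-cube footprint; it is not a figure given in this article.

```python
from math import prod


def box_volume_mm3(dims_mm: tuple[int, int, int]) -> int:
    """Volume of a rectangular housing, in cubic millimetres."""
    return prod(dims_mm)


FIREFLY_MM = (27, 27, 14)    # Firefly dimensions quoted in the article
ICE_CUBE_MM = (29, 29, 30)   # assumption: typical ice-cube camera housing

firefly = box_volume_mm3(FIREFLY_MM)
ice_cube = box_volume_mm3(ICE_CUBE_MM)
print(f"Firefly {firefly} mm^3, ice-cube {ice_cube} mm^3, "
      f"ratio {firefly / ice_cube:.2f}")
```

Under this assumption the Firefly occupies about 40% of the ice-cube volume, consistent with the “less than half” claim; a fully cubic 29 mm housing gives a similar ratio of about 0.42.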

The Firefly camera consumes 1.5 W while imaging and performing inference. For more complex tasks that require additional processing, or that combine inference with traditional computer vision techniques, the Firefly still eliminates the need for a separate Neural Compute Stick.

According to FLIR, the Firefly camera makes it easy to get started with deep learning inference. Because it uses the familiar GenICam and USB3 protocols, users can plug it into existing applications and use it as a standard machine vision camera. Inference-generated metadata, such as object classification or position data, can be output as GPIO signals or as GenICam chunk data.