July/August 2019 snapshots: Memories for AI, LiDAR scanning in traffic, fusion reactor camera systems, and extreme-distance photography.

Nuclear fusion reactor monitored by ten-camera multispectral imaging system

Working with embedded vision cameras from XIMEA (Münster, Germany; www.ximea.com), Dutch scientists have developed a multispectral imaging system to study the inside of nuclear fusion reactors during experiments with superheated plasma.

The Plasma Edge Physics and Diagnostics group at the Dutch Institute for Fundamental Energy Research (DIFFER) (Eindhoven, Netherlands; www.differ.nl) studies fusion plasmas, the superheated material that allows scientists to fuse the hydrogen isotopes of deuterium and tritium into helium. This fusion reaction also releases energy and neutrons. The plasma is created by heating hydrogen gas to 150 million °C.

Scientists use a device called a tokamak to control the plasma using powerful magnetic fields. The neutrons created during the fusion reaction strike the walls of the tokamak. This transfer of kinetic energy heats the walls of the tokamak, the heat is used to produce steam, and the steam drives a turbine and creates electricity. For fusion reactions to be sustainable as a long-term source of energy, the walls of a tokamak must be able to survive the tremendous forces released during the fusion reaction.

The researchers at DIFFER use a linear plasma generator named Magnum-PSI to generate plasma and study the interaction between the outer edge of the plasma field and the walls of the plasma generator. To assist in these studies, the researchers developed a multi-camera system called MANTIS (Multispectral Advanced Narrowband Tokamak Imaging System), which is composed of ten MX031 embedded vision cameras and an XS12 switch from XIMEA.

The XIMEA PCIe driver is event-based. The MANTIS system must synchronize the event triggers of all ten cameras, which required the research team to alter the PCIe driver. Using the cameras’ Direct Memory Access technology, which delivers raw sensor data directly to PC memory, the team created buffers to predict the next event and reduce latency between event and image capture.

Light from the plasma enters each camera and strikes a corresponding image sensor. Each sensor is set to detect a different spectral range. From the images, the researchers can study the temperature and density of the plasma and the physical and chemical processes that take place in the “scrape-off layer,” the area in which the plasma interacts with the surface of the generator.

The researchers at DIFFER hope to use this information to assist in reactor control design strategies and to better understand power exhaust physics. The MANTIS system has also since been used in conjunction with the Swiss Plasma Center’s (Lausanne, Switzerland; www.spc.epfl.ch) fusion reactor, the Tokamak à Configuration Variable.

LiDAR-based vehicle detection and classification system operates in free-flowing traffic

SICK’s (Düsseldorf, Germany; www.sick.com) Free Flow Profiler is a LiDAR-based system that can detect, measure, and classify vehicles while they drive freely down roadways. The system consists of SICK LMS511 eye-safe 2D LiDAR sensors and a CPU called the Traffic Controller FPS. The sensors use 905 nm lasers, have an aperture angle of 190°, and operate at temperatures from -40 °C to 60 °C.

In the default setup for basic vehicle profiling, two LiDAR sensors, the width sensors, are mounted above the road on either side. These sensors scan the upper and side contours of the vehicle, which produces point clouds of 2D profile sections. The width sensors also generate measuring points for the vehicle lane. If those measuring points change, the Profiler system can tell that the width sensors are not properly aligned.

A third sensor, the length sensor, is mounted above the road in the middle of the lane and scans the front and roof of the vehicle as it approaches. These scans are used to calculate the length and speed of the vehicle. The Traffic Controller uses this information to track the relative locations of the 2D profile sections, combine them into a 3D point cloud, and calculate the vehicle dimensions and class with profiling software.
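The step of combining 2D profile sections into a 3D point cloud can be illustrated with a minimal Python sketch. It is not SICK's profiling software: it assumes a constant vehicle speed, a road surface at height zero, and hypothetical function names and sample values chosen only for illustration. Each profile section is shifted along the direction of travel by the measured speed multiplied by the elapsed time.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]         # (y, z): across-lane position and height, in metres
Point3D = Tuple[float, float, float]  # (x, y, z): x runs along the direction of travel


def assemble_point_cloud(sections: List[List[Point2D]],
                         timestamps: List[float],
                         speed_mps: float) -> List[Point3D]:
    """Place each 2D section along the travel axis using x = speed * elapsed time."""
    t0 = timestamps[0]
    cloud: List[Point3D] = []
    for section, t in zip(sections, timestamps):
        x = speed_mps * (t - t0)  # assumes constant speed between scans
        cloud.extend((x, y, z) for y, z in section)
    return cloud


def bounding_dimensions(cloud: List[Point3D]) -> Tuple[float, float, float]:
    """Return (length, width, height), taking the road surface as z = 0."""
    xs, ys, zs = zip(*cloud)
    return max(xs) - min(xs), max(ys) - min(ys), max(zs)


# Hypothetical data: three identical box-shaped sections taken 0.1 s apart
# from a vehicle travelling at 20 m/s (72 km/h).
section = [(-1.0, 0.0), (-1.0, 1.4), (1.0, 1.4), (1.0, 0.0)]
sections = [section, section, section]
timestamps = [0.0, 0.1, 0.2]

cloud = assemble_point_cloud(sections, timestamps, speed_mps=20.0)
length, width, height = bounding_dimensions(cloud)
print(f"length={length:.2f} m, width={width:.2f} m, height={height:.2f} m")
```

In this sketch the spacing between sections grows with vehicle speed, which is consistent with the article's remark that slower speeds give more accurate measurements.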

As with other LiDAR-based systems, the accuracy of the Profiler can be affected by weather conditions. However, the Profiler distinguishes measuring points generated by weather such as rain or snow from points generated by vehicle scans, and filters out the former.

“While looking at all of the data points, the irrelevant points in the pattern can be filtered away,” said Monika Bantle, Product Manager at SICK. “Of course, there are verification algorithms applied to the overall point cloud, as well. To distinguish rain or snow from the side mirrors or large spindrifts from a trailer are the more difficult parts, especially when it comes to dimension measurements with high accuracy requirements.”

Proper installation of the sensors and finding a location for the system where the road condition is constant are important for the Profiler to function properly. If there are large piles of snow or leaves within the scanning area, or if the road surface is extremely dark as a result of fresh paving, or if the road is extremely wet, the measuring points for the vehicle lane taken by the width sensors can be thrown off. If static objects like lane barriers, guardrails, or bumps in the road are within the field of view of the length sensor, length and speed measurements can become less accurate. Users are made aware of these challenges.

The Free Flow Profiler can be used to scan multiple lanes of traffic, depending on the number of sensors connected to the system. Vehicle classification can be accomplished at speeds up to 250 km/h (approx. 155 mph). For vehicle measurement, slower speeds provide more accurate results. Horizontally placed sensors can be used to count axles, which can assist in the operation of automated toll systems.

“The Profiler can be adjusted to provide the optimal results based on application and site conditions,” said Bantle.

The Profiler’s vehicle classification functionality was the basis of the system’s deployment at the Tallinn Passenger Port in Tallinn, Estonia. There, the Profiler calculates the dimensions of vehicles as they enter the port and assigns them to specific boarding lanes to make ferry loading more efficient.

Vision system used to study development of memories for artificial intelligence

A team of computer scientists from the University of Maryland (College Park, MD, USA; www.umd.edu) has employed a new form of computer memory that could lead to advances in autonomous robot and self-driving vehicle technology, and possibly in the development of artificial intelligence as a whole.

Humans learn naturally how to tie information about their position in the world with the information they gather from the world and learn to act based on that information. For instance, if a ball is thrown at a person enough times, they learn to gauge where they are in relation to the ball and raise their hands to catch the ball. This process is called “active perception,” and allows humans to anticipate future actions based on what they sense.

A human being’s sensory and locomotive systems are unified, which means our memories of an event contain this information combined. In a machine like a robot or drone, on the other hand, cameras and locomotion are separate systems with separate data streams. If that data can be combined, a robot or drone can create its own “memories,” and can learn more efficiently to mimic active perception.

In their study, titled “Learning Sensorimotor Control with Neuromorphic Sensors: Toward Hyperdimensional Active Perception,” (http://bit.ly/VSD-APC), the researchers from the University of Maryland describe a method for combining perception and action—what a machine sees and what a machine does—into a single data record.

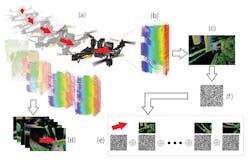

The researchers used a DAVIS 240b DVS (dynamic vision sensor) from iniLabs (Zurich, Switzerland; www.inilabs.com) and a Flight Pro board from Qualcomm (San Diego, CA, USA; www.qualcomm.com), attached to a quadcopter drone. The DVS only responds to changes in a scene, similar to how neurons in the human eye only fire when they sense a change in light.

Using a form of data representation called a hyperdimensional binary vector (HBV), information from the drone’s camera and information on the drone’s velocity were stored in the same data record. A convolutional neural network (CNN) was then tasked with remembering the action the drone needed to take, with only a visual record from the DVS as reference. The CNN accomplished the task in all experiments with 100% accuracy by referencing the “memories” generated by combining the camera and velocity data.
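The idea of storing what a machine sees and what it does in one record can be sketched in a few lines of Python. This is an illustration, not the researchers' implementation: it assumes XOR as the binding operator (a common choice in hyperdimensional computing that the article does not specify), random vectors in place of real sensor encodings, and a hypothetical vector length and set of velocity labels.

```python
import random

DIM = 8192  # bits per vector; illustrative, not taken from the study


def random_hbv(rng):
    """Return a random binary vector, stored as a Python int of DIM bits."""
    return rng.getrandbits(DIM)


def bind(a, b):
    """Bind two vectors with XOR; XOR-ing the result with either input returns the other."""
    return a ^ b


def hamming(a, b):
    """Count the bit positions in which two vectors differ."""
    return bin(a ^ b).count("1")


def flip_bits(vec, count, rng):
    """Simulate sensor noise by flipping `count` distinct, randomly chosen bits."""
    for pos in rng.sample(range(DIM), count):
        vec ^= 1 << pos
    return vec


def recall_velocity(record, image_vec, codebook):
    """Unbind the record with the image vector and return the closest known velocity label."""
    candidate = bind(record, image_vec)
    return min(codebook, key=lambda label: hamming(candidate, codebook[label]))


rng = random.Random(0)

# Hypothetical velocity labels, each assigned a random vector.
codebook = {label: random_hbv(rng) for label in ("left", "right", "forward", "hover")}

image_vec = random_hbv(rng)                    # stand-in for an encoded DVS frame
record = bind(image_vec, codebook["forward"])  # one "memory": vision and action together

# Query with a corrupted view of the same scene (10% of bits flipped).
noisy_image = flip_bits(image_vec, DIM // 10, rng)
recalled = recall_velocity(record, noisy_image, codebook)
print(recalled)
```

Because unrelated random vectors of this length differ in roughly half their bits, the correct velocity remains by far the nearest match even when the visual query is noisy.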

The principle behind this experiment, allowing a machine vision system to reference event and reaction data more quickly than if the two data streams were separate, could allow robots or autonomous vehicles to predict future action taken when specific visual data is captured, i.e. to anticipate action based on sensory input. Or, to put it another way, to imagine future events and think ahead.

Single-photon LiDAR research accomplishes 3D imaging at extreme distances

Researchers from the University of Science and Technology of China (Shanghai, China; www.ustc.edu.cn) have demonstrated the ability to take photographs of subjects up to 45 km (28 miles) away by using single-photon LiDAR. When coupled with a computational imaging algorithm, the new system can take high-resolution images at tremendous distances, potentially at hundreds of kilometers away.

Single-photon LiDAR (SPL) operates by acquiring one photon per ranging measurement instead of hundreds or thousands of photons, which gives SPL increased efficiency. Use of 532 nm (green) lasers allows SPL systems to penetrate semiporous elements like vegetation, ground fog, and thin clouds, and enables superior water depth measurements compared to traditional methods.

These advantages make SPL technology attractive for large-area applications like geospatial mapping. However, the density of data gathered by SPL can lead to an increased number of high and low noise points, which must be filtered out to provide accurate imaging.

The researchers from the University of Science and Technology of China achieved their results by designing a high-efficiency coaxial-scanning system that is better able to collect the weaker photons returned from longer-distance ranging measurements, and a computational algorithm designed specifically for long-range 3D imaging to filter out background noise.

The scanning system was built from a Cassegrain telescope with a 280 mm aperture and a high-precision two-axis automatic rotating stage, assembled on a custom-built aluminum platform. A 1550 nm laser with a 500 ps pulse width, a 100 kHz repetition rate, and an average power of 120 mW was used for ranging. The laser output was polarized vertically, projected into the telescope via a small hole in the mirror, and expanded.
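The laser figures above imply a few derived quantities that help put the 45 km result in context. The following Python sketch is back-of-the-envelope arithmetic, not part of the researchers' work; it assumes the vacuum speed of light and ignores atmospheric effects, and the function names are illustrative. It gives a pulse energy of about 1.2 µJ, a round-trip time of about 300 µs to a target 45 km away (roughly 30 pulse periods at 100 kHz), and a pulse extent in range of about 7.5 cm.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def pulse_energy_j(avg_power_w, rep_rate_hz):
    """Energy per pulse = average power / repetition rate."""
    return avg_power_w / rep_rate_hz


def round_trip_time_s(distance_m):
    """Time for light to reach the target and return."""
    return 2.0 * distance_m / C


def pulse_extent_m(pulse_width_s):
    """Range extent covered by one pulse (out-and-back path halves it)."""
    return C * pulse_width_s / 2.0


AVG_POWER_W = 0.120     # 120 mW
REP_RATE_HZ = 100e3     # 100 kHz
PULSE_WIDTH_S = 500e-12 # 500 ps
TARGET_M = 45e3         # 45 km

energy = pulse_energy_j(AVG_POWER_W, REP_RATE_HZ)
round_trip = round_trip_time_s(TARGET_M)
periods_in_flight = round_trip * REP_RATE_HZ
extent = pulse_extent_m(PULSE_WIDTH_S)

print(f"pulse energy: {energy * 1e6:.2f} uJ")
print(f"round trip to 45 km: {round_trip * 1e6:.1f} us")
print(f"pulse periods elapsed during round trip: {periods_in_flight:.1f}")
print(f"pulse extent in range: {extent * 100:.1f} cm")
```

Because many pulses are emitted before the first one returns from such a distance, a long-range system has to work out which outgoing pulse each detected photon belongs to.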

An InGaAs/InP single-photon avalanche diode detector registered the returning photons after they were spectrally filtered, and the custom-designed algorithm used global-gating and a 3D matrix to provide super-resolved image reconstructions.

The researchers placed their imaging equipment on the 20th floor of a building and targeted the Pudong Civil Aviation building, 45 km away. The experiment was conducted at night, and a 128 x 128 image was produced. A second experiment targeted a skyscraper named K11, 21.6 km away, to produce a 256 x 256 image. This experiment was conducted both during the day and at night. In all cases, the custom algorithm was able to detect features of the buildings that other, established algorithms could not.

The researchers concluded that their system could be adapted for rapid data acquisition of moving targets and fast imaging from moving platforms, and that refinements of their system could make image acquisition of objects a few hundred kilometers away feasible.