Deep learning powers chimpanzee face recognition research

Deep learning software based on a convolutional neural network (CNN) has learned to identify individual chimpanzees in video footage captured in the wild, where image quality varies greatly. The breakthrough could give conservationists a potent tool for tracking animal populations.

Scientists have accumulated decades’ worth of wildlife video from camera traps: cameras placed in the field that a trigger, commonly motion, activates to automatically record passing animals. Before scientists can glean relevant data from this footage, coders must review it and tag it with labels, for instance indicating whether a certain species is present.

Researchers can use platforms like Zooniverse (www.zooniverse.org)—a website where citizen scientists can volunteer to help classify video data for researchers, among other tasks—to generate data for training datasets that can teach CNNs how to automatically identify animal species in camera trap footage (bit.ly/VSD-CTZ).
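When several volunteers classify the same clip, their answers have to be combined into a single label before they can serve as training data. The sketch below assumes a simple majority vote with a hypothetical agreement threshold; it is not Zooniverse's own aggregation code, and the clip IDs and species labels are made up.

```python
from collections import Counter


def consensus_label(votes, min_agreement=0.5):
    """Return the majority label for one clip, or None if agreement is too low.

    votes: species labels submitted by different volunteers for the same clip.
    min_agreement: hypothetical threshold the winning label's vote share must exceed.
    """
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    share = count / len(votes)
    return label if share > min_agreement else None


# Hypothetical volunteer classifications for two camera-trap clips.
clips = {
    "clip_001": ["chimpanzee", "chimpanzee", "chimpanzee", "baboon"],
    "clip_002": ["duiker", "bushbuck", "duiker", "bushbuck"],
}
training_labels = {clip: consensus_label(v) for clip, v in clips.items()}
print(training_labels)
```

Clips without a clear majority (like the tied `clip_002`) are left unlabeled here rather than guessed, which keeps ambiguous examples out of the training set.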

Identifying individual animals is harder than identifying species: even trained observers find it challenging to code individual animals correctly in captured footage. Citizen scientists, with much less training, would likely find it even harder to produce image sets robust enough to train CNNs to identify individual animals across large amounts of video.

A coalition of scientists from Great Britain, Japan, and Portugal, working with researchers from Gorongosa National Park in Mozambique, has addressed these issues by creating an automated process for detecting, tracking, and recognizing individual chimpanzees, described in their paper “Chimpanzee face recognition from videos in the wild using deep learning” (bit.ly/VSD-CMP).

Previous face recognition methods for primates have relied on cropped, front-facing images of faces and/or on images of animals in captivity. Building training datasets for face recognition from camera trap footage means dealing with far more variation in image quality, caused by factors such as lighting conditions, motion blur, and occlusions.

The researchers addressed this challenge by building a face detection, tracking, and identification system. The CNN training dataset consisted of almost 50 hours of video randomly sampled from six field seasons, drawn from 14 years’ worth of longitudinal footage gathered at a field site in the Bossou forest in southeastern Guinea, West Africa. The videos featured varied lighting conditions, motion blur, and occlusion by other chimpanzees and vegetation, among other quality issues.

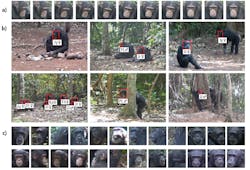

Frames were extracted every ten seconds from a piece of video shot in 2008, and researchers drew bounding boxes around the chimpanzee faces in them, for a total of 5,570 face boxes. These annotations were used to train a deep single-shot detector (SSD) model to detect chimpanzee faces. The model was based on the VGG16 CNN architecture (bit.ly/VSD-VGG16), built with the MatConvNet machine learning library, and trained on two Titan X graphics processing units (GPUs) from NVIDIA (Santa Clara, CA, USA; www.nvidia.com).
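The article does not say what frame rate the footage used or which tool pulled the frames. As a minimal sketch, assuming a constant frame rate, the ten-second sampling step reduces to picking the frame indices nearest each ten-second mark; a video library (for example OpenCV's `cv2.VideoCapture`) could then seek to those indices.

```python
from typing import List


def sample_frame_indices(num_frames: int, fps: float, interval_s: float = 10.0) -> List[int]:
    """Indices of the frames that fall on every `interval_s`-second mark of a clip.

    Assumes a constant frame rate; `fps` would normally be read from the video file.
    """
    step = fps * interval_s  # frames between consecutive samples
    if step <= 0:
        raise ValueError("fps and interval_s must be positive")
    indices = []
    k = 0
    while True:
        idx = round(k * step)  # nearest frame to the k-th sampling time
        if idx >= num_frames:
            break
        indices.append(idx)
        k += 1
    return indices


# A 60-second clip at 25 fps has 1,500 frames, giving one sample every 250 frames.
print(sample_frame_indices(1500, 25.0))
```

Sampling sparsely like this keeps annotation effort manageable, since neighboring frames a fraction of a second apart would mostly repeat the same faces.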

Face tracking algorithms implemented in MATLAB from MathWorks (Natick, MA, USA; www.mathworks.com) created “face tracks”: sequences of frame-by-frame detections of the same face within a single shot of video. If three chimpanzees appear in one shot, for example, that shot yields three face tracks.
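The article does not specify which tracking algorithm the MATLAB code used. The sketch below swaps in a common, simple stand-in: greedy linking of each frame's detections to existing tracks by intersection-over-union (IoU). The threshold, the allowed frame gap, and the toy boxes are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# A face bounding box as (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two boxes (0.0 when they do not overlap)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    # Frame index -> detected face box for this track.
    boxes: Dict[int, Box] = field(default_factory=dict)

    def last(self) -> Tuple[int, Box]:
        frame = max(self.boxes)
        return frame, self.boxes[frame]


def link_detections(frames: List[List[Box]], iou_thresh: float = 0.3,
                    max_gap: int = 1) -> List[Track]:
    """Greedily link per-frame face detections from one shot into face tracks.

    frames[t] holds the detector's boxes for frame t of the shot.
    iou_thresh and max_gap are hypothetical tuning parameters.
    """
    tracks: List[Track] = []
    for t, detections in enumerate(frames):
        unmatched = list(detections)
        # Only extend tracks whose most recent box is at most max_gap frames old.
        active = [tr for tr in tracks if t - tr.last()[0] <= max_gap]
        for tr in active:
            if not unmatched:
                break
            _, prev = tr.last()
            best = max(unmatched, key=lambda d: iou(prev, d))
            if iou(prev, best) >= iou_thresh:
                tr.boxes[t] = best
                unmatched.remove(best)
        # Detections no track claimed start new tracks (e.g., a face entering the shot).
        for d in unmatched:
            tracks.append(Track(boxes={t: d}))
    return tracks


# Toy shot: two faces drifting right, and a third face appearing in frame 1.
shot = [
    [(0, 0, 10, 10), (50, 50, 60, 60)],
    [(1, 0, 11, 10), (51, 50, 61, 60), (100, 0, 110, 10)],
    [(2, 0, 12, 10), (52, 50, 62, 60), (101, 0, 111, 10)],
]
tracks = link_detections(shot)
print(len(tracks), [len(tr.boxes) for tr in tracks])
```

As in the article's example, three chimpanzees in one shot produce three face tracks; the third track is shorter because that face enters one frame later.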

Assigning an identity label to each face track, rather than to each individual frame, produced millions of annotated images for training the face recognizer. The researchers created 23 identity classes, one for each of the 14 female and 9 male chimpanzees present in the training dataset. An additional identity class, nonface, captured false positives, eliminating the need to remove false-positive face tracks manually.
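Labeling whole tracks is what makes the annotation effort scale: one label per track yields one training sample for every frame in that track. A minimal sketch of that expansion follows; the individual names, track IDs, and boxes are hypothetical placeholders, and the data layout is an assumption rather than the researchers' format.

```python
from collections import Counter
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2)

# Hypothetical placeholder names standing in for the 23 Bossou individuals.
INDIVIDUALS = [f"chimp_{i:02d}" for i in range(1, 24)]
# One class per individual, plus "nonface" for tracks that are detector false positives.
CLASSES = INDIVIDUALS + ["nonface"]


def expand_track_labels(tracks: Dict[str, Dict[int, Box]],
                        track_labels: Dict[str, str]) -> List[Tuple[int, Box, str]]:
    """Turn one identity label per track into one (frame, box, label) sample per detection."""
    samples = []
    for track_id, boxes in tracks.items():
        label = track_labels[track_id]
        if label not in CLASSES:
            raise ValueError(f"unknown identity class: {label!r}")
        for frame, box in sorted(boxes.items()):
            samples.append((frame, box, label))
    return samples


tracks = {
    "track_a": {0: (0, 0, 10, 10), 1: (1, 0, 11, 10), 2: (2, 0, 12, 10)},
    "track_b": {5: (40, 40, 44, 44), 6: (41, 40, 45, 44)},
}
labels = {"track_a": "chimp_05", "track_b": "nonface"}
samples = expand_track_labels(tracks, labels)
print(len(samples), Counter(label for _, _, label in samples))
```

Because false-positive tracks simply receive the `nonface` label, they become useful training examples instead of something an annotator has to delete.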

Finally, an identity class called negatives, composed of chimpanzee faces from sources outside the Bossou footage, made the classifier more discriminative among the 23 identity classes representing the individual chimpanzees. The researchers ran the full three-step detection, tracking, and recognition pipeline on the 50 hours of footage from which the dataset was drawn. Face identification accuracy was 92.47%; when only frames with full frontal face views were considered, it rose to 95.07%.
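The two accuracy figures come from scoring the same predictions over two sets: all faces, and only the frontal-view subset. A minimal sketch of that computation follows, with toy records rather than study data; the frontal-view flag is assumed to come from annotation.

```python
from typing import Iterable, List, Tuple

# (predicted identity, true identity, face is a full frontal view)
Record = Tuple[str, str, bool]


def accuracy(records: List[Record]) -> float:
    """Fraction of records whose predicted identity matches the true identity."""
    if not records:
        raise ValueError("no records to score")
    return sum(pred == true for pred, true, _ in records) / len(records)


def accuracy_report(records: Iterable[Record]) -> Tuple[float, float]:
    """Accuracy on all faces and on the frontal-view subset only."""
    records = list(records)
    frontal = [r for r in records if r[2]]
    return accuracy(records), accuracy(frontal)


toy = [
    ("chimp_01", "chimp_01", True),
    ("chimp_02", "chimp_02", True),
    ("chimp_03", "chimp_03", True),
    ("chimp_01", "chimp_01", False),
    ("chimp_02", "chimp_03", False),  # a profile view misidentified
]
overall, frontal_only = accuracy_report(toy)
print(f"all faces: {overall:.0%}, frontal only: {frontal_only:.0%}")
```

Restricting to frontal views removes the hardest cases (profiles, partial occlusions), which is why the frontal-only figure is the higher of the two.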

To further test the method, the researchers ran the pipeline on two datasets of Bossou footage from two years outside the six field seasons used to build the dataset. In this new footage, the appearance of some individuals in the observed community had changed with age. Identity recognition accuracy for the two tests was 91.81% and 91.37%, suggesting a robust model that can maintain accuracy despite changes in the observed population over a longitudinal study.

Human annotators with experience coding chimpanzee faces in Bossou footage specifically were given 100 randomly selected face images from the test set. Each annotator also received 50 further images and a table showing three side-by-side examples of each chimpanzee to help identify the individuals in the 100 test images.

No time limits were given. The more experienced annotators achieved an average identity classification accuracy of 42% and took around 55 minutes to complete the task. Running on a single Titan X GPU, the pipeline processed the same 100 images in 60 ms with 84% accuracy.
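Putting the reported figures on a per-image basis makes the gap concrete. This is only back-of-the-envelope arithmetic on the numbers above; the 55-minute human time is an approximate average, so the speedup is a rough order of magnitude.

```python
# Figures reported for the 100-image comparison; human time is an approximate average.
n_images = 100
human_minutes = 55
model_ms = 60
human_acc, model_acc = 0.42, 0.84

human_s_per_image = human_minutes * 60 / n_images   # seconds per image for a person
model_ms_per_image = model_ms / n_images            # milliseconds per image on one Titan X
speedup = (human_minutes * 60 * 1000) / model_ms    # ratio of total task times
accuracy_ratio = model_acc / human_acc

print(f"human: {human_s_per_image:.0f} s/image, model: {model_ms_per_image:.1f} ms/image")
print(f"~{speedup:,.0f}x faster, {accuracy_ratio:.1f}x the accuracy")
```

Roughly 33 seconds per image for a person versus 0.6 ms for the pipeline, a ratio on the order of 55,000 to one, at twice the accuracy.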

The researchers describe the pipeline as species-agnostic, though its adaptability to other animals still needs to be researched with appropriate datasets.

About the Author

Dennis Scimeca

Dennis Scimeca is a veteran technology journalist with expertise in interactive entertainment and virtual reality. At Vision Systems Design, Dennis covered machine vision and image processing with an eye toward leading-edge technologies and practical applications for making a better world. Currently, he is the senior editor for technology at IndustryWeek, a partner publication to Vision Systems Design.