BLIP-TIA System Increases Speed and FOV of 3D Imaging

Researchers from the Centre Énergie Matériaux Télécommunications at the Institut National de la Recherche Scientifique (INRS, Quebec, Canada; www.inrs.ca) have developed a method that increases both the imaging speed and the field of view (FOV) of high-speed 3D imaging. The method uses two high-speed cameras simultaneously, together with a new algorithm that matches pixels in the acquired images.

Using two cameras for illumination profilometry, which projects patterns of light onto an object and records the deformation of those patterns to compute 3D coordinates, increases the amount of data acquired and hence the detail of the resulting 3D images. It also introduces two challenges.

First, in current multi-camera methods, each camera captures the entire sequence of projected patterns. This generates redundant data, and the time required to process it slows imaging. To keep imaging speed up, the FOV usually has to be reduced to eliminate some of the redundant data.

Second, the usual approach of placing a camera on either side of the pattern projector introduces differences in light intensity at individual points, depending on the angle at which the light strikes the surface relative to each camera’s orientation. It also introduces occlusion issues. Both problems reduce the accuracy of reconstruction and make it difficult to image specularly reflective surfaces.

The new technique is named dual-view band-limited illumination profilometry (BLIP) with temporally interlaced acquisition (TIA) and is described in the research paper “High-speed dual-view band-limited illumination profilometry using temporally interlaced acquisition” (bit.ly/VSD-BLIPTIA). It uses two cameras and a new algorithm to match 3D coordinates captured from different views. With TIA, each camera captures only half of a sequence of phase-shifted patterns, which decreases the data throughput each camera generates. The cameras are placed as close together as possible on a single side of the projector to mitigate differences in light intensity.
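The idea of splitting a phase-shifted sequence between two cameras can be illustrated with a minimal single-pixel sketch. It assumes the textbook four-step phase-shifting formula, an even/odd split of the frames between the cameras, and that the two views have already been matched to the same surface point. None of these details are taken from the paper, and all function names are hypothetical.

```python
import math

def project_patterns(phase, offset=0.5, amplitude=0.4, steps=4):
    """Intensities one surface point shows under each phase-shifted pattern.

    Assumes sinusoidal fringes shifted by 2*pi/steps per pattern.
    """
    return [
        offset + amplitude * math.cos(phase + 2 * math.pi * k / steps)
        for k in range(steps)
    ]

def interlace(frames):
    """Assumed split: camera A records even patterns, camera B odd ones."""
    return frames[0::2], frames[1::2]

def recover_phase(cam_a, cam_b):
    """Standard four-step phase recovery from the two half-sequences.

    Only valid once the pixels from both cameras are known to see the
    same surface point (the coordinate-matching step is not modeled here).
    """
    i0, i2 = cam_a
    i1, i3 = cam_b
    return math.atan2(i3 - i1, i0 - i2)

true_phase = 1.0  # radians, arbitrary test value
cam_a, cam_b = interlace(project_patterns(true_phase))
estimated_phase = recover_phase(cam_a, cam_b)
print(len(cam_a), len(cam_b), round(estimated_phase, 6))
```

Each camera stores two frames instead of four, yet the combined data still determines the fringe phase at the matched point.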

An MRL-III-671 continuous-wave laser from CNI Lasers (Changchun, China; www.cnilaser.com) with an AF-P DX NIKKOR 18–55 mm focal length lens from Nikon (Tokyo, Japan; www.nikon.com) projected patterns, which were generated by an AJD-4500 digital micromirror device controller from Ajile Light Industries (Ontario, Canada; www.ajile.ca) with an optical low-pass filtering system, onto the imaged objects. Two CP70-1HS-M-1900 cameras from Optronis GmbH (Kehl, Germany; www.optronis.com), placed approximately 12 cm from one another and each with an AZURE-3520MX5M 35 mm focal length lens from Azure Photonics (Fuzhou, Fujian, China; www.azurephotonics.com), and a computer equipped with two Cyton-CXP frame grabbers from Bitflow (Woburn, MA, USA; www.bitflow.com) acquired and translated the images into 3D representations.

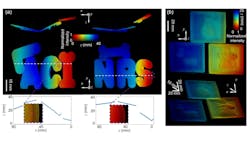

The researchers tested the new system by imaging various objects like 3D letter toys and cube toys with fine structures on the surface, dynamic objects like moving hands and bouncing balls, and a glass cup made to vibrate with a single-frequency sound signal and then broken with a hammer. The BLIP-TIA system successfully produced 3D images in every test.

“BLIP-TIA mitigates the tradeoff between the imaging speed and the FOV using a paradigm applicable to many 3D imaging systems. We can reach a higher imaging speed with a fixed FOV, or a larger FOV with a fixed imaging speed,” explains Jinyang Liang, an assistant professor at INRS and a researcher on the project.
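The tradeoff Liang describes can be sketched with simple arithmetic. The model below assumes that each camera link has a fixed pixel throughput and that every 3D frame needs a four-pattern sequence. The frame rate, throughput budget, and function names are hypothetical values chosen for illustration, not figures from the paper.

```python
def max_3d_rate(camera_fps, patterns_per_sequence, cameras_sharing):
    """3D frames per second when the sequence is split across cameras."""
    frames_per_camera = patterns_per_sequence / cameras_sharing
    return camera_fps / frames_per_camera

def max_pixels_per_frame(throughput_px_per_s, rate_3d, frames_per_camera):
    """Largest frame size a fixed pixel-throughput budget allows."""
    return throughput_px_per_s / (rate_3d * frames_per_camera)

CAMERA_FPS = 4000            # hypothetical camera frame rate
BUDGET = 2_000_000_000       # hypothetical pixels per second per camera
PATTERNS = 4                 # assumed four-step sequence

# Fixed FOV: sharing the sequence between two cameras raises the 3D rate.
rate_full = max_3d_rate(CAMERA_FPS, PATTERNS, cameras_sharing=1)
rate_interlaced = max_3d_rate(CAMERA_FPS, PATTERNS, cameras_sharing=2)

# Fixed 3D rate: halving the frames per camera allows larger frames.
pixels_full = max_pixels_per_frame(BUDGET, 1000, frames_per_camera=4)
pixels_interlaced = max_pixels_per_frame(BUDGET, 1000, frames_per_camera=2)

print(rate_full, rate_interlaced)
print(pixels_full, pixels_interlaced)
```

Under these assumptions, halving the frames each camera must capture per 3D image either doubles the achievable 3D rate or doubles the pixels available per frame.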

In the case of the vibrating glass cup, according to the researchers, existing 3D imaging methods cannot image fast enough to capture the glass vibrating at its resonant frequency. The BLIP-TIA system had the requisite speed to accomplish the task. The tests involving moving hands and bouncing balls demonstrated the larger FOV. “With a fixed 3D imaging speed at 1 kfps, we have surpassed the existing pattern-projection methods, which could only image at 512 × 512 or 768 × 640 pixels, whereas the BLIP-TIA method imaged at a resolution of 1180 × 860 pixels, which represents an increased FOV,” says Cheng Jiang, a doctoral student at INRS and lead author of the paper.
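To put the quoted resolutions in perspective, the short calculation below compares total pixel counts. Treating pixel count as a proxy for FOV assumes the same spatial sampling per pixel in every system, which the article does not state.

```python
def pixel_count(width, height):
    """Total number of pixels in one frame."""
    return width * height

blip_tia = pixel_count(1180, 860)

# Resolutions quoted for existing pattern-projection methods.
earlier = {
    "512 x 512": pixel_count(512, 512),
    "768 x 640": pixel_count(768, 640),
}

ratios = {name: blip_tia / count for name, count in earlier.items()}

for name, ratio in ratios.items():
    print(f"1180 x 860 has {ratio:.2f}x the pixels of {name}")
```

By this measure, the 1180 × 860 frame holds roughly twice as many pixels as the 768 × 640 frame and nearly four times as many as the 512 × 512 frame.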

About the Author

Dennis Scimeca

Dennis Scimeca is a veteran technology journalist with expertise in interactive entertainment and virtual reality. At Vision Systems Design, Dennis covered machine vision and image processing with an eye toward leading-edge technologies and practical applications for making a better world. Currently, he is the senior editor for technology at IndustryWeek, a partner publication to Vision Systems Design.