Neural nets help security systems

By Dave Wilson

Identification systems based on magnetic cards and chip cards, PINs, and passwords certainly provide some security for the user. But do they provide enough? Or is the traditional approach of using codes and passwords consisting of numbers or letters too complicated or inconvenient to use? If so, using the faces of individuals to verify their identity is an alternative with some apparent benefits.

Face-recognition systems verify or identify individuals. For example, verification systems used in automatic teller machines (ATMs) at banks compare the facial image on the user's smart card with a captured image. By comparing the two images, ownership of the card is verified.

In image identification, the identity of an unknown person is determined by comparing his or her face to previously stored images. Images of individuals are again captured, but no additional data are provided to the system. Such identification systems are being used as airport security systems where facial images are compared with those in databases of wanted criminals.

Verification software is simpler than recognition software because the information on the smart card tells the computer which stored image to compare, limiting the database search to a single record. Recognition systems must extract data from many individual face images and find similarities between captured and stored images.
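The difference in search effort can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any vendor's method: it assumes each face has already been reduced to a numeric feature vector, and the names `cosine_similarity`, `verify`, and `identify`, as well as the 0.9 threshold, are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def verify(probe, enrolled, threshold=0.9):
    """One-to-one check: does the live image match the template read from the card?"""
    return cosine_similarity(probe, enrolled) >= threshold

def identify(probe, gallery, threshold=0.9):
    """One-to-many search: return the best-matching name, or None if nothing is close enough."""
    best_name, best_score = None, threshold
    for name, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name
```

Verification costs one comparison, whereas identification costs one comparison per stored face, so its workload grows with the size of the database.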

Applying neural networks

In image-recognition systems, images may be presented at angles that are different from the angle at which the original image was taken. Also, original facial images may change; for example, hairstyles may change or glasses may be replaced with contact lenses.

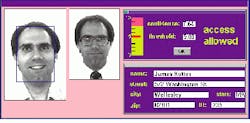

The TrueFace Access System developed by Miros (Wellesley, MA) allows systems developers to capture images using off-the-shelf CCD cameras, frame grabbers, and personal computers (PCs). Running on the PC, the TrueFace package compares a facial image with a previously recorded image to determine whether both show the same person (see Fig. 1).

To develop the software, Michael Kuperstein, president of the company, turned to neural networks. "Applying rule-based algorithms to recognize faces does not work," he says. "Rules cannot capture the richness of a complex pattern such as the human face. But artificial neural networks (ANNs) are ideally suited to the task because they can learn by distributing images or features across a network that comprises many highly interconnected processing units or artificial neurons."

Feedforward or feedback

The processing elements in a neural network can be connected as feedforward (or multilayer) networks, feedback (or recurrent) networks, or cellular networks.

In feedforward networks, each neuron receives input only from neurons in earlier layers; there is no feedback. A feedforward network calculates an output pattern in response to an input pattern. Once the network has been trained and the connection weights between the nodes are fixed, the output response to a given input pattern is always the same. During training, data are presented at the network's input and the resulting output is compared with the desired result, so that the network learns to reduce its error in predicting event likelihoods. In an image-recognition system, it learns to get better at matching faces.
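Both properties can be illustrated with a minimal sketch, assuming the simplest possible feedforward network: a single sigmoid neuron trained by gradient descent on squared error. A toy logical-OR task stands in for face data, and the function names, learning rate, and epoch count are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(weights, bias, x):
    """Feedforward pass: weighted sum of the inputs, then a squashing function; no feedback."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def mean_squared_error(weights, bias, data):
    return sum((forward(weights, bias, x) - t) ** 2 for x, t in data) / len(data)

def train(data, epochs=500, rate=1.0):
    """Adjust the weights after each example so the output error shrinks (gradient descent)."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, target in data:
            y = forward(weights, bias, x)
            # Gradient of 0.5 * (y - target)**2 with respect to the neuron's net input
            delta = (y - target) * y * (1.0 - y)
            weights = [w - rate * delta * xi for w, xi in zip(weights, x)]
            bias -= rate * delta
    return weights, bias

# Toy training set: inputs and desired outputs for logical OR
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
```

Once `train` returns, the weights are frozen, so repeated calls to `forward` with the same input give the same output.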

Of the several ANN approaches that have been used, much effort has been placed on multilayer perceptrons (MLPs), a class of feedforward neural networks with neurons arranged in layers. But, according to Ming Zhang of the department of computing and information systems at the University of Wollongong in New South Wales, Australia, such simple models have not been very successful in solving complex problems such as face recognition. That may be why, to date, no automatic face-recognition system has been developed that can operate in real time under variable lighting conditions.

One issue in developing more accurate ANN systems is dealing with faces that are rotated or shifted relative to the original reference images. To solve this, researchers are developing "translation invariant" face-recognition techniques.

In a third-order neural network, shaded squares between input and output layers show where the products of the incoming signals are computed and passed on (see Fig. 2). For face recognition, this type of neural network seems most promising.
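In generic higher-order-network notation (assumed here, not taken from the cited paper), a third-order unit i multiplies triples of inputs together before weighting and summing them:

$$
y_i = f\left( \sum_{j} \sum_{k} \sum_{l} w_{ijkl}\, x_j\, x_k\, x_l \right)
$$

Here x_j are the input pixel values, w_ijkl is the weight attached to the triple (j, k, l), and f is a nonlinear activation such as a hard threshold or a sigmoid. A first-order unit, by contrast, sums only single terms w_ij x_j.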

Researchers in the department of computer engineering at Florida Atlantic University (Boca Raton, FL) have shown that third-order neural networks can be used effectively both for face recognition and for enhancing digitized images. They claim that the most significant advantage of third-order networks over their first-order counterparts is that invariance to geometric transformations can be built into the network instead of being learned through iterative weight updates.
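One common way to build in such invariance, described in the higher-order-network literature and assumed here rather than taken from the Florida Atlantic work, is weight sharing: every triple of pixels that forms a similar triangle gets the same weight, chosen as a function of the triangle's interior angles. Those angles do not change when a pattern is translated, rotated, or uniformly scaled, so neither does the unit's output. In this sketch the input is the set of "on" pixel coordinates of a binary image, each unordered triple is counted once, and `shared_weight` is a hypothetical fixed function standing in for weights that would normally be learned.

```python
import math
from itertools import combinations

def _angle_at(a, b, c):
    """Interior angle (radians) at vertex a of triangle abc."""
    v1 = (b[0] - a[0], b[1] - a[1])
    v2 = (c[0] - a[0], c[1] - a[1])
    cos_a = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
    return math.acos(max(-1.0, min(1.0, cos_a)))  # clamp guards against rounding

def triangle_key(p, q, r):
    """Similarity class of a triangle: its sorted interior angles, rounded."""
    angles = (_angle_at(p, q, r), _angle_at(q, r, p), _angle_at(r, p, q))
    return tuple(sorted(round(a, 6) for a in angles))

def shared_weight(key):
    """Hypothetical stand-in for a learned weight shared by all similar triangles."""
    a, b, c = key
    return 0.1 * math.cos(3 * a) + 0.05 * math.sin(2 * b) - 0.02 * c

def third_order_output(on_pixels, bias=0.0):
    """Third-order unit on a binary image: x_j * x_k * x_l is 1 only when all three
    pixels are on, so the triple sum runs over combinations of 'on' pixels."""
    total = bias
    for p, q, r in combinations(sorted(on_pixels), 3):
        total += shared_weight(triangle_key(p, q, r))
    return 1.0 / (1.0 + math.exp(-total))
```

Shifting the pattern, rotating it by 90 degrees, or doubling its coordinates leaves the output unchanged up to floating-point rounding. The scaling check applies to a sparse point pattern; scaling a real image would also add pixels and therefore new triples.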

To test this theory, the developers based the network's algorithm on the three-dimensional (3-D) characteristics of the face. The 3-D structure of each face in the database was captured by identifying and isolating regions of constant intensity in the image and transforming those regions into binary images. These binary images of the constant-intensity regions were then used to train an ANN. Simulation results show that the model performed well in translation-, scale-, and rotation-invariant recognition and needed less learning time than other neural-network designs such as the multilayer perceptron.
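The text does not say how the constant-intensity regions were found. One simple possibility, assumed here, is to quantize the gray levels into a few bands and produce one binary image per band, so that each binary image marks the pixels that share roughly the same intensity. The function name and the default of four bands are hypothetical.

```python
def intensity_level_masks(image, levels=4, max_value=255):
    """Split a grayscale image (list of rows) into one binary image per intensity band."""
    band = (max_value + 1) / levels
    masks = [[[0] * len(row) for row in image] for _ in range(levels)]
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            level = min(int(value // band), levels - 1)  # which band this pixel falls in
            masks[level][y][x] = 1
    return masks
```

Every pixel lands in exactly one mask, so the set of binary images together still describes the whole face.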

Question of invariance

At the University of Wollongong, Ming Zhang has addressed the problem of invariance by presenting the system with shifted and rotated versions of each face in two dimensions. After training, the ANN recognizes faces that have been shifted and rotated in two dimensions.
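A minimal sketch of building such a training set, assuming faces are stored as small 2-D pixel arrays. To keep the code short, rotations are limited to 90-degree steps and shifts are zero-padded; a practical system would also need intermediate angles. All function names are hypothetical.

```python
def shift(image, dx, dy, fill=0):
    """Translate a 2-D image (list of rows) by (dx, dy), padding vacated pixels with fill."""
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = x - dx, y - dy
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = image[sy][sx]
    return out

def rotate90(image):
    """Rotate a 2-D image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augmented_views(image, max_shift=1):
    """Every shift within +/- max_shift pixels, each in all four 90-degree orientations."""
    views = []
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            view = shift(image, dx, dy)
            for _ in range(4):
                views.append(view)
                view = rotate90(view)
    return views
```

With `max_shift=1`, each face yields 9 shifted copies in 4 orientations, or 36 training views.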

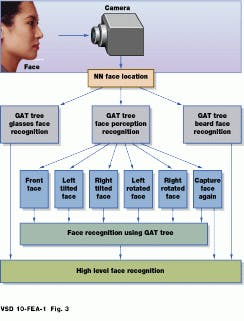

This face-recognition system does not rely on high-order neural networks alone. Zhang's system makes use of ANN group-based adaptive tolerance (GAT) trees. Each node of a GAT tree comprises a group of neural networks that classify a particular characteristic of the face, and the complete tree acts as a complex pattern classifier.

Using GAT trees, Zhang has developed a face-recognition system specifically for airport security (see Fig. 3). After images are captured, faces are located within them using neural-network techniques. The GAT tree is then used for face perception and recognition.

At the first level of the GAT tree structure, the neural network is trained to recognize whether or not a face is presented straight on. If it is not, the second-level neural network in the tree attempts to determine whether the face is tilted, rotated, or turned in another direction. At the third level, a node distinguishes between faces tilted to the right and to the left. If this initial classification fails, the GAT tree requests the system to capture the face a second time.
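The internals of Zhang's networks are not described here, so the following is only a sketch of the control flow just outlined, under stated assumptions: each node's "group of neural networks" is represented by plain functions that vote, the node's decision is a strict majority, and a node that cannot reach a decision signals that the face should be captured again. The classes, labels, voting rule, and pose features are all hypothetical.

```python
from collections import Counter

class GroupNode:
    """A tree node holding a group of classifiers that vote on one facial characteristic."""

    def __init__(self, members, children=None):
        self.members = list(members)            # callables: features -> label
        self.children = dict(children or {})    # label -> GroupNode at the next level

    def decide(self, features):
        """Return the majority label, or None if the group cannot agree."""
        votes = Counter(member(features) for member in self.members)
        label, count = votes.most_common(1)[0]
        return label if 2 * count > len(self.members) else None

def classify(root, features):
    """Walk down the tree; return the list of labels, or None to request a recapture."""
    path, node = [], root
    while node is not None:
        label = node.decide(features)
        if label is None:
            return None
        path.append(label)
        node = node.children.get(label)
    return path

# Hypothetical stand-ins for trained networks, reading made-up pose features in degrees
def is_frontal(f):
    return "frontal" if f["tilt"] == 0 and f["turn"] == 0 else "not frontal"

def pose_type(f):
    return "tilted" if f["tilt"] != 0 else "rotated"

def tilt_side(f):
    return "left" if f["tilt"] < 0 else "right"

tilt_node = GroupNode([tilt_side] * 3)
pose_node = GroupNode([pose_type] * 3, {"tilted": tilt_node})
root = GroupNode([is_frontal] * 3, {"not frontal": pose_node})
```

In a real node the members would be differently trained networks, so their votes could disagree; here identical stand-ins keep the example easy to follow.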

A higher level of the system uses neural-network training databases, face databases, rule bases, knowledge bases, and reasoning networks to perform more intelligent recognition. So far, results have been good. In a laboratory simulation, the GAT-tree-based recognition system made no mistakes in recognizing 80 facial images (10 different views of eight people) out of 780 different faces (10 different views of 78 people).

Because of the diversity of approaches used, it is difficult to compare ANN-based systems. But the result that all system developers are striving for is 100% recognition under all circumstances.

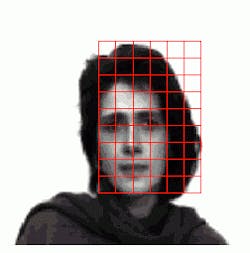

ZN GmbH (Bochum, Germany) has developed a face-recognition system for ATMs dubbed ZN Face, which uses a combination of wavelet-matching and neural networks to achieve face recognition. In operation, the PC-based system captures images and uses an elastic graph-matching algorithm to store face images as flexible graphs or grids (see Fig. 4). Facial features are obtained by convolving images with Gabor wavelets to produce graphical representations of facial features that can be correlated with unknown faces.
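ZN's own algorithm is not given here. The following minimal sketch shows the two ingredients named above, assuming the Gabor-jet formulation common in the elastic-graph-matching literature: each graph node stores a "jet" of Gabor filter response magnitudes, and two faces are compared by the average normalized similarity of their jets. The kernel parameters, node grid, and function names are assumptions. The elastic step, in which node positions are moved to maximize similarity, is omitted.

```python
import cmath
import math

SIGMA = 2 * math.pi  # envelope width relative to the wavelength (assumed value)

def gabor(dx, dy, k, theta):
    """Complex Gabor kernel value at offset (dx, dy) for wave number k and orientation theta."""
    kx, ky = k * math.cos(theta), k * math.sin(theta)
    k2 = k * k
    envelope = (k2 / SIGMA ** 2) * math.exp(-k2 * (dx * dx + dy * dy) / (2 * SIGMA ** 2))
    # Subtracting exp(-SIGMA**2 / 2) removes the kernel's response to uniform brightness
    wave = cmath.exp(1j * (kx * dx + ky * dy)) - math.exp(-SIGMA ** 2 / 2)
    return envelope * wave

def jet(image, x, y, wave_numbers=(math.pi / 2, math.pi / (2 * math.sqrt(2))),
        orientations=4, radius=8):
    """Magnitudes of Gabor filter responses at pixel (x, y): one 'jet' of features."""
    h, w = len(image), len(image[0])
    coefficients = []
    for k in wave_numbers:
        for o in range(orientations):
            theta = math.pi * o / orientations
            response = 0j
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    px, py = x + dx, y + dy
                    if 0 <= px < w and 0 <= py < h:  # ignore offsets outside the image
                        response += image[py][px] * gabor(dx, dy, k, theta)
            coefficients.append(abs(response))
    return coefficients

def jet_similarity(a, b):
    """Normalized dot product of jet magnitudes (1.0 for proportional jets)."""
    num = sum(p * q for p, q in zip(a, b))
    den = math.sqrt(sum(p * p for p in a) * sum(q * q for q in b))
    return num / den if den else 0.0

def graph_similarity(image_a, image_b, nodes):
    """Average jet similarity over the nodes of a fixed (non-elastic) grid."""
    total = sum(jet_similarity(jet(image_a, x, y), jet(image_b, x, y)) for x, y in nodes)
    return total / len(nodes)
```

Because the similarity is normalized, doubling the contrast of an image scales every jet coefficient by the same factor and leaves the score unchanged.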

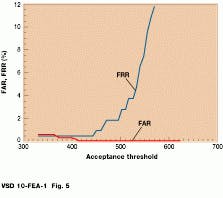

According to Stefan Fuhrmann of ZN, research into face-recognition algorithms should not concentrate only on the false rejection rate (FRR), which determines the recognition rate that the algorithm achieves. Often, a more important consideration is the false acceptance rate (FAR), or how often the system wrongly accepts an individual whose image is not in the database (see Fig. 5). Of course, there is a trade-off between these error types; consequently, it is more difficult to build a system with a high recognition rate and a low FAR than a system with a high recognition rate alone.

ZN Face balances the FAR and FRR. By using a simple neural network, the system can optimally discriminate between acceptance and rejection by simultaneously minimizing FAR and FRR. In the verification process, the acceptance value is compared against a threshold, and the user can shift this acceptance threshold in the direction of a lower FAR at the expense of a higher FRR.
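The trade-off can be seen by sweeping the threshold over a set of match scores. The scores below are invented for illustration, and the rule that a score at or above the threshold is accepted is an assumption; the code does not model ZN's neural network.

```python
def error_rates(genuine, impostor, threshold):
    """FAR and FRR at one acceptance threshold (scores >= threshold are accepted)."""
    far = sum(score >= threshold for score in impostor) / len(impostor)
    frr = sum(score < threshold for score in genuine) / len(genuine)
    return far, frr

# Hypothetical acceptance values: enrolled users (genuine) and outsiders (impostor)
GENUINE = [0.9, 0.85, 0.8, 0.7, 0.6]
IMPOSTOR = [0.2, 0.3, 0.4, 0.55, 0.65]

for t in (0.5, 0.62, 0.7):
    far, frr = error_rates(GENUINE, IMPOSTOR, t)
    print(f"threshold {t:.2f}: FAR {far:.1f}, FRR {frr:.1f}")
# threshold 0.50: FAR 0.4, FRR 0.0
# threshold 0.62: FAR 0.2, FRR 0.2
# threshold 0.70: FAR 0.0, FRR 0.2
```

Raising the threshold moves the operating point toward a lower FAR at the cost of a higher FRR, which is the adjustment the text describes.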

No single solution

At present, an increasing number of face-recognition systems are being built. In the future, it is unlikely that one neural-network solution will cover all the recognition application bases. More likely, specific neural networks, and the algorithms running on them, will be tailored to particular price/performance requirements.

One problem that has not been solved is discriminating between identical twins. When such systems proliferate, many potentially angry customers could be waiting in line to ask their bank managers how their twin managed to use their VISA card for so long.

Further Reading

O. A. Uwechue et al., SPIE Vol. 2568, 255 (1996).

M. Zhang et al., IEEE Transactions on Neural Networks 7(3), 555 (May 1996).

Dave Wilson is a science writer based in London, England.

Face-recognition systems can be developed using off-the-shelf CCD cameras, frame grabbers, PCs, and neural-network software.

FIGURE 1. Face-recognition packages can compare captured images from PC-based systems with a database or library of facial images. Although captured images may differ slightly, such systems have a high likelihood of correct recognition.

FIGURE 2. Third-order neural networks can be used to achieve translation, scale, and rotation-invariant recognition faster than other neural-network architectures (Courtesy SPIE).

FIGURE 3. At the University of Wollongong, a face-recognition system has been built using artificial-neural-network group-based adaptive tolerance (GAT) trees or groups of neural networks that function as a complex pattern classifier. Each node's neural network classifies a particular characteristic of the face, and the complete system acts as a pattern classifier (Courtesy IEEE).

FIGURE 4. Elastic graph matching stores faces as graphs or grids. To obtain features from these grids, Gabor wavelets are convolved with the images to produce feature data about the image. These data can then be used to pattern-match unknown and known image data sets.

FIGURE 5. While the false rejection rate (FRR) determines the recognition rate achieved by the algorithm, the false acceptance rate (FAR) shows how often the system wrongly accepts persons whose images are not in the database.