Researchers field an intraoperative display system to navigate the brain

Patients with seizures can now be assured of more precise neurosurgery thanks to the marriage of computer graphics and imaging.

By R. Winn Hardin, Contributing Editor

Uncontrolled seizures can become a way of life for many people. And, in cases where drugs cannot break the cycle of atypical brain activity, neurosurgery represents the best chance for patients to experience normal lives.

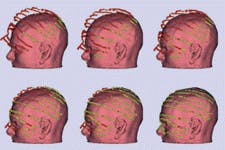

FIGURE 1. Series of video cameras and a laser scanning device, developed at MIT, provide 3-D tracking of a patient's head during neurosurgery, enabling the surgeon to visually navigate through the brain and hidden tissue using 3-D model overlay on real-time video (left). The MIT neuroimaging system overlays, tracks, and registers 3-D models of internal brain structures onto real-world video feeds taken during an operation.

Traditionally, this surgery is conducted in two steps. First a grid of electrodes is placed directly onto the brain to monitor which areas of the organ are acting abnormally, and the results are recorded on an electroencephalograph (EEG). Once the foci are identified, the physician cuts the neural pathways from the foci to the surrounding brain tissue. In many cases, magnetic resonance imaging (MRI) or computed tomography (CT) is used in conjunction with the EEG for preoperative surgical planning.

To register MRI three-dimensional (3-D) models with real-time video of a patient's head for navigation during surgery, imaging systems bring together 3-D graphics and video cameras. Such a system, developed at the Massachusetts Institute of Technology (MIT; Cambridge, MA) in cooperation with Brigham and Women's Hospital (Boston, MA), has undergone more than 100 trials during neurosurgery, a dozen of them for the treatment of epilepsy in children.

With MIT's augmented reality imaging system, MRI models are overlaid on top of real-time video showing the surgeon's hands and instruments in relation to hidden tissues within the brain (see Fig. 1). According to Michael Leventon of MIT, this allows surgeons to preplan each step of surgery and results in faster surgical procedures, reduced medical costs, and more-effective treatment.

MRI preparation

Before the MIT imaging system is used, MRI scans from a 1.5-Tesla MRI unit from GE Medical Systems (Waukesha, WI) are imported into a SPARC 20 workstation from Sun Microsystems (Mountain View, CA). The MRI system at Brigham and Women's Hospital uses an automated segmentation technique that views each pixel's intensity within the spatial context of the brain and compares it to a database of anatomical structures. Pixel by pixel, the two-dimensional (2-D) image is tagged with various tissue types. In the case of tumors, physicians oversee the segmentation process and highlight lesions from their surrounding tissue.

FIGURE 2. MIT laser scanner system provides the mechanisms for triangulating the 3-D position of a patient's head. The associated Pulnix camera captures the operation while providing imagery data for tracking specially designed probes that allow the surgeon to identify hidden tissues below the brain's surface. The FlashPoint optical digitizer system tracks the patient's head in 3-D space through the use of the triangular LED probe attached to a cranial clamp. The Ultra-10 workstation provides display and control of the system. By collecting data from the FlashPoint system, which tracks movements of the patient's head during surgery, the Ultra-10 can adjust the MRI 3-D model overlay accordingly, enabling the surgeon to identify hidden structures within the brain to within 1-mm accuracy even when the patient's head has moved in response to the surgical procedure.

Once segmentation is complete, 2-D images are stacked to form a 3-D model. To do so, the workstation runs a marching-cubes algorithm that generates a set of connected triangles to represent the 3-D surface for each segmented structure (see Reference). These surfaces are then displayed by selecting a "virtual" viewing camera location and orientation in the MRI coordinate frame.

This rendering process removes hidden portions of the surface, shades the surface according to its local normal, and can vary the surface opacity to allow glimpses of internal structures. Once this model is complete, it is transmitted to a Sun Ultra-10 workstation to control the intraoperative navigational system.
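
Shading a surface according to its local normal can be illustrated with a short sketch. The article does not name the shading model used, so the Lambertian (cosine) model below is an assumption, and the function names `triangle_normal` and `lambert_shade` are hypothetical.

```python
import math


def triangle_normal(a, b, c):
    """Unit normal of the triangle (a, b, c), using counterclockwise winding."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = [
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    ]
    length = math.sqrt(sum(x * x for x in n))
    return [x / length for x in n]


def lambert_shade(normal, to_light):
    """Brightness in [0, 1]: cosine of the angle between normal and light direction.

    Assumed Lambertian model; surfaces facing away from the light get 0.
    """
    length = math.sqrt(sum(x * x for x in to_light))
    cos_angle = sum(n * l for n, l in zip(normal, to_light)) / length
    return max(0.0, cos_angle)


# Hypothetical triangle lying in the z = 0 plane, lit from two directions.
normal = triangle_normal([0, 0, 0], [1, 0, 0], [0, 1, 0])
facing = lambert_shade(normal, [0, 0, 1])    # light directly along the normal
oblique = lambert_shade(normal, [0, 1, 1])   # light 45 degrees off the normal
```

A triangle facing the light receives full brightness, while one tilted 45 degrees receives about 71 percent. Varying opacity, as the article describes, would be a separate blending step applied after shading.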

Running with a Sun Creator 3D graphics accelerator card and LAVA 3D-display software, the Ultra-10 workstation provides the user interface and controls the navigational display. According to Leventon, the majority of the computation is performed in C/C++, while a user interface written in Tcl/Tk from Kitware (Clifton Park, NY) manipulates anatomical models and generates the 3-D display.

Fusing modalities

Once the model from the MRI is completed and transmitted to the Sun Ultra-10 that runs the imaging system, the surgical procedure can begin. The system consists of a portable cart containing a Sun Ultra-10 workstation and the hardware to drive an optical digitizer from FlashPoint (San Jose, CA) with three linear CCD arrays, a video camera from Pulnix (Sunnyvale, CA), and a laser scanner from MIT. The joint between the articulated arm and scanning bar has three degrees of freedom to allow easy placement of the bar over the patient (see Fig. 2).

FIGURE 3. Before an overlay of MRI 3-D modeling can be performed on the real-world video feed, the patient's head position must be determined in real-world coordinates. First, the MIT laser scanner scans the operational area. The operation is captured by the Pulnix camera and displayed on the Sun Ultra-10 workstation. The operator then uses the mouse to select the region of interest and directs the program to erase all laser points outside that area. Three-dimensional coordinates are determined for the laser points in the region of interest and then a two-step algorithm brings the model data into registration with the video feed. Green points indicate less than 1-mm registration between the MRI and video in real-world coordinates. Once the MRI model and video feed are registered in real-world coordinates, any part of the MRI model, including the skin, can be displayed at the same time as the video overlay.

Before surgery, the cart is rolled to the operating table, with the articulating arm approximately 1.5 m (4.92 ft) above the table. Prior to surgery, the laser scanner is calibrated by scanning an object with known dimensions. Control electronics for the laser scanner and stepper motor are connected to the workstation through a serial port that constantly feeds motor position data into the computer. The 768 x 484-pixel color progressive scan video camera is attached to the workstation through a Sun Video Plus frame grabber that captures the image of the scanned laser plane as it reflects off of the calibration object. By knowing the dimensions of the calibration object, the Ultra-10 triangulates the position of the laser and video camera in real-world coordinates. Once the arm is in position over the table, the frame grabber captures a picture of the table and surrounding environment for later reference.
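
The triangulation described above can be pictured as a ray-plane intersection: once calibration has fixed the camera and the laser plane in real-world coordinates, each pixel lit by the laser defines a viewing ray, and the 3-D surface point lies where that ray meets the laser plane. The sketch below is a minimal illustration under that assumption, not the MIT implementation; the function name and the example geometry are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def intersect_ray_plane(origin, direction, plane_point, plane_normal):
    """Return the 3-D point where a camera ray meets the laser plane.

    origin, direction: camera center and viewing ray through a lit pixel.
    plane_point, plane_normal: any point on the laser plane and its normal.
    Returns None if the ray is parallel to the plane or points away from it.
    """
    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-12:
        return None
    diff = [p - o for p, o in zip(plane_point, origin)]
    t = dot(diff, plane_normal) / denom
    if t < 0:
        return None
    return [o + t * d for o, d in zip(origin, direction)]


# Hypothetical geometry in meters: camera 1.5 m above the table looking down,
# laser plane vertical at x = 0.2.
camera_center = [0.0, 0.0, 1.5]
ray = [0.2, 0.0, -1.0]
point = intersect_ray_plane(camera_center, ray, [0.2, 0.0, 0.0], [1.0, 0.0, 0.0])
```

In this example the ray meets the laser plane 0.5 m above the table. Repeating the calculation for every lit pixel at every stepper-motor position would yield a cloud of surface points.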

At this point, the patient is placed on the table and a triangular LED marker is attached to a clamp affixed to the patient's head. The flashing light from the LED marker is constantly tracked by the FlashPoint system through the three linear-array CCDs. A pair of 1k x 1k linear arrays is positioned along the axis of the arm, while the third array is orthogonal. The arrays, operating at 30 frames/s, are connected to three separate FlashPoint digital-signal processing boards in a 486 computer. A fourth FlashPoint board triggers the triangular LED marker attached to the patient's head.

FIGURE 4. Pulnix camera tracks a probe with attached LEDs. Because the system knows the length of the probe and the location of the LEDs in real-world coordinates, it can extrapolate the position of the probe tip, even when hidden by tissue. The surgeon's navigation window can show the real-time video (top), probe location within the MRI 3-D model (bottom), and tip location in sagittal and axial MRI slices (right).

By detecting the position of all three LEDs in the array along both axes, the computer constantly determines the position of the patient's head in real time and feeds it via a serial cable to the workstation. If the patient should move during the surgery, the change in the positions of the LEDs at each corner of the triangle will allow the Ultra-10 to calculate new reference points for the MRI model overlay (see Fig. 3).
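
One way to see how three LED positions can update the overlay is to build a coordinate frame from the triangle, store each anatomical point relative to that frame, and re-express it in world coordinates after the triangle moves. The sketch below assumes a rigid attachment between the marker and the head; all function names and coordinates are hypothetical and do not describe the FlashPoint or MIT software.

```python
import math


def sub(a, b):
    return [x - y for x, y in zip(a, b)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def unit(a):
    n = math.sqrt(dot(a, a))
    return [x / n for x in a]


def marker_frame(p0, p1, p2):
    """Origin and orthonormal axes built from three non-collinear LED positions."""
    x = unit(sub(p1, p0))
    z = unit(cross(x, sub(p2, p0)))
    y = cross(z, x)
    return p0, (x, y, z)


def to_local(point, frame):
    """Express a world-space point in marker coordinates."""
    origin, axes = frame
    d = sub(point, origin)
    return [dot(d, axis) for axis in axes]


def to_world(local, frame):
    """Express a marker-space point in world coordinates."""
    origin, axes = frame
    return [origin[i] + sum(c * axis[i] for c, axis in zip(local, axes))
            for i in range(3)]


# Hypothetical LED positions (meters) before and after the head moves:
# a 90-degree turn about the vertical axis plus a small shift.
before = marker_frame([0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.0, 0.1, 0.0])
after = marker_frame([0.01, 0.02, 0.0], [0.01, 0.12, 0.0], [-0.09, 0.02, 0.0])

target_before = [0.05, 0.05, -0.08]            # a model point fixed to the head
target_after = to_world(to_local(target_before, before), after)
```

Because the target is stored relative to the marker, its new world position follows directly from the new LED positions, with no need to rescan the patient.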

Next, the laser scanner begins to scan the operational area surrounding the patient's head. From these pictures, the system subtracts the original background image from each frame, leaving only those portions of the picture that have changed, namely the patient and the laser lines. The Ultra-10 workstation triangulates the real-world coordinates for the patient's head based on the known positions of the camera and scanner and the contours of the reflected laser light. Once the patient's head, skin, and features are located in real-world space, the operator roughly aligns the MRI model with the real-time video picture of the patient's head. After rough alignment, the computer evaluates the alignment for submillimeter precision. By matching the model coordinates with the real-world coordinates of the patient, a relationship is constructed whereby real-world probes and instruments can be displayed in model coordinates.
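
The background-subtraction step can be sketched in a few lines. The example below treats frames as small grids of gray levels and keeps only pixels that differ from the stored reference image by more than a threshold. The threshold value and the function name are assumptions made for illustration; the article does not say how the actual system decides that a pixel has changed.

```python
def changed_pixels(background, frame, threshold=20):
    """Return (row, col) for pixels that differ from the reference image.

    background, frame: equal-sized grids (lists of rows) of gray levels.
    threshold: assumed minimum gray-level change that counts as real,
    so that small sensor noise is ignored.
    """
    changed = []
    for r, (bg_row, fr_row) in enumerate(zip(background, frame)):
        for c, (b, f) in enumerate(zip(bg_row, fr_row)):
            if abs(f - b) > threshold:
                changed.append((r, c))
    return changed


# Hypothetical 3 x 4 frames: one bright laser pixel plus slight noise.
reference = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]
current = [
    [12, 10, 10, 10],   # noise, below threshold
    [10, 10, 250, 10],  # laser line reflecting off the patient
    [10, 10, 10, 9],
]
laser_pixels = changed_pixels(reference, current)
```

Here only the pixel at row 1, column 2 survives, which is the kind of sparse result that would then be passed to triangulation.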

Intraoperative navigation

Although the overhead Pulnix camera shows the surgeon's hands and instruments, a camera alone cannot allow the surgeon to see where a probe is located inside the brain. For this task, a special LED probe developed by FlashPoint makes use of the relationship between model and real-world coordinates. The probe has two LEDs located along its length, with the probe tip at a set distance from these two lights. By converting their position to model coordinates, the probe's position inside the brain can be revealed in real time on the surgical navigation display.
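
Extrapolating a hidden tip from two visible LEDs is a matter of extending the line through them by a known distance. The sketch below assumes the tip lies on that line, beyond the LED nearer the tip; the function name, the offset, and the coordinates are hypothetical.

```python
import math


def probe_tip(led_rear, led_front, tip_offset):
    """Extrapolate the probe tip along the line through both LEDs.

    led_rear, led_front: tracked 3-D LED positions, front being nearer the tip.
    tip_offset: known distance from the front LED to the tip.
    """
    axis = [f - r for f, r in zip(led_front, led_rear)]
    length = math.sqrt(sum(a * a for a in axis))
    if length == 0:
        raise ValueError("LED positions coincide; probe direction is undefined")
    return [f + tip_offset * a / length for f, a in zip(led_front, axis)]


# Hypothetical probe held vertically (meters): LEDs at heights 0.30 and 0.20,
# tip 0.12 beyond the front LED.
tip = probe_tip([0.0, 0.0, 0.30], [0.0, 0.0, 0.20], 0.12)
```

In this example the tip lands at a height of about 0.08 m. Applying the registration relationship to that point would then place it in model coordinates for display.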

According to MIT researchers, the intraoperative surgical navigator has performed well. An early analysis indicates that use of the system could reduce the cost of neurosurgical operations by $1000 to $5000 per operation, mainly due to reduced operating times. Leventon adds that the system could benefit from several enhancements, including the use of two cameras during the registration/alignment step to get a larger picture of the patient's face.

Facial features such as eyes, nose, and mouth are helpful factors when registering the MRI model to the real-time video. A second camera would also allow cranial scanning during the procedure, permitting the system to adjust to brain swelling that naturally occurs when the skullcap is removed. Currently, swelling can introduce coordinate errors of 1 to 2 mm. Also, the current system requires the surgeon to look away from the patient when exploring tissue below the surface. Flat-panel displays that could swivel into the surgeon's field of view or headset displays would help avoid this, Leventon says. By incorporating a head tracking system for the surgeon into these displays, parallax could also be eliminated.

REFERENCE

- W. E. Lorensen and H. E. Cline, Computer Graphics 21(3), 163 (March 1987).

Company Information

FlashPoint

San Jose, CA 95112

(408) 795-4900

Fax: (408) 795-5050

Web: www.FlashPoint.com

GE Medical Systems

Waukesha, WI 53188

(414) 544-3011

Fax: (414) 544-3384

Web: www.ge.com/medical

Kitware

Clifton Park, NY 12065

(518) 371-3971

Fax: (518) 371-3971

Web: www.kitware.com

MIT

Department of Electrical Engineering and Computer Science

Cambridge, MA 02139-4307

(617) 253-4600

Fax: (617) 258-7354

Web: www-eecs.mit.edu/

Pulnix America

Sunnyvale, CA 94089

(408) 747-0300

Fax: (408) 747-0880

Web: www.pulnix.com

Sun Microsystems

Palo Alto, CA 94303

(650) 960-1300

Fax: (650) 969-9131

Web: www.sun.com