Precision Agriculture: AI-Enabled Embedded Vision Systems Automate Weeding

What You Will Learn

· Companies like John Deere, Dimensions Agri Technologies, Aigen, and Carbon Robotics are using AI and machine vision to develop precision weeding systems that reduce or eliminate herbicide use, improving efficiency, sustainability, and profitability in agriculture. Some also incorporate vision-guided robotics.

· The embedded vision solutions rely on deep learning to analyze images quickly and accurately enough to be useful in the field.

· Despite the promise of these technologies, adoption remains limited (only 27% of U.S. farms use precision agriculture tools) due to high costs, data privacy concerns, and limited equipment interoperability. But experts expect adoption to increase over time in reaction to rising input costs, environmental concerns, and evolving pest pressures.

Whether it’s a small farm or large-scale agricultural operation, controlling weeds is a necessary but expensive and time-consuming task that often produces mixed results.

Viewing this problem as an opportunity, numerous innovators—from industry heavyweights to startups—have developed solutions using machine vision and other advanced technologies to improve weeding efficiency. The solutions include methods to deliver herbicides more precisely, deploy mechanical weeders, or attack weeds with alternative technologies such as lasers. Some involve the use of vision-guided robotics.

Nonetheless, this type of technology is not yet common in farming. In a January 2024 report [1], the U.S. Government Accountability Office (GAO; Washington, DC, USA), citing data from the U.S. Department of Agriculture, said that only 27% of U.S. farms use precision agriculture technologies to improve the efficiency of weeding or other activities such as yield monitoring and crop fertilizing.

There are several challenges to widespread adoption of precision agriculture: high acquisition costs; lack of interoperability standards, which can hinder integration among different technologies; and concerns about sharing farm data or ownership of the data, according to the GAO report.

Cost Pressures Driving Adoption of Precision Agriculture

However, significant cost pressures may push farmers to embrace precision agriculture, according to a 2023 report [2] from McKinsey & Company (New York, NY, USA). The management consultancy cites cost increases of 9% to 71% over the “last few years” through 2023 for inputs such as fertilizer, insurance, and crop protection. In addition, severe weather events and new invasive pests put pressure on crop yields and thus on profitability.

As David Mulder, product marketing manager at Deere & Company (Moline, IL, USA), which markets itself as John Deere, explains, “Let's face it, our commodity prices aren't doubling or tripling, but it seems like everything else is. So, we have got to help our customers manage their costs.”

While there are many farming tasks that lend themselves to automation, weeding is ripe for new approaches. The idea is to use deep learning-enabled vision systems to reduce or eliminate the use of herbicides in commercial agriculture.

Detecting Weeds with Embedded Vision and Deep Learning

“These systems are often deployed on embedded platforms to enable real-time weed detection and classification directly in the field. Cameras, typically mounted on agricultural vehicles, continuously scan crop rows to detect and differentiate weeds from crops. Depending on the farm layout, either a single global shutter camera or a multi-camera setup can be used to monitor multiple rows simultaneously. Global shutter sensors are preferred for their ability to capture fast-moving scenes without distortion, a crucial feature when operating on moving machinery,” explains Ram Prasad, head of the industrial and surveillance camera business unit at e-con Systems (Fremont, CA, USA), a developer of industrial cameras.
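
To see why the shutter type matters on moving machinery, consider a rough estimate of rolling-shutter distortion. A rolling-shutter sensor reads its rows one after another, so ground that moves during readout appears displaced between the first and last rows; a global shutter exposes all rows at once. The sketch below is a back-of-the-envelope model, and the speed, readout time, and ground sampling distance in it are illustrative assumptions, not figures from e-con Systems or any vendor.

```python
def rolling_shutter_shift_px(speed_m_s: float, readout_s: float, gsd_m_per_px: float) -> float:
    """Approximate displacement, in pixels, between the first and last sensor
    rows when the ground moves at speed_m_s during one full-frame readout.

    A global shutter exposes every row at the same instant, so this
    readout-induced distortion is essentially zero for global shutter sensors.
    """
    if gsd_m_per_px <= 0:
        raise ValueError("ground sampling distance must be positive")
    shift_m = speed_m_s * readout_s  # ground distance covered during readout
    return shift_m / gsd_m_per_px    # metres on the ground -> pixels


if __name__ == "__main__":
    # Illustrative values only (not from the article): about 20 km/h travel,
    # a 20 ms rolling readout, and 1 mm of ground per pixel.
    speed = 20 / 3.6
    print(f"{rolling_shutter_shift_px(speed, 0.020, 0.001):.0f} px")
```

Under these assumptions a weed would be smeared or shifted by roughly a hundred pixels, far larger than a small seedling, which is the practical argument for global shutter sensors on sprayers.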

The commercial weeding machines can be self-propelled, mounted to the front of a tractor, or pulled behind a tractor.

The goal is not only to improve efficiency and crop yields but also to curb super weeds, which have evolved resistance to commonly used herbicides. Of course, any reduction in herbicide use also benefits the environment.

Dimensions Agri Technologies Develops Embedded Vision System

In addition to John Deere, another innovator is Dimensions Agri Technologies (Ski, Norway), which developed DAT Ecopatch, an edge-based vision system that uses deep learning algorithms and camera technology to find weeds and then target them with herbicide. The solution is designed to integrate with many brands of commercial spraying systems.

Founded in 1999, the company began work on precision spraying of weeds in 2015 with hard-coded algorithms and more recently focused on AI-based algorithms in addition to traditional vision models. The commercial kickoff of DAT Ecopatch was in 2021. “Now we’re in the process of scaling up and starting on new geographies in Europe,” explains Erlend Kaurstad Morthen, chief technology officer for Dimensions Agri Technologies.

In addition, Kverneland Group (Klepp, Norway), a manufacturer of farm equipment, installs the Ecopatch on its self-propelled sprayers and mentions the capability on its website.

Withstanding Harsh Outdoor Environments

The system includes a camera, lens, circuit board, and LEDs inside a weatherproof enclosure that is attached to the boom of a sprayer. A typical setup includes between 6 and 12 of these units mounted at regular intervals along the boom. The cameras are connected to a processing unit, also located on the sprayer, which analyzes the images using AI-enabled algorithms and then signals the nozzles when to spray over ISOBUS (ISO 11783), a communications interface standard for agricultural equipment.
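
One step in such a pipeline is mapping a detection in a particular camera's image to the nozzle that covers that spot on the ground. The sketch below is hypothetical: it assumes evenly spaced cameras that each see an equal strip of the boom, evenly spaced nozzles, and an assumed image width in the range of a 5 MPixel sensor. It is not Dimensions Agri Technologies' code, and it stops short of building the ISOBUS message itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoomLayout:
    """Hypothetical boom geometry: cameras evenly spaced, each covering an equal
    lateral strip, and nozzles evenly spaced across the full boom width."""
    boom_width_m: float
    num_cameras: int
    image_width_px: int
    nozzle_spacing_m: float

    def lateral_position_m(self, camera_idx: int, pixel_x: float) -> float:
        """Convert a detection's pixel column in one camera to metres from the boom's left end."""
        strip_m = self.boom_width_m / self.num_cameras
        return camera_idx * strip_m + (pixel_x / self.image_width_px) * strip_m

    def nozzle_for(self, camera_idx: int, pixel_x: float) -> int:
        """Index of the nozzle whose spray band covers the detection."""
        if not 0 <= camera_idx < self.num_cameras:
            raise ValueError("camera index out of range")
        num_nozzles = round(self.boom_width_m / self.nozzle_spacing_m)
        idx = int(self.lateral_position_m(camera_idx, pixel_x) // self.nozzle_spacing_m)
        return min(idx, num_nozzles - 1)  # clamp detections at the far right edge


if __name__ == "__main__":
    # Assumed values: 36 m boom, 12 cameras, 2,448-px-wide images, 0.5 m nozzle spacing.
    layout = BoomLayout(boom_width_m=36.0, num_cameras=12, image_width_px=2448, nozzle_spacing_m=0.5)
    print(layout.nozzle_for(camera_idx=3, pixel_x=1224))
```

A real system would also have to account for lens distortion, overlapping camera views, and boom sway, which this flat, linear mapping ignores.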

The camera inside each enclosure is from LUCID Vision Labs (Richmond, BC, Canada). It is a Phoenix PHX051S-CC camera with Sony’s IMX568 5 MPixel sensor. The lens is from VS Technology (Tokyo, Japan).

The system not only distinguishes weeds from crops but also decides if herbicide use is warranted. “For most weeds, it actually makes sense to not spray if you do not have high weed density. If you have a little bit of weeds, it doesn’t damage your yield. If you keep on year after year applying the same chemicals, well, the survivors will over time be resistant to that chemical,” Kaurstad Morthen says.

The Ecopatch system uses a combination of deep learning models for image analysis and hard-coded algorithms to determine whether to spray. The hard-coded model is based on a cost-versus-benefit analysis that considers weed density and other factors.
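
The article does not disclose the Ecopatch cost-versus-benefit rule, so the following is only an illustration of what a density-based economic threshold can look like. It swaps in the Cousens rectangular-hyperbola yield-loss model from weed science, and the crop value, treatment cost, and model parameters are all hypothetical.

```python
def cousens_yield_loss_pct(density: float, i: float, a: float) -> float:
    """Cousens yield-loss model (rectangular hyperbola).

    density: weeds per square metre
    i: percent yield loss per weed as density approaches zero
    a: maximum percent yield loss at very high density
    """
    return (i * density) / (1 + i * density / a)


def should_spray_patch(density: float, crop_value_per_m2: float, spray_cost_per_m2: float,
                       i: float = 1.0, a: float = 50.0) -> bool:
    """Spray only when the expected value of yield protected exceeds the cost of
    treating the patch. Parameter defaults are illustrative, not calibrated."""
    expected_loss = crop_value_per_m2 * cousens_yield_loss_pct(density, i, a) / 100
    return expected_loss > spray_cost_per_m2


if __name__ == "__main__":
    # Hypothetical economics: crop worth 0.20 per m^2, treatment costs 0.004 per m^2.
    for weeds_per_m2 in (0.1, 1.0, 5.0):
        print(weeds_per_m2, should_spray_patch(weeds_per_m2, 0.20, 0.004))
```

This captures the point Kaurstad Morthen makes: at low weed density, the expected yield loss can be smaller than the cost of spraying, so leaving the patch alone is the rational choice. A production rule would also weigh factors such as resistance management, which this sketch omits.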

John Deere’s Approach to Precision Weeding

Industry heavyweight John Deere also has developed solutions to cut down on herbicide use.

The company’s See & Spray line includes several models that incorporate machine vision and machine learning, with separate algorithms for identifying corn, soybean, and cotton. The models include:

- See & Spray Ultimate, which was introduced in 2022 and detects weeds among soybean, cotton, and corn plants. It is a self-propelled sprayer with two tanks for herbicides, allowing farmers to do both targeted and broadcast applications at the same time.

- See & Spray Premium, which is available as an upgrade kit that can be installed on some sprayers (model years 2018-2024 R-series and 400/600 series) with a 120-ft steel boom. It also is available factory-installed on select Hagie and 400/600 series sprayers.

To capture images of a field, these versions of See & Spray use 36 industrial cameras spaced 1 m apart on a 120-ft sprayer boom. Together, the cameras scan more than 2,000 square feet per second, using stereoscopic imaging to create 3D point clouds from 2D images.
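
Two pieces of arithmetic help put these figures in context. First, if the scan rate equals boom width times forward speed (an assumption; the article gives only the area rate), 2,000 square feet per second over a 120-ft boom implies a ground speed of about 11 mph. Second, stereoscopic depth is commonly recovered by textbook triangulation on a rectified camera pair, Z = f·B/d. John Deere has not published its stereo pipeline, so the focal length, baseline, and disparity below are hypothetical.

```python
FT_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def implied_ground_speed_mph(area_rate_ft2_per_s: float, boom_width_ft: float) -> float:
    """Forward speed implied by an area coverage rate, assuming the whole boom
    width is imaged continuously (area rate = width x speed)."""
    speed_ft_per_s = area_rate_ft2_per_s / boom_width_ft
    return speed_ft_per_s * SECONDS_PER_HOUR / FT_PER_MILE


def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point from a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # 2,000 sq ft/s over a 120-ft boom (figures from the article).
    print(f"implied speed: {implied_ground_speed_mph(2000, 120):.1f} mph")
    # Hypothetical stereo parameters: 1,400 px focal length, 10 cm baseline.
    print(f"depth at 140 px disparity: {stereo_depth_m(1400, 0.10, 140):.2f} m")
```

Depth from stereo lets a system separate a plant's height above the soil from flat background, which 2D color cues alone can confuse.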

Vision-Based Real-Time Decision-Making

The machine learning algorithms differentiate row crops from “everything else,” including weeds and crops that do not belong where they sprouted. These are called “volunteer” plants because they were not planted purposely for a growing season, explains Mulder.

For example, a corn plant might sprout in a field in which a farmer rotates the crop between corn and soybean. If John Deere’s embedded vision system is running the soybean algorithm, it will spray the corn plant, but if it is running the corn algorithm, it won’t, he says.
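
The crop-specific behavior Mulder describes amounts to a simple rule: anything that is not the crop the active model targets gets sprayed. The sketch below expresses that rule; the label strings and the set of non-plant classes are hypothetical and do not reflect John Deere's software.

```python
from enum import Enum


class Crop(Enum):
    CORN = "corn"
    SOYBEAN = "soybean"
    COTTON = "cotton"


# Hypothetical labels for things that are not plants and should never trigger a nozzle.
NON_PLANT_LABELS = {"soil", "residue"}


def should_spray_plant(detected_label: str, active_crop: Crop) -> bool:
    """Spray any plant that is not the crop the active model targets.

    In soybean mode a volunteer corn plant is just another weed; in corn mode
    the same plant is treated as crop and left alone.
    """
    if detected_label in NON_PLANT_LABELS:
        return False
    return detected_label != active_crop.value


if __name__ == "__main__":
    for mode in (Crop.SOYBEAN, Crop.CORN):
        action = "spray" if should_spray_plant("corn", mode) else "skip"
        print(f"{mode.value} mode, volunteer corn -> {action}")
```

Framing the classifier as "target crop versus everything else" means the system does not need a separate class for every weed species; a volunteer plant is handled the same way as any weed.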

Once a weed or volunteer plant is detected, the software sends a command to the appropriate nozzle, also mounted on the boom, to spray the weed. Separate software modules calculate boom height, length, and speed “to ensure that when that droplet leaves that nozzle target, it actually hits the actual weed on the ground,” Mulder says.
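
The timing problem Mulder describes can be sketched with basic kinematics: the weed must travel from the camera's view to the nozzle, while the system spends time processing the image and the droplet spends time falling from the boom. The model below is a simplified, hypothetical illustration using only boom height and ground speed; it ignores drag, wind, and boom sway, assumes the spray should arrive as the weed passes beneath the nozzle, and uses made-up parameter values.

```python
def valve_open_delay_s(camera_to_nozzle_m: float, ground_speed_m_s: float,
                       boom_height_m: float, droplet_speed_m_s: float,
                       processing_latency_s: float) -> float:
    """Seconds to wait, after a frame is captured, before opening the nozzle so
    the spray arrives as the weed passes beneath it. Simplified model: ignores
    drag, wind, and boom sway."""
    if ground_speed_m_s <= 0 or droplet_speed_m_s <= 0:
        raise ValueError("speeds must be positive")
    travel_time = camera_to_nozzle_m / ground_speed_m_s  # weed reaches the nozzle
    flight_time = boom_height_m / droplet_speed_m_s      # droplet reaches the ground
    delay = travel_time - flight_time - processing_latency_s
    if delay < 0:
        raise RuntimeError("weed passes the nozzle before spray can arrive; "
                           "slow down or mount the camera farther ahead")
    return delay


if __name__ == "__main__":
    # Hypothetical values: camera 1 m ahead of the nozzle, 5 m/s ground speed,
    # 0.5 m boom height, 10 m/s droplet speed, 50 ms processing latency.
    print(f"{valve_open_delay_s(1.0, 5.0, 0.5, 10.0, 0.05) * 1000:.0f} ms")
```

The negative-delay check shows why speed and camera placement are linked: at higher speeds the processing budget shrinks, so the camera has to look farther ahead of the nozzles.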

Aggregated data from the use of the system is collected centrally in the cloud at John Deere’s operations center. Farmers can tap into their data to help them make business decisions. For example, Mulder explains, “If I have a weed patch that keeps coming up year after year, and I'm putting on the same chemistry year after year, I’ve got to change something.”
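
Mulder's example suggests a simple analysis farmers could run on their own records: find field locations where weeds reappear season after season despite the same herbicide chemistry. The sketch below is a hypothetical illustration, not a John Deere Operations Center feature; it assumes weed detections have already been binned into grid cells and that each season's herbicide group is known.

```python
def flag_for_rotation(weed_cells_by_year: dict[int, set[tuple[int, int]]],
                      herbicide_group_by_year: dict[int, str],
                      window: int = 3) -> set[tuple[int, int]]:
    """Return grid cells with weeds in each of the last `window` recorded seasons,
    provided all of those seasons used the same herbicide group."""
    years = sorted(weed_cells_by_year)[-window:]
    if len(years) < window:
        return set()  # not enough history to call a patch persistent
    groups = {herbicide_group_by_year.get(y) for y in years}
    if len(groups) != 1 or None in groups:
        return set()  # chemistry already varied (or unknown): nothing to flag
    return set.intersection(*(weed_cells_by_year[y] for y in years))


if __name__ == "__main__":
    # Hypothetical grid cells (row, column) and herbicide groups.
    history = {2022: {(0, 0), (1, 2)}, 2023: {(1, 2), (3, 3)}, 2024: {(1, 2), (0, 0)}}
    groups = {2022: "group_9", 2023: "group_9", 2024: "group_9"}
    print(flag_for_rotation(history, groups))
```

Cells flagged this way are candidates for a change in chemistry or a non-chemical control method, which is the kind of decision Mulder describes.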

The sprayers connect to the remote operations center using Wi-Fi, cellular connectivity or satellite signals.

Alternative Approaches Aided by Vision-Guided Robotics

Other automated approaches to weeding eliminate herbicides entirely. In an article [3] for Penn State Extension, researchers report on a bevy of emerging approaches, including mechanical systems, thermal energy, and lasers. In addition, they write, robotic systems are expected to “revolutionize” weed control. “Robotic weed removal systems can perform self-guidance, weed detection, mapping, and control, all while ensuring the surrounding crops remain untouched,” they write.

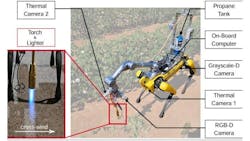

For example, researchers at Texas A&M University (College Station, TX, USA) developed an experimental weed-control system for farmers using a robotic dog equipped with a robotic arm, cameras, AI software, and a propane-powered flamethrower.

The robotic arm is attached to the robotic dog, and the arm grips the flamethrower. The dog/arm combination moves into position near a weed and then triggers the flame. The system doesn’t burn down weeds. Instead, the flame heats a weed’s core, which stunts its growth.

AI-Enabled Vision and Precision Mechanical Weeding

Another example of a robotic system is from Aigen (Kirkland, WA, USA). Launched in 2020, Aigen is developing Element, an autonomous robot that uses a combination of cameras, AI-enabled algorithms, and mechanical weeding techniques to recognize and remove weeds between rows of crops. The mechanical approach, which is similar to working with a hoe, cuts off weeds at their roots. Element is also eco-friendly because it runs entirely on solar and wind energy, according to the company’s website.

The company, which is one of the 2024 winners of Amazon Web Services’ Compute for Climate Fellowship, is currently testing the technology at farms in various U.S. states.

Aigen’s goal is to sell the robots as a service in which Aigen delivers, runs, and maintains a fleet of robots throughout the growing season.

Laser-Based Weed Zappers for Commercial Agriculture

Carbon Robotics (Seattle, WA, USA) takes a different approach. It uses lasers to weed fields. The latest-generation product is LaserWeeder G2, a pull-behind robot that combines AI, embedded computer vision, robotics, and laser technology.

The robot, which comes in numerous sizes to fit different crop situations, includes multiple fully sealed weeding modules. Each one includes two 240W lasers, three cameras, two NVIDIA (Santa Clara, CA, USA) GPUs, and 20 LEDs. The lasers have a 21-in shoot width.

The LEDs operate in strobe mode and are triggered simultaneously, allowing the weeder to operate day or night.

Neural networks running at the edge distinguish weeds from crop plants and identify precise locations for the lasers to hit. The cameras in each module are divided into a “predict” camera and “target” cameras.

The neural networks’ predictions are based on a dataset of more than 40 million labeled plants, built on images collected from three continents.

On its website, Carbon Robotics says its system targets weeds with sub-millimeter precision and is capable of processing 4.7 million images per hour.
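
For scale, the quoted throughput works out to roughly 1,300 images per second for the whole machine. The article does not say how many weeding modules that figure assumes, so the per-module rate cannot be derived from it:

```latex
\frac{4.7 \times 10^{6}\ \text{images/h}}{3600\ \text{s/h}} \approx 1306\ \text{images/s}
```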

But no matter the approach, executives at these precision agriculture solutions companies envision a future in which automation using embedded vision systems and AI is more common.

The analysts at McKinsey & Company agree, concluding in their report, “If farm economics and sustainability continue to apply pressure on farmers, we anticipate that adoption of automated technology will accelerate dramatically. As more growers realize the triple win that farm automation can represent—greater agricultural productivity and profits, improved farm safety, and advances toward environmental-sustainability goals—excitement about these technologies will spread.”

References:

1. “Precision Agriculture: Benefits and Challenges for Technology Adoption and Use,” U.S. Government Accountability Office, January 31, 2024. https://www.gao.gov/products/gao-24-105962

2. Bland R, Ganesan V, Hong E, Kalanik J. “Trends Driving Automation on the Farm,” McKinsey & Company, May 31, 2023. https://www.mckinsey.com/industries/agriculture/our-insights/trends-driving-automation-on-the-farm

3. He L, Brunharo C. “An Overview of Advanced Weed Management Technologies for Orchards,” Penn State Extension, March 28, 2024. https://extension.psu.edu/an-overview-of-advanced-weed-management-technologies-for-orchards

About the Author

Linda Wilson

Editor in Chief

Linda Wilson joined the team at Vision Systems Design in 2022. She has more than 25 years of experience in B2B publishing and has written for numerous publications, including Modern Healthcare, InformationWeek, Computerworld, Health Data Management, and many others. Before joining VSD, she was the senior editor at Medical Laboratory Observer, a sister publication to VSD.