Hardware-based image compression surpasses software

R. Winn Hardin

Contributing Editor

Image-storage and transmission techniques are primary components of many image-processing applications, especially for medical and military vision systems. Moreover, many image-processing systems offer some type of image compression to reduce bandwidth consumption or increase the effective capacity of storage systems.

In many non-time-critical applications, image-compression algorithms are routinely implemented in software. However, for embedded or high-speed image-processing applications or networks, hardware-based image-compression techniques often offer the best performance.

Because CPUs are continuing to provide improved imaging-system performance, the number of manufacturers producing image-compression microchips has dwindled. Despite this, several hardware compression vendors have experienced success by targeting their products to niche applications in the military, medical, and large-volume document-handling industries, where hardware-based image-compression chips outperform software compression techniques.

Developed for facsimile and document image compression, the Joint Bi-level Image Experts Group (JBIG) standard also holds potential for handling large, 12-bit-encoded medical x-rays, for which lossless compression is crucial. This standard is implemented as a sequential/progressive codec that can encode or decode images in sequential or progressive modes.

Originally developed to operate on black-and-white images, JBIG techniques also can be used to compress gray-scale and color images. They offer higher compression ratios than lossless JPEG (Joint Photographic Experts Group) techniques for pictures with up to about six bits/pixel and provide an alternative for applications, such as medical imaging, for which distortion caused by lossy JPEG compression is unacceptable. In progressive mode, applying JBIG techniques involves the use of a template to create a base-resolution version of an image. The pixel value with the highest probability of occurrence within a designer-established template area defines that area. In other words, the algorithm uses surrounding pixels to predict the pixel value within the template area.
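The idea of predicting a pixel from its already-seen neighbors can be sketched in a few lines of Python. This is only an illustration, not the JBIG algorithm itself: the three-pixel template (left, above, above-left), the simple occurrence counts, and the function names are all assumptions made for the example. The point it shows is that the encoder and decoder can build identical statistics, so only the prediction errors need to be passed along and the image is still recovered exactly.

```python
def _context(img, r, c):
    """Hypothetical 3-pixel template: left, above, above-left (0 outside the image)."""
    def px(rr, cc):
        return img[rr][cc] if rr >= 0 and cc >= 0 else 0
    return (px(r, c - 1), px(r - 1, c), px(r - 1, c - 1))

def _run(data, decoding):
    h, w = len(data), len(data[0])
    counts = {}                      # context -> [times pixel was 0, times pixel was 1]
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            known = out if decoding else data   # pixels both sides already know
            seen = counts.setdefault(_context(known, r, c), [1, 1])
            guess = 1 if seen[1] > seen[0] else 0
            out[r][c] = guess ^ data[r][c]      # error when encoding, pixel when decoding
            actual = out[r][c] if decoding else data[r][c]
            seen[actual] += 1                   # adapt the statistics
    return out

def encode_errors(image):
    """Return a map that is 1 only where the prediction was wrong."""
    return _run(image, decoding=False)

def decode_errors(errors):
    """Rebuild the bi-level image from the prediction-error map."""
    return _run(errors, decoding=True)

image = [[0, 0, 1, 1]] * 4           # a bi-level image with one vertical edge
errors = encode_errors(image)
restored = decode_errors(errors)
```

On regular content such as text or line art, most entries of the error map are zero, which is what makes the following entropy-coding stage effective.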

Progressively higher-resolution images are created by adding the difference between one bit plane and the next. For example, 8-bit/pixel gray-level images are encoded as eight separate bit planes. Coding the differences between the predicted pixel value and the actual value is usually more efficient in imaging. Because the base value for a particular pixel is known from the lower resolution data, only the differences need to be coded and delivered. This mode is commonly used for Web-based applications or wherever image transmission and viewing are key parts of the application.
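As a minimal sketch of the bit-plane decomposition mentioned above, the following Python splits 8-bit gray levels into eight bi-level planes and stacks them back together. The function names and the most-significant-plane-first ordering are assumptions for illustration; the article does not specify how a given codec orders its planes.

```python
def to_bit_planes(pixels, bits=8):
    """Split a flat list of gray levels into `bits` bi-level planes, MSB first."""
    return [[(p >> b) & 1 for p in pixels] for b in range(bits - 1, -1, -1)]

def from_bit_planes(planes):
    """Rebuild the gray levels by stacking the planes back together."""
    pixels = [0] * len(planes[0])
    for plane in planes:
        pixels = [(p << 1) | bit for p, bit in zip(pixels, plane)]
    return pixels

row = [0, 37, 128, 255]              # four 8-bit/pixel gray levels
planes = to_bit_planes(row)          # eight lists of 0s and 1s
rebuilt = from_bit_planes(planes)
```

Each plane is itself a bi-level image, so a coder designed for black-and-white data can process the planes one at a time.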

In sequential mode, the image comes across at one resolution level and is processed in a single strip. This mode is mainly used for bi-level facsimile applications. Bi-level means that each pixel takes one of two possible values (0 or 1, black or white) and therefore requires one bit per pixel for storage.

A third mode, the JBIG sequential-compatible progressive mode, merges the two previously described methods and breaks the image into horizontal strips of a designer-defined length. Each strip is then compressed as if it were an original image, allowing the planes of a multiplane image to be interleaved strip by strip. This allows the designer to decode a gray-scale or color image one horizontal band at a time as opposed to having to decode the entire image before viewing or printing any portion of it. Gray-scale or color images are compressed in this manner.

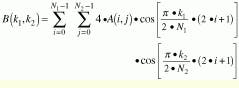

Using the JBIG algorithm and a Q-encoder, which was originally developed by IBM Corp. (Poughkeepsie, NY) and includes the arithmetic encoder and other portions of the JBIG encoding algorithm, Tak'Asic (Guyancourt, France) has developed the IPB SG3 compression chip, which offers either sequential or sequential-compatible progressive operation in full-duplex mode (see Fig. 1). The JBIG step-resolution approach benefits database searches and image transmissions, where the operator can stop the process after adequate resolution is reached; the compression itself comes from the Q-encoder.

Similar in structure to a Huffman encoder, an arithmetic encoder searches a template area for similar values and creates a probability chart for each value based on the number of occurrences within the template area. Encoded values are real numbers between 0 and 1, with longer bit strings set aside for uncommon pixel values and shorter bit strings used for frequently repeated values. Because difference values between bit planes are coded instead of additional pixels being added to increase resolution, most difference values can be set to zero.
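The way an arithmetic encoder maps a whole message onto a number between 0 and 1 can be illustrated with a short Python sketch. It is a textbook-style static coder using exact fractions, not the adaptive Q-encoder described here; the fixed probabilities and the function names are assumptions chosen for the example.

```python
from fractions import Fraction

def build_ranges(probs):
    """Give each symbol its own slice [start, end) of the unit interval."""
    ranges, start = {}, Fraction(0)
    for sym, p in probs.items():
        ranges[sym] = (start, start + p)
        start += p
    return ranges

def encode(symbols, probs):
    """Narrow [0, 1) once per symbol; likely symbols shrink it only slightly."""
    ranges = build_ranges(probs)
    low, width = Fraction(0), Fraction(1)
    for s in symbols:
        start, end = ranges[s]
        low, width = low + width * start, width * (end - start)
    return low, width    # any number in [low, low + width) identifies the message

def decode(value, count, probs):
    """Recover `count` symbols by repeatedly locating `value` in a slice."""
    ranges = build_ranges(probs)
    out = []
    for _ in range(count):
        for sym, (start, end) in ranges.items():
            if start <= value < end:
                out.append(sym)
                value = (value - start) / (end - start)
                break
    return out

# Hypothetical statistics: white pixels (0) are far more common than black (1).
probs = {0: Fraction(9, 10), 1: Fraction(1, 10)}
message = [0, 0, 0, 1, 0, 0, 0, 0]
low, width = encode(message, probs)
decoded = decode(low, len(message), probs)
```

The final interval width equals the product of the symbol probabilities, so a message made mostly of likely symbols leaves a wide interval that can be identified with few bits, while each unlikely symbol narrows it sharply.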

Designed to handle up to 800-dpi images on 64,000 lines (one horizontal line in the image by one bit vertical up to 10 x 80 in.), the IPB SG3 runs at a 66-MHz clock speed and provides up to 256-Mpixel/s compression rates and up to 400-Mpixel/s decompression rates. The chip also includes a typical predictor, which deduces the most probable bit value per bit plane and is part of the JBIG encoding algorithm, an arithmetic coder, and two adaptive templates that can shift to produce the best possible template for each portion of the image. Internal memory (2 kbytes) allows three lines of buffering (one of which is the line undergoing compression); therefore, no external memory is required.

"This is a very different approach to the compression problem," says Aziz Makhani, director of sales for Advanced Hardware Architectures (AHA; Pullman, WA). "Although the IPB SG3 is targeted at document compression, it can be used in medical applications where lossless compression is required." AHA markets and distributes these devices in the United States and Asia for Tak'Asic.

Hardware libraries

JBIG hardware compression is also used in the IBM Corp. (Waltham, MA) Blue Logic core offerings. In addition to JBIG techniques, however, IBM and AHA also offer the Adaptive Lossless Data Compression (ALDC) method. Unlike JBIG, which is mainly designed for bi-level image data, ALDC uses a proprietary version of the general-purpose Lempel-Ziv (LZ) algorithm (originally LZ77) called IBM LZ1.

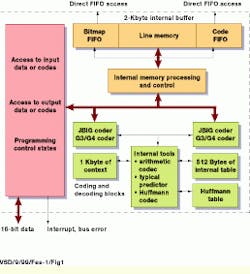

The LZ algorithms deal with symbolic data such as text and imagery. The LZ1 algorithm simultaneously compares incoming bytes against a history, also called a sliding window buffer, of data (see Fig. 2). The IBM core library design allows designers to determine how large a history is needed, ranging from 512 to 2048 bytes. Increasing the size of the history results in greater compression ratios (usually 2:1 or higher).

As each byte is read, it also passes into the history cache. After the history is full, the oldest byte is removed from the cache to make way for incoming data. For imaging applications, this approach ensures that incoming data are compared against data in the nearby context, therefore increasing the chances of a match and successful compression. If an incoming byte does not match against the history, the byte is passed on directly to the output stream.

In the LZ1 algorithm, compression is achieved by replacing a string of incoming bytes that matches part of the history buffer with a code word that includes pointer and length information (larger histories can degrade compression performance because they require longer code words). The pointer tells the decoder where to begin copying data from a "matched" portion of the data that has already been received; the length tells the decoder where to end the copy. The theory is that the code word, with its pointer and length data, is smaller than the original matched string, resulting in compression. Thus, LZ compression works best on data streams that share some similarity. Purely random data would result in a 12.5% increase over the original file size, because every unmatched byte still carries the overhead needed to distinguish it from a pointer-and-length code word.
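A sliding-window coder of this general kind can be sketched in Python as follows. This is a plain LZ77-style illustration rather than IBM's LZ1 format: the token layout, the greedy longest-match search, and the bit costs in `estimated_bits` (one flag bit plus eight data bits per unmatched byte, and hypothetical 9-bit pointers and 4-bit lengths) are all assumptions. One flag bit per unmatched byte does, however, reproduce the 12.5% expansion quoted for data with no matches, since 9/8 = 1.125.

```python
def lz_encode(data: bytes, history_size: int = 512, min_match: int = 2):
    """Emit ('lit', byte) for unmatched bytes or ('copy', offset, length) for matches."""
    tokens, i = [], 0
    while i < len(data):
        best_len, best_off = 0, 0
        for j in range(max(0, i - history_size), i):   # search the history window
            k = 0
            while i + k < len(data) and data[j + k] == data[i + k]:
                k += 1
            if k > best_len:
                best_len, best_off = k, i - j
        if best_len >= min_match:
            tokens.append(("copy", best_off, best_len))
            i += best_len
        else:
            tokens.append(("lit", data[i]))
            i += 1
    return tokens

def lz_decode(tokens) -> bytes:
    """Replay literals and copy matched runs out of the already-decoded data."""
    out = bytearray()
    for token in tokens:
        if token[0] == "lit":
            out.append(token[1])
        else:
            _, offset, length = token
            for _ in range(length):
                out.append(out[-offset])
    return bytes(out)

def estimated_bits(tokens, pointer_bits=9, length_bits=4):
    """Hypothetical cost model: 9 bits per literal, flag + pointer + length per copy."""
    return sum(9 if t[0] == "lit" else 1 + pointer_bits + length_bits for t in tokens)

text = b"vision systems and vision systems"
tokens = lz_encode(text)
restored = lz_decode(tokens)
```

In this example the second occurrence of "vision systems" collapses into a single copy token, while the first 19 bytes pass through as literals because nothing in the history matches them yet.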

IBM offers three versions of LZ compression devices: the ALDC1-5S, -20S, and -40S, which compress and/or decompress data at 5, 20, and 40 Mbytes/s, respectively. In the encoder section of the chips, the history resides in a content-addressable memory (CAM), whereas the decoder memory buffer is a byte-wide synchronous random-access memory (SRAM) of the same length as the encoder's CAM. IBM also offers a product that combines both CAM and SRAM on the same chip for simultaneous encoding and decoding of separate data streams, and a content-addressable random-access memory (CRAM) buffer that puts a codec on a single chip. The CRAM configuration, however, prevents simultaneous encoding and decoding of two data streams.

IBM is marketing the ALDC core hardware library for medical and military imaging applications and to printing, networking, and storage users. In addition to JBIG and ALDC, the IBM Blue Logic core library also includes MPEG hardware compression cores.

Lossy or lossless

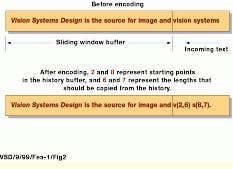

Most JPEG image compression is lossy, meaning that higher compression ratios are chosen over data integrity. JPEG compression does this by discarding some of the fine detail from image data that would not normally be noticeable to the human eye. Lossy JPEG compression is performed by breaking down the image into 8 x 8 blocks. A discrete cosine transform (DCT) is performed on each block, which converts the image data into spatial-frequency data. Quantization tables set by the designer when selecting the compression ratio then discard the data outside the application-set parameters, so that much of the data in the high-frequency zones is set to zero (see Fig. 3). The remaining nonzero data are then encoded with developer-defined Huffman tables.
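The transform-and-quantize step can be illustrated with a small pure-Python sketch. It is written for clarity, not speed, and it is not how a compression chip implements the DCT; the quantization table below is a made-up example with coarser steps at higher frequencies, and the function names are assumptions.

```python
import math

N = 8  # JPEG block size

def dct2(block):
    """Forward 2-D DCT-II of an 8 x 8 block (orthonormal scaling)."""
    def scale(k):
        return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)
    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = scale(u) * scale(v) * total
    return out

# Hypothetical quantization table: larger divisors at higher spatial frequencies.
QTABLE = [[16 + 8 * (u + v) for v in range(N)] for u in range(N)]

def quantize(coeffs, table=QTABLE):
    """Divide each coefficient by its table entry and round; this is the lossy step."""
    return [[round(coeffs[u][v] / table[u][v]) for v in range(N)] for u in range(N)]

def count_zeros(quantized):
    return sum(value == 0 for row in quantized for value in row)

# A smooth gradient block, level-shifted by 128 as baseline JPEG does.
block = [[(100 + 2 * x + y) - 128 for y in range(N)] for x in range(N)]
quantized = quantize(dct2(block))
zeros = count_zeros(quantized)
```

For smooth image content like this gradient, nearly all of the 64 quantized coefficients come out as zero, which is what leaves so little data for the Huffman stage to encode.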

Huffman coding scans the data for the most frequently recurring strings. The most common strings are placed near the top of a binary tree (each branch has only a left and a right option), so they receive short codes, while the least-used strings sit deeper in the tree and receive longer codes. After the tree is built, the sequence at each leaf is encoded in binary fashion, with a zero (0) representing the left branch at each node and a one (1) representing the right branch. This method results in a unique code for each string, with the most common strings having the shortest binary values and less common strings having longer ones.
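The tree-building procedure can be sketched in Python with a priority queue. This is the generic textbook construction over single symbols, offered as an illustration only; JPEG hardware typically works from predefined or designer-supplied tables, and the function name and sample data are assumptions.

```python
import heapq
from collections import Counter

def huffman_codes(data):
    """Return {symbol: bit string}; frequent symbols receive shorter codes."""
    freq = Counter(data)
    # Heap entries: (weight, tie_breaker, {symbol: code_so_far})
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    if len(heap) == 1:                       # degenerate case: only one symbol
        return {sym: "0" for sym in heap[0][2]}
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)    # the two least-frequent subtrees
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}         # 0 = left branch
        merged.update({s: "1" + c for s, c in right.items()})  # 1 = right branch
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]

data = "aaaaaaaabbbc"
codes = huffman_codes(data)
encoded = "".join(codes[s] for s in data)
```

Because no code is a prefix of another, the decoder can walk the same tree bit by bit and recover the symbols without any separators.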

To address both lossy and lossless JPEG compression, Zoran (Santa Clara, CA) offers the ZR36050 compression IC for use with images of 12 or fewer bits per pixel. "This is basically the most flexible solution we have. The compression ratio is completely adjustable," says Kirby Kish, Zoran director of marketing for desktop video products. He adds that in addition to the printing industry, the Zoran codecs are finding growing markets in digital surveillance and medical imaging, where lossless compression is important.

The ZR36050 chip can handle images up to 16k x 16k pixels in size. Its consumer counterpart, the ZR36060, can be used to compress NTSC or PAL images. It provides 25-frame/s compression of PAL and 30-frame/s compression of NTSC images, but does not offer lossless JPEG mode. Both the ZR36060 and ZR36050 incorporate Zoran's patented automatic bit-rate control; this control adjusts the compression ratio based upon designer-defined maximum output image size or bandwidth criteria, depending on whether the application is storage or transmission.

Sometimes, lossy JPEG compression is inadequate because lines often appear in the decoded images at the boundaries of the 8 x 8-pixel blocks. In these cases, lossless JPEG is an alternative. In lossless mode, the Zoran ZR36050 chip reads the data by raster lines instead of in 8 x 8 blocks, bypasses the DCT coefficient buffers, and passes the data directly to a Huffman encoder.

Although software image-compression techniques offer algorithm choices beyond simple lossy or lossless modes, cost versus performance clearly gives hardwired image-compression solutions the edge for many stand-alone systems. A chip investment of relatively few dollars can double or triple the capacity of an optical drive or other archiving system that costs tens of thousands of dollars, while ensuring data integrity. Similarly, these same chips can double or better the bandwidth of a factory's intranet, enabling more automation capabilities without installing new communication lines.

FIGURE 1. The Tak'Asic IPB SG3 uses two codecs (arithmetic and Huffman) and a predictor to create a base-resolution version of the picture. It then encodes or compresses the picture based on differences between a base pixel and the next higher version of the pixel. Compression is achieved because the difference requires less data than a value for an entirely new pixel.

FIGURE 2. A typical Lempel-Ziv compression uses a symbolic data stream. A history or sliding window buffer is compared against each byte of the incoming data stream. In this example, as the letter 'i' in the word 'vision' arrives, it is compared simultaneously against all the byte values in the history and is tacked on to the end of the history; then, the oldest value in the history is removed. As the LZ1 algorithm determines that the lowercase 'i' has a matching value in the history, it sets a pointer value. As more consecutive values match, a second value (that of the counter) continues to increase. In this example, when the code word for the incoming 'i' reaches the decoder, the LZ1 algorithm knows to begin copying values from the second slot in the history and to continue copying values for a total of six slots. Compression is achieved because the binary code for 2,6 is much shorter than that of the string 'ision' or 'ystems.'

FIGURE 3. The JPEG compression technique divides images into 8 x 8 pixel blocks and performs a discrete cosine transform (DCT) of each block. The quantizer rounds off the DCT coefficients according to the quantization matrix. This step produces the "lossy" nature of JPEG, but allows for large compression ratios. The JPEG compression technique uses a variable-length code on these coefficients, and then writes the compressed data stream to an output file. For decompression, the JPEG technique recovers the quantized DCT coefficients from the compressed data stream, performs the inverse transforms, and displays the image. (Courtesy Purdue University)

Company Information

Advanced Hardware Architectures

Pullman, WA 99163

(509) 334-1000

Fax: (509) 334-9000

Web: www.aha.com

IBM Microelectronics

Waltham, MA 02192

(781) 642-5991

Web: www.chips.ibm.com

Tak'Asic

Redwood City, CA 94063

(650) 298-8111

Fax: (650) 568-1776

Web: www.takasic.com

Zoran

Santa Clara, CA 95054

(408) 919-4111

Fax: (408) 919-4122

Web: www.zoran.com