Machines identify images without being taught

Numerous researchers have developed systems that can distinguish one image from another. But for the most part, these systems are trained by being presented with tens of thousands of images that have been labeled according to their content.

Recent research, however, suggests that it might be possible to build a system based on artificial neural networks that can identify the content of images from unlabeled data such as random images from the Internet.

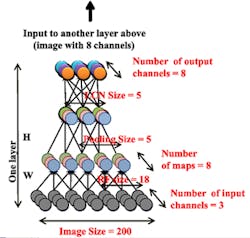

Neural networks have been built for such purposes in the past. However, because of their high computational cost, most have used only 1 to 10 million connections and operated on smaller datasets so that they could be trained in a practical amount of time.

With access to Google (Mountain View, CA, USA) data centers, however, researchers at Google and Stanford University (Stanford, CA, USA) have now built a much larger network that they have shown can learn to identify high-level features such as faces.

The artificial neural network itself, which contained three million neurons and one billion synapses, was trained with a dataset of frames sampled from ten million YouTube videos. The computation, which was spread across 16,000 CPU cores in Google data centers, ran for three days.
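To put those figures in perspective, the short Python sketch below works through the arithmetic. It uses only the numbers quoted in this article; the variable names are illustrative, and the core-hour total assumes that every core ran for the full three days, which the article does not state.

```python
# Figures quoted in the article
neurons = 3_000_000
synapses = 1_000_000_000
cpu_cores = 16_000
training_days = 3

# Upper end of the 1 to 10 million connections cited for earlier networks
earlier_max_connections = 10_000_000

# Average number of connections per neuron
synapses_per_neuron = synapses / neurons

# Total compute, ASSUMING all cores were busy for the whole run
core_hours = cpu_cores * training_days * 24

# How much larger this network is than the largest earlier ones cited
scale_vs_earlier = synapses / earlier_max_connections

print(f"Connections per neuron: about {synapses_per_neuron:.0f}")
print(f"Compute budget: about {core_hours:,} core-hours")
print(f"Size relative to earlier networks: about {scale_vs_earlier:.0f}x")
```

On these numbers, each neuron has a few hundred connections on average, and the network is roughly two orders of magnitude larger than the biggest earlier networks mentioned above.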

Contrary to what appears to be a widely held intuition, experimental results revealed that the Google neural network could then be used to detect content such as faces in images, even though none of the images had previously been labeled.

More information on the research can be found here.

-- Dave Wilson, Senior Editor, Vision Systems Design