LEADING EDGE VIEWS: 3-D Imaging Advances Capabilities of Machine Vision: Part III

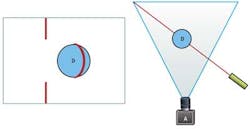

Structured-light 3-D scanners are active stereo vision systems that measure the 3-D shape of an object using projected light patterns and a camera. In the simplest form of a structured-light system, a single laser stripe is projected onto a 3-D surface (see Fig. 1). Light reflected from the object is then captured by a single camera and used to geometrically reconstruct the shape of the surface in three dimensions.
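The geometric reconstruction behind a single laser stripe can be sketched in a few lines. The example below is a minimal illustration, not the method of any particular scanner: it assumes an ideal pinhole camera at the origin, a laser offset from it by a known baseline, and a laser sheet tilted toward the camera's optical axis by a known angle. The function name `stripe_depth` and all parameter values are hypothetical.

```python
import math

def stripe_depth(u, focal_px, cx, baseline, laser_angle_deg):
    """Depth along the camera's optical axis of the surface point seen at
    image column u, assuming a pinhole camera and a tilted laser sheet."""
    # Slope x/z of the camera ray through column u (pinhole model).
    ray_slope = (u - cx) / focal_px
    # The laser sheet leaves the point x = baseline and follows
    # x = baseline - z * tan(angle); intersect it with the ray x = z * ray_slope.
    denom = ray_slope + math.tan(math.radians(laser_angle_deg))
    if denom <= 0:
        raise ValueError("camera ray never meets the laser sheet in front of the camera")
    return baseline / denom

# Hypothetical setup: 100 mm baseline, 800 px focal length, 30 degree laser tilt.
for column in (200, 320, 440):
    z = stripe_depth(column, focal_px=800.0, cx=320.0, baseline=0.1, laser_angle_deg=30.0)
    print(f"column {column}: depth {z:.3f} m")
```

Each image column where the stripe appears yields one depth value, so a single frame gives one profile of the surface. Building a full 3-D shape requires sweeping the stripe or moving the object.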

One early example of such a laser scanning system was developed in the 1980s by Cyberware (Monterey, CA, USA; www.cyberware.com) to image individuals in 3-D for the motion picture The Abyss. The system comprised four rigs, each with its own laser and camera. In operation, the four rigs were moved mechanically along the vertical axis while each laser projected a stripe onto the subject. A set of image sensors on each rig then captured reflected light from the subject.

In a more modern incarnation of this approach, LMI Technologies (Delta, BC, Canada) developed a range of high-speed 3-D scanners that also work on the single-laser-stripe principle. The company's DynaVision system comprises a set of four single-laser-stripe projectors and sensors in a single unit that can be grouped together in series. When used for log inspection applications, a number of laser stripes are projected onto the timber and the reflected light is imaged by the embedded sensors.

In inspection applications like this, either the scanner must be moved across the surface of the object to be imaged or the object must move past the scanner. For assembly line applications where objects are moving under the imaging system, laser stripe scanners can prove extremely effective.

There are few, if any, ambient light issues that must be addressed when using such systems. Because the laser produces intensely bright light in a narrow band of wavelengths, it is relatively straightforward to use interference filters matched to the laser wavelength to block out ambient light.

White light scanners

White light scanners rely upon the triangulation principle as well, but they do so by projecting a series of time-multiplexed patterns onto an object. These scanners typically incorporate a single 2-D projector and a 2-D camera in their design. By projecting multiple patterns onto an object, active triangulation systems eliminate the dense stereo correspondence problem found in passive systems. Every feature of the target object within the camera's field of view can be reconstructed in high resolution because the pixel correspondences between the camera and the projector are guaranteed, a marked difference from the results available with passive stereo vision systems.
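One common way to build time-multiplexed patterns is binary Gray coding, in which each projector column is assigned a unique bit sequence that is displayed one bit per pattern. The article does not say which coding any particular scanner uses, so the sketch below is only an assumed illustration of how a camera pixel could recover its projector column from the sequence of bright and dark values it observes. The function names are hypothetical.

```python
def gray_encode(n):
    """Convert a plain binary number to its Gray code."""
    return n ^ (n >> 1)

def gray_decode(g):
    """Convert a Gray code back to the plain binary number."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n

def make_patterns(width, n_bits):
    """Return n_bits stripe patterns (most significant bit first).
    Each pattern is a list holding 0 (dark) or 1 (bright) per projector column."""
    return [[(gray_encode(col) >> (n_bits - 1 - k)) & 1 for col in range(width)]
            for k in range(n_bits)]

def decode_column(observed_bits):
    """Recover the projector column from the bits one camera pixel saw,
    listed in the order the patterns were projected."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return gray_decode(g)

# A 16-column projector needs only 4 patterns to label every column uniquely.
patterns = make_patterns(width=16, n_bits=4)
seen_by_pixel = [pattern[11] for pattern in patterns]  # pixel lit by column 11
print(decode_column(seen_by_pixel))
```

Because every camera pixel decodes its own projector column directly, no search for matching features is needed, which is why the correspondences are guaranteed. In practice the observed intensities would first have to be thresholded into bits, a step this sketch omits.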

Projecting a large number of patterns onto a static object makes it possible to produce highly accurate 3-D scans, such as the 3-D surface of a face. Because the white structured light patterns are projected in sequence, each over a short time burst, it may appear that moving objects cannot be captured. However, these systems can capture images at rates as high as 150 frames/sec, so it is possible to reconstruct moving objects. In fact, today there are projectors that work in excess of 360 frames/sec with 8-bit grayscale.

The drawback of white light scanners is their sensitivity to ambient light, which means they require controlled lighting environments. Special consideration must also be given to environments where mirrored surfaces and specularity may exist, since these can be detrimental to system performance.

One-shot systems

Instead of using multiple patterns of light, some researchers are developing systems that use one-shot structured light. These one-shot structured light systems attempt to find pixel correspondences between the camera and projector from a single, continuously projected pattern.

One highly successful system that relies on this type of structured light approach is the Microsoft Kinect camera, which uses a pseudo-random dot pattern produced by a near-infrared (NIR) laser source as its single structured light pattern. By imaging surfaces using an NIR camera, it is possible to then generate a depth map of the scene. Using a single light pattern enables the Kinect camera to operate in real time—an obvious benefit in the gaming applications for which it was developed.

However, both the depth accuracy and the lateral resolution of the system are considerably lower than with a multiple-pattern structured light system, since correspondences are found by matching small windows of camera pixels rather than individual pixels. The Kinect camera, for example, is only capable of 1-cm depth accuracy and a lateral resolution of 3 mm, which is comparable to the performance of a passive stereo vision system.
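The idea of matching small windows of pixels against a known pattern can be illustrated with a generic one-dimensional block-matching search. This is a hedged sketch using a sum-of-absolute-differences cost and is not the algorithm used in the Kinect, which the article does not describe. The function `best_shift`, the window size, and the synthetic pattern are all assumptions made for illustration.

```python
import random

def best_shift(reference, observed, x, half_window, max_shift):
    """Find the shift s in [0, max_shift] for which the observed window
    centered at x best matches the reference window centered at x - s."""
    best_s, best_cost = None, None
    for s in range(max_shift + 1):
        if x - s - half_window < 0 or x + half_window >= len(observed):
            continue  # window would fall outside the rows
        # Sum of absolute differences over the window.
        cost = sum(abs(observed[x + k] - reference[x - s + k])
                   for k in range(-half_window, half_window + 1))
        if best_cost is None or cost < best_cost:
            best_s, best_cost = s, cost
    return best_s

# Synthetic pseudo-random reference row and a copy displaced by 3 pixels.
rng = random.Random(0)
reference = [rng.randrange(256) for _ in range(64)]
true_shift = 3
observed = [0] * true_shift + reference[:-true_shift]

print(best_shift(reference, observed, x=20, half_window=2, max_shift=8))
```

Since a whole window must agree before a match is declared, fine detail smaller than the window is averaged away. This is one way to see why a single-pattern system gives up lateral resolution compared with a system that codes every pixel individually.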

Solving problems

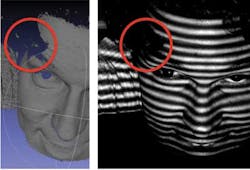

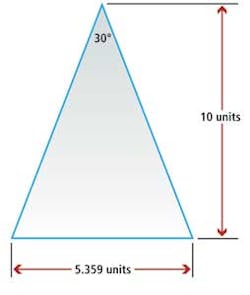

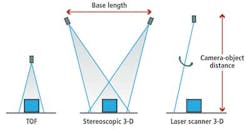

With systems that operate on the principle of triangulation, occlusion problems are typical because certain surfaces may not be visible to both the camera and the projector. Figure 2 shows a typical case where the temple of a head (highlighted in red) would be clearly visible to a camera but not to the structured light source. The camera is therefore unable to record the necessary pattern information from the projector. So when deploying a system based on triangulation, it is also important to know how far apart the two devices can be spaced (see Fig. 3).

Another challenge is simply geometry. For example, to maintain a useful triangulation angle of 30° when imaging an object that is 10 units away from a dual-camera or camera-projector combination, the two devices must be more than 5 units apart. By the same reasoning, the cameras would need to be more than 25 m apart to image an object 50 m away. Clearly, the application of such systems for long-range sensing is limited.
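The baseline figures quoted here follow from simple trigonometry. The sketch below assumes a symmetric arrangement, with the object centered in front of the two devices and the triangulation angle measured at the object between the two lines of sight. Under that assumption the baseline is 2 × distance × tan(angle / 2). The function name is hypothetical.

```python
import math

def required_baseline(distance, triangulation_angle_deg):
    """Separation between the two devices needed to subtend the given
    triangulation angle at an object centered in front of them."""
    half_angle = math.radians(triangulation_angle_deg) / 2.0
    return 2.0 * distance * math.tan(half_angle)

print(round(required_baseline(10, 30), 2))  # 5.36 units for an object 10 units away
print(round(required_baseline(50, 30), 1))  # 26.8 m for an object 50 m away
```

The baseline grows in direct proportion to the working distance, so a rig sized for a tabletop object becomes impractically wide at tens of meters.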

Line-of-sight systems

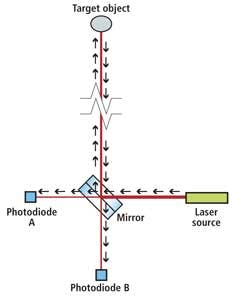

Line-of-sight systems are an alternative approach for long-range 3-D applications. These systems rely on the time-of-flight (TOF) principle of measuring how long it takes laser light to leave a light source, reach an object, and return to a sensor (see Fig. 4).

In TOF systems, light from the source is captured by a pair of photodiodes, the first of which (photodiode A) captures light in the direct line of the laser source. The second (photodiode B) captures light returning after it has first been reflected from a mirror and then back from the target. By measuring the delay in arrival time of the light between the two photodiodes, the distance to each point on the target can be calculated and 3-D images of objects can be recreated.
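The relationship between the measured delay and distance is straightforward: light covers the path to the target and back, so the range is half the delay multiplied by the speed of light. The sketch below assumes that any fixed internal path lengths, such as the mirror, have already been calibrated out. The function name and timestamps are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second, in vacuum

def tof_distance(t_reference_s, t_return_s):
    """Range to the target from the arrival times at the reference
    photodiode (A) and the return photodiode (B), both in seconds."""
    delay = t_return_s - t_reference_s
    if delay < 0:
        raise ValueError("return pulse cannot arrive before the reference pulse")
    # The pulse travels out and back, so halve the round-trip path.
    return SPEED_OF_LIGHT * delay / 2.0

# Hypothetical measurement: return pulse arrives 333.6 ns after the reference.
print(f"{tof_distance(0.0, 333.6e-9):.2f} m")
```

The same relationship shows why timing electronics dominate the design. Resolving 1 cm in range means resolving roughly 67 ps in arrival time.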

Aside from systems that directly measure TOF, there are a number of imaging systems that indirectly measure the TOF of an NIR light pulse spread over a wide field of view (FOV). Much research is being conducted in this field and a number of so-called "flash IR" TOF systems are under development.

Flash TOF systems employ an array of laser diodes that emit modulated light pulses. To achieve TOF measurements, the imaging sensor samples the returned modulated signal several times in each cycle of the waveform. From these samples, the phase of the signal at each pixel is calculated to produce a distance measurement.
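A widely used way to recover phase from a sampled waveform is to take four samples per modulation cycle, spaced a quarter period apart. The article does not state how many samples any given sensor takes, so the four-sample scheme, the 30 MHz modulation frequency, and the function names below are assumptions made purely for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second

def phase_to_distance(a0, a1, a2, a3, mod_freq_hz):
    """Distance at one pixel from four samples of the returned signal taken
    at 0, 90, 180 and 270 degrees of the modulation cycle."""
    # The differences cancel the constant background level.
    phase = math.atan2(a1 - a3, a0 - a2) % (2.0 * math.pi)
    # A full 2*pi of phase corresponds to a round trip of one modulation wavelength.
    return SPEED_OF_LIGHT * phase / (4.0 * math.pi * mod_freq_hz)

def unambiguous_range(mod_freq_hz):
    """Largest distance that can be measured before the phase wraps around."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)

def simulate_samples(distance_m, mod_freq_hz, amplitude=1.0, offset=2.0):
    """Ideal, noise-free samples for a target at the given distance."""
    phase = 4.0 * math.pi * mod_freq_hz * distance_m / SPEED_OF_LIGHT
    return tuple(offset + amplitude * math.cos(k * math.pi / 2.0 - phase)
                 for k in range(4))

freq = 30e6  # assumed modulation frequency
samples = simulate_samples(2.0, freq)
print(f"recovered distance: {phase_to_distance(*samples, freq):.3f} m")
print(f"unambiguous range:  {unambiguous_range(freq):.3f} m")
```

Because phase repeats every cycle, targets beyond the unambiguous range alias back to shorter distances. Lowering the modulation frequency extends that range at the cost of depth resolution.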

The absolute accuracy of a TOF system such as the SR4000 from Mesa Imaging (Zurich, Switzerland; www.mesa-imaging.ch) is independent of distance from an object (see Fig. 5). In nominal operation, the company claims an absolute accuracy of better than 1 cm.

Time-of-flight systems feature the advantage of being able to perform measurements in real time. Since the camera provides depth values for each pixel, there is also no need to perform complex calculations to compute these values on a host PC.

Because these systems use laser light sources and not white light, they can operate in bright ambient light environments. However, the sensor resolutions are limited compared to 3-D systems used in machine-vision applications. It is worth noting, though, that since the video game industry is driving the development of flash TOF systems, their prices should continue to fall, making them attractive to developers of industrial systems. Case in point, there is an ever-growing computer vision community devoted to Microsoft Kinect.

Editor's note: Read Parts I (http://bit.ly/KAku7Z) and II (http://bit.ly/KgzkDs) of this Leading Edge series to get details on how 3-D imaging fits into machine-vision applications and how active stereo systems work.