Computer vision takes to the skies with Phantom 4 drone from DJI

DJI's (Shenzhen, China; www.dji.com) Phantom 4 features 4k cameras and on-board vision processing to provide sense-and-avoid capabilities, along with automated subject tracking.

The Phantom 4 camera features 4k video capture at 30fps and full HD 1080p at 120fps, along with an aspherical lens with a 94° field of view. A forward obstacle sensing system allows the Phantom 4 to stop and hover if it sees an obstacle coming close. In TapFly, ActiveTrack, and Smart Return Home modes, it will avoid an obstacle or hover to avoid collision.

Additionally, the drone will provide warnings so that the operator always knows what is happening. A downward-facing vision positioning module contains dual cameras and dual ultrasonic sensors. ActiveTrack, which provides automated subject tracking capabilities, uses a combination of computer vision, object recognition, and machine learning. While operating, ActiveTrack can continue following an obstacle, avoid it or stop.

Researchers use IR camera to date Rembrandt sketches

A team of researchers at the Fraunhofer Institute for Wood Research, Wilhelm-Klauditz-Institut (WKI; Braunschweig, Germany; www.wki.fraunhofer.de/en.html) has developed a method that uses an IR camera to date Rembrandt art sketches precisely.

The findings are the result of collaboration with the Herzog-Anton-Ulrich-Museum and the Institute for Communications Technology at the Technical University of Braunschweig (Germany). In the research, an IR camera was used to scan what were assumed to be sketches from Rembrandt. When imaging the sketches, the team searched for a watermark to provide information about the time period in which the paper and the artwork were produced.

"We didn't scan the papers with visible light, but instead used infrared radiation," says researcher Peter Meinlschmidt at the WKI. "The frequently used iron gall ink is transparent under this light so that the watermark is revealed."

The researchers look for deviations in the thermal radiation of the sketches, with the camera resolving temperature differences of 15mK. To do this, they clamp the paper in a passe-partout, which is positioned between an infrared heater and the camera. Heat must be evenly distributed, so the paper is kept a suitable distance from the heat source. While the heat is harmless to the paper, speed is of the essence, as the watermark is visible for only a few seconds. The longer the paper is exposed to the heat source, the more the ink-darkened areas increase in temperature, which interferes with the temperature variations caused by the watermark. Using this method, the team has been able to date about 60 sketches associated with Rembrandt.

Since the IR cameras currently used are expensive, the team is working with the Saxon State and University Library in Dresden, Germany to lower the system's price from about 80,000 Euros to about 20,000-30,000 Euros using a camera that can determine temperature differences of about 50mK.

"We plan to compensate for this by using Gaussian filters on the captured image to remove noise," Meinlschmidt said.
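The trade-off Meinlschmidt describes can be illustrated with a minimal sketch. It is not the WKI team's processing chain: the frame size, the purely random 50mK noise model, the filter width (`sigma=1.5`), and all function names are assumptions made for illustration. It applies a separable Gaussian filter to a synthetic thermal frame and compares the noise level before and after.

```python
import math
import random


def gaussian_kernel(sigma, radius=None):
    """Return a normalized 1-D Gaussian kernel (weights sum to 1)."""
    if radius is None:
        radius = max(1, int(3 * sigma + 0.5))  # cover roughly +/- 3 sigma
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_rows(image, kernel):
    """Convolve every row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                xi = min(max(x + k - radius, 0), width - 1)
                acc += w * row[xi]
            new_row.append(acc)
        out.append(new_row)
    return out


def transpose(image):
    """Swap rows and columns of a list-of-lists image."""
    return [list(col) for col in zip(*image)]


def gaussian_blur(image, sigma):
    """2-D Gaussian filter as two 1-D passes (rows, then columns)."""
    kernel = gaussian_kernel(sigma)
    blurred = convolve_rows(image, kernel)
    return transpose(convolve_rows(transpose(blurred), kernel))


def std_dev(values):
    """Population standard deviation of a flat list of numbers."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


# Assumed test data: a uniform-temperature frame with 50 mK random noise.
random.seed(0)
NOISE_K = 0.050  # assumed sensor noise in kelvin (50 mK)
frame = [[random.gauss(0.0, NOISE_K) for _ in range(32)] for _ in range(32)]

smoothed = gaussian_blur(frame, sigma=1.5)

noise_before = std_dev([v for row in frame for v in row])
noise_after = std_dev([v for row in smoothed for v in row])
print(f"noise before: {noise_before * 1000:.1f} mK")
print(f"noise after:  {noise_after * 1000:.1f} mK")
```

A wider filter suppresses more noise but also blurs fine detail, so in practice the filter width would have to be balanced against the line width of the watermark being sought.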

Engineers develop ICs to run artificial neural networks for image analysis

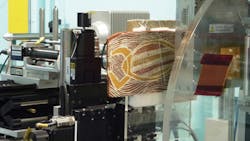

Engineers and researchers from MIT (Cambridge, MA, USA; www.mit.edu), Nvidia (Santa Clara, CA, USA; www.nvidia.com), and the Korea Advanced Institute of Science and Technology (KAIST; Daejeon, South Korea; www.kaist.edu) recently showcased prototypes of low-power ICs designed to run artificial neural networks for image analysis applications. The teams presented the prototypes at the IEEE International Solid-State Circuits Conference in San Francisco, CA, USA.

Vivienne Sze, an electrical engineering professor at MIT, worked with Joel Emer, an MIT computer science professor and a research scientist at Nvidia, to develop Eyeriss. The Eyeriss device is designed to run a convolutional neural network and consumes 0.3W of power when running AlexNet, an image classification algorithm.

In addition, an IC from KAIST was demonstrated at the IEEE conference. This device was developed by Lee-Sup Kim, a professor and head of the Multimedia VLSI Laboratory, along with a team at KAIST. The device is designed to be a general vision processor, and Kim notes that such circuits could be useful in applications such as airport face-recognition systems and robot navigation.

Additionally, Hoi-Jun Yoo, head of the System Design Innovation and Application Research Center at KAIST, described a system for self-driving cars that is designed to run convolutional networks that identify objects in the visual field. Using a recurrent neural network, the system tracks a moving object and predicts its intention. Consuming 330mW, it can predict the intention of 20 objects at once with only a 1.24ms lag time. Furthermore, because it features a deep-learning core, the system continues to train while on the road.

X-ray technology helps analyze Aboriginal artifacts

A team of researchers from Flinders University (Adelaide, Bedford Park, Australia; www.flinders.edu.au) has used X-ray technology from the Australian Synchrotron Facility (Clayton, Victoria, Australia; www.synchrotron.org.au) to analyze Aboriginal artifacts without the need for sample extraction.

Dr. Rachel Popelka-Filcoff of Flinders University said that while the technique is often used on canvas paintings, this was the first time it had been applied to Indigenous objects. "This method provides higher resolution information and an alternative to traditional destructive testing," she said. "In the case of investigating Indigenous objects, this technique has unparalleled resolution over existing techniques."

Compiling the data into elemental maps allowed for further insight into the composition, application, and layering of natural pigment on the micron scale, as well as further cultural interpretation of the objects, she noted.

Along with researchers from the South Australian Museum (Adelaide, Bedford Park, Australia; www.samuseum.sa.gov.au) and the University of South Australia (Adelaide, Bedford Park, Australia; www.unisa.edu.au), Popelka-Filcoff used the X-ray fluorescence microscopy (XFM) beamline at the Australian Synchrotron to analyze a boomerang and a bark painting from the museum's Australian Aboriginal Culture Collection.

Using the technology, researchers can obtain data about the composition of the mineral pigments without disturbing the object, enhancing studies of Indigenous technology and cultural conservation.