Researchers at MIT develop system to assist autonomous vehicles in bad driving conditions

LiDAR technology is a central feature in many autonomous vehicles' vision systems, but it has difficulty dealing with fog and dust. Autonomous vehicles that can't account for poor driving conditions require the intervention of human drivers. Researchers at MIT have made a leap toward solving this challenge.

Sub-terahertz radiation occupies wavelengths between microwaves and infrared on the electromagnetic spectrum. Radiation at these wavelengths can be easily detected through fog and dust clouds and used to image objects via transmission, reflection, and measurement of absorption, much like how a LiDAR system operates.

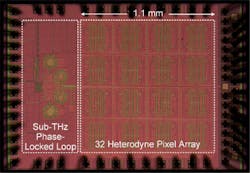

The IEEE Journal of Solid-State Circuits on February 8 published a paper in which MIT researchers describe a sub-terahertz receiving array that can be built on a single chip and that performs to standards traditionally only possible with large and expensive sub-terahertz sensors built using multiple components.

The array is designed around new heterodyne detectors, schemes of independent signal-mixing pixels, that are much smaller than traditional heterodyne detectors, which are difficult to integrate into chips.

Each pixel, called a heterodyne pixel, generates a frequency difference between a pair of incoming sub-terahertz signals. Each individual pixel also generates local oscillation, an electrical signal that alters a received frequency. This produces a signal in the megahertz range that can be easily interpreted by the prototype's processor.
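The mixing step described above can be illustrated with a short numerical sketch. This is not the MIT team's implementation; it only shows the general heterodyne principle that multiplying a received tone by a local oscillator tone yields a component at their frequency difference. The function name `mix_down` and all frequencies, which are scaled down to arbitrary units so the simulation stays small, are assumptions made for illustration.

```python
import numpy as np


def mix_down(f_signal, f_lo, sample_rate, duration, cutoff):
    """Return the strongest frequency below `cutoff` after mixing two tones.

    Hypothetical helper for illustration only. All frequencies share the
    same arbitrary unit and are far lower than real sub-terahertz signals.
    """
    # Sample times for the simulated received signal and local oscillator.
    t = np.arange(int(sample_rate * duration)) / sample_rate
    received = np.sin(2 * np.pi * f_signal * t)
    local_osc = np.sin(2 * np.pi * f_lo * t)

    # Multiplying the tones produces components at the difference
    # (f_signal - f_lo) and the sum (f_signal + f_lo) of the frequencies.
    mixed = received * local_osc

    # Inspect the spectrum and keep only the low band, which stands in
    # for the low-pass filtering that isolates the difference frequency.
    spectrum = np.abs(np.fft.rfft(mixed))
    freqs = np.fft.rfftfreq(mixed.size, d=1.0 / sample_rate)
    band = (freqs > 0) & (freqs < cutoff)
    return float(freqs[band][np.argmax(spectrum[band])])


# Assumed example values: a 50-unit received tone and a 48-unit local oscillator.
difference = mix_down(f_signal=50.0, f_lo=48.0, sample_rate=400.0,
                      duration=10.0, cutoff=10.0)
print(difference)
```

With these assumed inputs the strongest low-band component sits at the 2-unit difference between the two tones, which mirrors how a sub-terahertz input ends up as a much lower frequency that ordinary electronics can process.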

Synchronization of these local oscillation signals is required for the successful operation of a heterodyne pixel array. Previous sub-terahertz receiving arrays featured centralized designs that distributed local oscillation signals to all pixels in the array from a single hub. The larger the array, the less power is available to each pixel, which results in weak signals and low sensitivity that make object imaging difficult.

In the new sub-terahertz receiving array developed at MIT, each pixel independently generates this local oscillation signal. Each pixel's oscillation signal is synchronized with neighboring pixels. This results in each pixel having more output power than would be provided by a single hub.
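The scaling argument can be made concrete with a little arithmetic. The sketch below assumes an idealized, lossless hub that splits its local oscillation power equally among all pixels. The function name and the power value are hypothetical and do not come from the paper.

```python
def centralized_power_per_pixel(hub_power, pixel_count):
    """Power each pixel receives when one hub feeds the whole array.

    Assumes an ideal equal split with no distribution losses, so real
    centralized designs would deliver even less than this.
    """
    if pixel_count < 1:
        raise ValueError("pixel_count must be at least 1")
    return hub_power / pixel_count


# Assumed hub output of 1.0 (arbitrary units) shared across growing arrays.
for pixels in (4, 8, 16, 32):
    share = centralized_power_per_pixel(1.0, pixels)
    print(f"{pixels} pixels: {share:.4f} units per pixel")
```

Under this assumption the per-pixel share falls in proportion to the array size, whereas in a decentralized design each pixel's local oscillation power is set by its own oscillator and does not shrink as pixels are added.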

Because the new sensor uses an array of pixels, the device can produce high-resolution images that can be used to recognize objects as well as detect them. The prototype has a 32-pixel array integrated on a 1.2 mm square device. A chip this small becomes a viable option for deployment on autonomous cars and robots.

“Our low-cost, on-chip sub-terahertz sensors will play a complementary role to LiDAR for when the environment is rough,” said Ruonan Han, director of the Terahertz Integrated Electronics Group in the MIT Microsystems Technology Laboratories and co-author of the paper.

Share your vision-related news by contacting Dennis Scimeca, Associate Editor, Vision Systems Design

About the Author

Dennis Scimeca

Dennis Scimeca is a veteran technology journalist with expertise in interactive entertainment and virtual reality. At Vision Systems Design, Dennis covered machine vision and image processing with an eye toward leading-edge technologies and practical applications for making a better world. Currently, he is the senior editor for technology at IndustryWeek, a partner publication to Vision Systems Design.