Six Challenges of Integrating Edge AI into Industrial Vision Systems

What You Will Learn

- AI-powered vision systems are improving quality control and safety in manufacturing, but meeting real-time and privacy requirements calls for edge deployment. Engineers face challenges in model selection (e.g., ResNet, YOLO, MobileNet), hardware compatibility, and dataset curation, and low-code tools and open-source frameworks are increasingly used to lower the barrier to entry.

- How engineers can develop vision systems that balance performance and maintainability across a fragmented landscape of neural processing units (NPUs), toolchains, and inference engines.

- Why designing for long-term viability matters in a fast-evolving machine learning ecosystem: success lies in building flexible, scalable edge AI systems that can adapt with minimal revalidation.

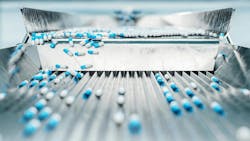

Industrial manufacturers are increasingly turning to machine learning and AI-powered vision systems to improve both safety and quality. In sectors like pharmaceuticals, electronics, and automotive, AI-based inspection detects anomalies, ensures product consistency, and validates compliance with safety protocols.

But to meet the strict latency, bandwidth and privacy requirements of these use cases, inference must happen at the edge. This is where development becomes complex. Edge AI is still maturing, and while interest is high, most companies are struggling to implement it. Manufacturers know they need to modernize, but many don’t know where to begin.

This article explores six common challenges facing industrial organizations as they seek to integrate edge AI into their vision systems and explains how a new wave of embedded processing solutions is helping enable more intelligence at the edge.

1. Development Complexity: The Steep Learning Curve

For teams new to edge AI, the ramp-up can be steep. Building an effective AI vision pipeline requires:

- Curating high-quality datasets for model training

- Selecting the right neural network architecture, such as:

  - ResNet: A deep convolutional neural network (CNN) architecture that uses residual (skip) connections to mitigate the vanishing gradient problem. These connections let each block learn a residual on top of an identity mapping, which makes very deep networks easier to train. It’s useful for image classification or feature extraction in vision tasks.

  - MobileNet: A family of lightweight CNN architectures optimized for mobile and embedded vision applications. It uses depthwise separable convolutions to drastically reduce the number of parameters and computations. It’s useful for on-device image recognition or object detection on smartphones or edge devices.

  - YOLO (You Only Look Once): A real-time object detection system that frames detection as a single regression problem, predicting bounding boxes and class probabilities directly from full images in one evaluation. It’s useful for fast object detection in real-time video, robotics, and surveillance.

- Ensuring that every model layer is supported by the chosen hardware and inference engine, so the model runs entirely on the edge device without relying on cloud servers. (An inference engine is the software component, often optimized for specific hardware, that executes a pre-trained AI model to generate predictions, or "inferences".)
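Part of the compatibility check in the last step can be automated by comparing the operators a model uses against those the target accelerator supports. The sketch below is a minimal illustration under stated assumptions: the `NPU_SUPPORTED_OPS` set and the hand-written layer table are hypothetical, and a real workflow would read both from the vendor toolchain and the exported model graph.

```python
from dataclasses import dataclass

# Hypothetical operator list for an NPU compiler; real lists come from the
# vendor's toolchain documentation and differ per device and SDK version.
NPU_SUPPORTED_OPS = {"Conv", "Relu", "Add", "MaxPool", "GlobalAveragePool", "Gemm"}


@dataclass
class Layer:
    name: str
    op_type: str


def partition_layers(layers, supported_ops):
    """Split layers into those the accelerator can run and those that would
    fall back to the CPU (or block compilation entirely)."""
    on_npu, fallback = [], []
    for layer in layers:
        (on_npu if layer.op_type in supported_ops else fallback).append(layer)
    return on_npu, fallback


# Toy model description; in practice this would come from the exported graph
# (for example, the node list of an ONNX file).
model = [
    Layer("stem_conv", "Conv"),
    Layer("stem_act", "Relu"),
    Layer("block1_add", "Add"),
    Layer("head_act", "HardSwish"),
    Layer("pool", "GlobalAveragePool"),
    Layer("classifier", "Gemm"),
]

on_npu, fallback = partition_layers(model, NPU_SUPPORTED_OPS)
for layer in fallback:
    print(f"unsupported on NPU: {layer.name} ({layer.op_type})")
```

Flagging the single unsupported activation early lets a team swap it for a supported one before training, rather than discovering a slow CPU fallback after deployment.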

Each step requires specialized expertise. And even when off-the-shelf models are used, tuning and adapting them for real-time industrial environments can be daunting.

To accelerate the process, developers are turning to low-code/no-code development stacks built on open-source edge AI frameworks and well-documented APIs. These tools hide much of the complexity of machine learning, enabling teams to build, test, and deploy models more quickly without requiring deep-learning expertise.

2. Dependency Risks: Managing Interrelated Software Components

The software environment for running edge AI is complex. Each neural processing unit (NPU) vendor typically provides its own set of tools, compilers, and runtime libraries, many of which are tightly coupled to specific versions of the host operating system, runtime environment, and model frameworks. Updating just one component can break compatibility with others.

The challenge can be simplified through a containerized architecture: a deployment model in which AI components, such as inference engines, models, APIs, and preprocessing code, are encapsulated in containers so they run consistently across different environments. AI applications often have complex dependencies: specific Python versions, libraries (e.g., TensorFlow, PyTorch), hardware-specific runtime libraries (e.g., CUDA), and custom code. Containers simplify deployment by packaging these together.

Pre-integrated and validated software stacks remove the guesswork, accelerating time-to-value while reducing support burden.
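Because updating one component can break the others, it helps to record the exact versions that were validated together and check devices against that record. A minimal sketch, assuming a hypothetical manifest format; the component names and version strings are illustrative, not any vendor's actual stack.

```python
# Hypothetical "validated stack" manifest: component versions that were
# tested together. Names and version strings are illustrative only.
VALIDATED_STACK = {
    "os_image": "2024.06",
    "npu_driver": "3.2.1",
    "npu_compiler": "3.2.0",
    "inference_runtime": "1.17.3",
}


def find_drift(installed, validated):
    """Return components whose installed version differs from (or is
    missing relative to) the validated manifest, as (expected, actual)."""
    drift = {}
    for component, expected in validated.items():
        actual = installed.get(component)
        if actual != expected:
            drift[component] = (expected, actual)
    return drift


# A device where only the inference runtime was upgraded in the field.
installed = dict(VALIDATED_STACK, inference_runtime="1.18.0")
drift = find_drift(installed, VALIDATED_STACK)
for name, (want, have) in sorted(drift.items()):
    print(f"{name}: validated {want}, found {have}")
```

A container image that pins these same versions makes drift unlikely; a check like this catches the cases where a device was updated outside that process.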

3. Optimization Overload: Tuning Models for Edge Hardware

Even after a model is selected, it often needs to be optimized to run efficiently on constrained edge hardware. This involves:

- Quantizing the model. Quantization makes a model smaller and faster by converting its weights (and often its activations) from FP32 (32-bit floating point) to INT8 (8-bit integer), or sometimes to 16-bit formats. Imagine your model is a detailed photo with millions of colors (FP32). On the edge, you often don’t need that level of detail, just a simplified version with fewer colors (INT8) that still gets the job done. The result is faster inference, lower power consumption, and a smaller memory footprint.

- Applying pruning and compression. Pruning removes parts of a machine learning model that contribute little to its output, like trimming small branches from a tree without changing its overall shape. Model compression, more broadly, makes a model smaller and more efficient without significantly reducing its accuracy. It’s a bit like zipping a large file so it takes up less space, except that a compressed model can also run faster.

- Tailoring the inference pipeline to use hardware accelerators such as the NPU, digital signal processor (DSP), and image signal processor (ISP).
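The FP32-to-INT8 conversion described in the quantization step can be made concrete with affine (scale and zero-point) quantization, one widely used scheme. This is a minimal per-tensor sketch in plain Python with hypothetical weight values; production toolchains typically also calibrate activation ranges on sample data and may quantize per channel.

```python
def int8_quant_params(values):
    """Scale and zero point for asymmetric (affine) per-tensor INT8 quantization."""
    # The representable range must include 0.0 so zero is encoded exactly.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # 256 levels; guard against all-zero input
    zero_point = round(-128 - lo / scale)  # INT8 code that represents 0.0
    return scale, zero_point


def quantize(values, scale, zero_point):
    # Map each FP32 value to its nearest INT8 code, clamped to [-128, 127].
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def dequantize(codes, scale, zero_point):
    # Approximate reconstruction of the original FP32 values.
    return [(q - zero_point) * scale for q in codes]


weights = [-0.62, -0.1, 0.0, 0.33, 0.91]  # hypothetical FP32 weights
scale, zero_point = int8_quant_params(weights)
codes = quantize(weights, scale, zero_point)
restored = dequantize(codes, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("INT8 codes:", codes)
print(f"max abs error: {max_error:.4f} (scale {scale:.4f})")
print(f"storage: {4 * len(weights)} bytes as FP32 vs {len(weights)} bytes as INT8")
```

Each value now needs one byte instead of four, and the reconstruction error stays on the order of the step size `scale`, which is the "fewer colors" trade-off in numeric form.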

Doing this manually takes experimentation to find the best balance between performance and accuracy, which demands not only deep knowledge of machine learning and hardware internals but also time-consuming instrumentation and analysis.
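The experimentation loop can be illustrated with unstructured magnitude pruning, one common pruning method: zero the smallest weights at several sparsity levels and measure how far the output moves. This is a toy sketch with hypothetical values; the output error stands in for re-measuring accuracy on a real validation set.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    n_prune = int(len(weights) * sparsity)
    if n_prune == 0:
        return list(weights)
    # Indices sorted by absolute value; the first n_prune are removed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.4, -0.03]  # hypothetical layer
x = [1.0, 2.0, -1.0, 0.5, 1.0, 3.0, -2.0, 1.0]              # hypothetical input
reference = dot(weights, x)

# Sweep sparsity levels and report drift from the unpruned reference.
for sparsity in (0.25, 0.5, 0.75):
    pruned = magnitude_prune(weights, sparsity)
    error = abs(dot(pruned, x) - reference)
    print(f"sparsity={sparsity:.2f} output error={error:.3f}")
```

In this toy case, removing half the weights barely changes the output while removing three quarters changes it substantially. Note that zeroed weights only save time if the runtime or hardware exploits sparsity; otherwise pruning is usually paired with structured removal or compression.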

Companies leading the way in helping proliferate AI at the edge provide automated and semi-automated model optimization workflows, including human-in-the-loop (HITL) model tuning, in which a person guides the AI by correcting it, labeling data, or making decisions when the model is uncertain. These workflows maximize inference performance and offer methods to improve inference accuracy, simplifying the creation of custom models that are edge-optimized and production-grade.
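One simple way to decide "when the model is uncertain" in a HITL workflow is a confidence threshold: confident predictions are accepted automatically, and the rest go to a reviewer whose corrections can later feed retraining. A minimal sketch; the threshold value, class names, and labels are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    image_id: str
    label: str
    confidence: float


@dataclass
class HitlRouter:
    """Accept confident predictions; queue uncertain ones for human review."""
    threshold: float = 0.85  # hypothetical; tune per defect class and risk
    review_queue: list = field(default_factory=list)
    accepted: list = field(default_factory=list)

    def route(self, pred: Prediction) -> str:
        if pred.confidence >= self.threshold:
            self.accepted.append(pred)
            return "auto"
        # Reviewer-corrected labels from this queue become new training data.
        self.review_queue.append(pred)
        return "human_review"


router = HitlRouter()
for p in [
    Prediction("img_001", "scratch", 0.97),
    Prediction("img_002", "ok", 0.62),
    Prediction("img_003", "dent", 0.88),
]:
    print(p.image_id, router.route(p))
```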

Additionally, AI-native embedded processors support hardware-accelerated preprocessing functions via the NPU and ISP, offloading workloads that typically bottleneck the vision pipeline and improving end-to-end speed.
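Why offloading preprocessing helps can be shown with simple pipeline arithmetic. Assuming each stage runs on its own hardware and works on successive frames concurrently, per-frame latency is the sum of the stages, while throughput is capped by the slowest stage. The stage timings below are hypothetical, chosen only to illustrate the effect.

```python
# Hypothetical per-frame stage latencies (ms) with all stages on the CPU.
cpu_only_ms = {"capture": 4.0, "preprocess": 18.0, "inference": 12.0, "postprocess": 3.0}

# Same pipeline with resize/normalize offloaded to the ISP/NPU (assumed figure).
offloaded_ms = dict(cpu_only_ms, preprocess=2.0)


def pipeline_stats(stage_ms):
    """Latency is the sum of stages; a pipelined system's throughput is
    limited by its slowest stage (the bottleneck)."""
    latency_ms = sum(stage_ms.values())
    bottleneck = max(stage_ms, key=stage_ms.get)
    max_fps = 1000.0 / stage_ms[bottleneck]
    return latency_ms, bottleneck, max_fps


for name, stages in (("cpu_only", cpu_only_ms), ("offloaded", offloaded_ms)):
    latency, bottleneck, fps = pipeline_stats(stages)
    print(f"{name}: latency {latency:.0f} ms, bottleneck {bottleneck}, max {fps:.1f} fps")
```

With these assumed numbers, moving preprocessing off the CPU shifts the bottleneck to inference and raises the frame-rate ceiling from about 56 to about 83 fps.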

4. System Fragility: Maintaining and Monitoring AI Systems in Production

Once deployed, AI vision systems must be monitored continuously. Field devices require updates, performance tuning, and issue resolution. Many organizations need methods to integrate, manage, and orchestrate devices at scale.

A robust platform includes fleet management tools built atop a mature container ecosystem. These provide:

- Real-time analytics and events via a dashboard and APIs.

- Visibility into device status, system health, firmware versions and configurations.

- Compatibility with orchestration tools for over-the-air (OTA) updates.

- Diagnostics for tracking inference accuracy, system health, and throughput.
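To make the status and diagnostics items concrete, here is a sketch of the kind of rule a fleet dashboard might evaluate over device reports. The field names, thresholds, and device IDs are hypothetical and do not reflect any specific product's API.

```python
from dataclasses import dataclass

# Hypothetical fleet policy; real thresholds depend on the production line.
EXPECTED_FIRMWARE = "2.4.1"
MIN_FPS = 25.0
MAX_HEARTBEAT_AGE_S = 60


@dataclass
class DeviceStatus:
    device_id: str
    firmware: str
    fps: float
    heartbeat_age_s: int  # seconds since the device last reported in


def health_issues(status):
    """Return a list of human-readable problems for one device."""
    issues = []
    if status.heartbeat_age_s > MAX_HEARTBEAT_AGE_S:
        issues.append("offline")
    if status.firmware != EXPECTED_FIRMWARE:
        issues.append(f"firmware {status.firmware} != {EXPECTED_FIRMWARE}")
    if status.fps < MIN_FPS:
        issues.append(f"throughput {status.fps:.1f} fps below {MIN_FPS}")
    return issues


fleet = [
    DeviceStatus("cam-line1-01", "2.4.1", 30.2, 5),
    DeviceStatus("cam-line1-02", "2.3.9", 29.8, 8),
    DeviceStatus("cam-line2-01", "2.4.1", 14.5, 240),
]
report = {d.device_id: health_issues(d) for d in fleet}
for device_id, issues in report.items():
    print(device_id, "OK" if not issues else "; ".join(issues))
```

Devices flagged for outdated firmware are natural candidates for the next OTA rollout.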

5. Deployment Risk: Future-Proofing in a Rapidly Evolving Ecosystem

The machine learning ecosystem is evolving rapidly. New model architectures, toolchains, and hardware emerge every year. Solutions tightly coupled to a specific stack become obsolete quickly, introducing risk and increasing long-term maintenance costs.

An approach that addresses this challenge is an architecture that abstracts key machine learning subsystems from the underlying platform. This means isolating the core parts of the machine learning system (such as the model runner, inference engine, or data processing pipeline) from the underlying OS, hardware, and software stack. That way, the ML system doesn’t depend on any one specific platform and can more easily run across different devices, OS versions, or hardware types.

This decoupling reduces validation requirements and increases system longevity.
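In code, this kind of decoupling usually means the application depends on a narrow interface while hardware-specific details live in interchangeable backends. A minimal sketch; `InferenceBackend`, `CpuReferenceBackend`, the registry, and the model path are hypothetical names for illustration, and the "model" is a placeholder.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class InferenceBackend(ABC):
    """Narrow interface the application depends on; vendor specifics
    stay inside concrete subclasses."""

    @abstractmethod
    def load(self, model_path: str) -> None: ...

    @abstractmethod
    def infer(self, frame: List[float]) -> Dict[str, float]: ...


class CpuReferenceBackend(InferenceBackend):
    # Hypothetical stand-in; a real backend would wrap a vendor NPU SDK
    # or a portable inference engine.
    def load(self, model_path: str) -> None:
        self.model_path = model_path  # placeholder: nothing is read from disk

    def infer(self, frame: List[float]) -> Dict[str, float]:
        score = sum(frame) / len(frame)  # placeholder "model"
        return {"defect": score, "ok": 1.0 - score}


# Supporting new hardware means registering another backend here.
BACKENDS = {"cpu": CpuReferenceBackend}


def make_backend(name: str, model_path: str) -> InferenceBackend:
    backend = BACKENDS[name]()
    backend.load(model_path)
    return backend


def inspect(backend: InferenceBackend, frame: List[float]) -> str:
    # Application logic sees only the interface, not the hardware.
    scores = backend.infer(frame)
    return max(scores, key=scores.get)


backend = make_backend("cpu", "models/inspector.bin")
print(inspect(backend, [0.9, 0.8, 0.7]))
```

Because `inspect` never touches vendor APIs, a platform change is confined to a new backend class, which narrows what has to be revalidated.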

6. Data Privacy and Traceability: Complying with Industry Regulations

Many industrial customers operate under strict regulatory frameworks. They must ensure:

- Inspection data is traceable for audits and compliance.

- Data stays on-premises to meet data sovereignty laws.

- Edge devices resist tampering and unauthorized access.
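For traceability, one common technique (not one this article prescribes) is a hash-chained audit log kept on the local device or plant server: each inspection record's hash covers the previous entry, so altering any earlier record is detectable. A minimal sketch using Python's standard `hashlib` and `json`; the record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, record):
    body = json.dumps(record, sort_keys=True)  # canonical serialization
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_record(log, record):
    """Append an inspection record chained to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev_hash": prev_hash,
                "hash": _entry_hash(prev_hash, record)})


def verify_chain(log):
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_record(audit_log, {"part": "SN-1001", "result": "pass", "model": "v3.2"})
append_record(audit_log, {"part": "SN-1002", "result": "fail", "model": "v3.2"})
print(verify_chain(audit_log))

audit_log[1]["record"]["result"] = "pass"  # simulated tampering
print(verify_chain(audit_log))
```

Hash chaining makes tampering evident rather than impossible: someone able to rewrite the whole log could recompute every hash, so in practice the latest hash is typically signed or stored somewhere the device cannot modify.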

As demand grows for real-time, intelligent vision capabilities across industries, implementing efficient edge AI systems has become essential. These systems must balance performance, accuracy, and resource constraints while operating independently of the cloud. To achieve this, modern architectures are designed to simplify development, streamline deployment, and ensure long-term adaptability. By abstracting key AI components from the underlying hardware and software stack, organizations can build flexible, scalable solutions that are easier to maintain and optimize—enabling smarter, faster, and more resilient edge applications.

About the Author

Nebu Philips

Nebu Philips is senior director of strategy and business development for Synaptics (San Jose, CA, USA). He is responsible for overseeing strategic initiatives and driving business growth in IoT and edge processor solutions. He holds an MBA in Marketing from Santa Clara University’s Leavey School of Business (Santa Clara, CA, USA), a Master's in Computer Science from the University of Nebraska-Lincoln, and a BE in Computer Science from Motilal Nehru National Institute of Technology Allahabad (MNNIT; Prayagraj, India).

David Steele

David Steele is the director of innovation at Arcturus Networks (Toronto, ON, Canada). He is responsible for edge AI and vision programs. Steele is a 20-year industry veteran who has led programs in disruptive technology areas including embedded Linux, IoT, edge hardware, and voice/video communication. He is a graduate of Toronto Metropolitan University (Toronto, ON, Canada).