What is 3D Machine Vision in Industrial Environments?

What You Will Learn

- Stereo vision relies on passive illumination and is cost-effective, making it a good fit for scenarios such as warehouse object sizing and outdoor applications where lighting conditions are unpredictable.

- Structured light offers high-resolution 3D models and is especially useful for detecting surface imperfections.

- Laser triangulation provides precise measurements for moving objects, making it suitable for industrial inspection and pavement analysis, but it requires object or sensor movement for full data capture.

- Time-of-flight cameras are compact and affordable, but they offer less precision than structured light.

It is essential for engineers working in the machine vision and imaging industry to understand how 3D imaging works and when to incorporate it into systems and applications.

Thanks to input from experts in the field, Vision Systems Design will review the basic modalities of 3D imaging in this article. This includes the pros and cons of each method and when to use them in machine vision use cases. As for the specific 3D methods, we will cover stereoscopic imaging, structured light, and time-of-flight. We will also discuss 3D laser triangulation and touch on LiDAR.

“In all 3D imaging—with a few exceptions like time-of-flight—the cameras start by acquiring just a plain 2D image,” says David Dechow, a machine vision expert and solutions architect for Motion Automation Intelligence (Birmingham, AL, USA).

But how do the 2D images become 3D? Dechow says that most 3D methods use some form of triangulation, meaning that algorithms calculate each pixel’s location in space by matching the same points as seen from different viewpoints or as lit from different directions.

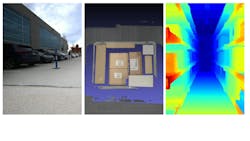

Stereo Vision

A common approach to 3D imaging is stereo vision, which is also called stereoscopic or binocular vision. This method uses “an execution of triangulation the same way that your eyeballs do,” Dechow says. “If you remember the little kid’s trick: hold your finger in front of the eye and focus away from it. You see two different fingers, and it's because the eyes are seeing it from two different planes and that's exactly what happens in stereo.

“It starts with precision calibration. The cameras must be calibrated together and then the images are matched. The technical word is rectified. There’s a calculation done to find out for each visible correlated point in the two images what the difference between image A and image B is. The difference between image A and image B is called the disparity,” Dechow explains.
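For a rectified stereo pair, the standard pinhole-camera relationship between disparity and depth is Z = f × B / d, where f is the focal length in pixels, B is the baseline (the distance between the two camera centers), and d is the disparity in pixels. The function below is a minimal sketch of that conversion; the parameter names and the example rig values are hypothetical and not taken from any product mentioned in this article.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_mm: float) -> float:
    """Return depth (mm) for one correspondence in a rectified stereo pair.

    Uses the pinhole-model relation Z = f * B / d. A disparity of zero or less
    means the point is at infinity or the match failed, so it is rejected.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a valid match")
    return focal_px * baseline_mm / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 1400 px focal length, 100 mm baseline.
    for d in (140.0, 70.0, 35.0):
        z = depth_from_disparity(d, focal_px=1400.0, baseline_mm=100.0)
        print(f"disparity {d:6.1f} px -> depth {z:7.1f} mm")
```

Halving the disparity doubles the depth, which is also why a one-pixel matching error costs more depth accuracy for distant points than for near ones.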

Freya Ma, product manager for Teledyne FLIR Integrated Imaging Solutions (Richmond, BC, Canada), adds, “The challenging part is how to synchronize it and how to match the image you took pixel-by-pixel, point-by-point.”
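Once the images are rectified, the search for each point’s match can be restricted to the same row of the other image. A common textbook approach is block matching with a sum of absolute differences (SAD) cost; the sketch below applies it to a single pair of rectified rows. The article does not say which matching algorithm any vendor uses, and the window size, search range, and toy intensity values here are illustrative assumptions. Commercial stereo cameras generally use more robust matching than this.

```python
def sad(a, b):
    """Sum of absolute differences between two equal-length windows."""
    return sum(abs(x - y) for x, y in zip(a, b))


def match_scanline(left, right, max_disp, half_win=1):
    """Estimate a disparity for each pixel of one rectified left-image row.

    For each pixel x, compare the window centered on x in the left row with
    windows centered on x - d in the right row (d = 0..max_disp) and keep the
    d with the lowest SAD cost. Ties keep the smaller d. Pixels too close to
    the row ends to fit a full window are returned as None.
    """
    n = len(left)
    disparities = [None] * n
    for x in range(half_win, n - half_win):
        patch = left[x - half_win : x + half_win + 1]
        best_d, best_cost = None, float("inf")
        for d in range(max_disp + 1):
            xr = x - d  # candidate position of the same point in the right row
            if xr - half_win < 0:
                break  # window would run off the start of the row
            cost = sad(patch, right[xr - half_win : xr + half_win + 1])
            if cost < best_cost:
                best_d, best_cost = d, cost
        disparities[x] = best_d
    return disparities


if __name__ == "__main__":
    left = [10, 10, 10, 50, 90, 50, 10, 10, 10, 10]
    right = [10, 50, 90, 50, 10, 10, 10, 10, 10, 10]  # same row, feature 2 px further left
    print(match_scanline(left, right, max_disp=3))
```

The bright feature at positions 3 to 5 is matched with a disparity of 2. In the flat region at the right end of the row, however, every candidate window has the same cost, so the result there (0) does not reflect the true shift of 2. That is the texture dependence that makes passive stereo struggle on featureless surfaces.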

Advantages of Stereo Vision

This approach to 3D imaging has numerous advantages for a variety of use cases.

Dechow says cameras relying on stereo vision tend to be less expensive than cameras that use some of the other modalities. The trade-off is that the method “might have less precision in terms of its X, Y, and Z positional information,” Dechow says, because there may be fewer points to correlate with each other.

It is a good choice, he says, when the application calls for “reasonable” precision for a “reasonable” cost. “For example, if I were in a warehouse and I just wanted to see the size of a box that was coming down a conveyor, I might use binocular stereo,” Dechow says.
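As a rough illustration of the box-sizing case, suppose the stereo camera looks straight down at the conveyor and produces a depth map in millimeters. A box’s height is then the belt depth minus the depth of the box’s top surface. This sketch is hypothetical: the depth values, the 20 mm threshold separating box from belt, and the use of the median to suppress bad matches are assumptions, not a vendor’s method.

```python
from statistics import median


def box_height_mm(depth_map, belt_depth_mm, min_height_mm=20.0):
    """Estimate box height from an overhead depth map (list of rows, in mm).

    Pixels at least min_height_mm closer to the camera than the belt are
    treated as box-top pixels; pixels with no stereo match (None) are skipped.
    The median of the box-top depths limits the influence of bad matches.
    """
    top_depths = [
        z
        for row in depth_map
        for z in row
        if z is not None and belt_depth_mm - z >= min_height_mm
    ]
    if not top_depths:
        return 0.0  # nothing on the belt rises above the threshold
    return belt_depth_mm - median(top_depths)


if __name__ == "__main__":
    depth = [
        [1500, 1500, 1500, 1500],
        [1500, 1198, 1202, 1500],
        [1500, 1200, None, 1500],  # None: no correspondence found for this pixel
        [1500, 1500, 1500, 1500],
    ]
    print(box_height_mm(depth, belt_depth_mm=1500.0))
```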

Ma notes that stereo vision also can be an option for bin picking if the application depends on recognizing the objects in the bin. “For many of my customers, they’re doing pick and place, and they need to recognize the object. They need the 3D information and the 2D information to feed to their AI module.”

She notes that stereoscopic vision works indoors or outdoors because it is less reliant on precise control over the illumination. Sunlight, or the lack of it, is unpredictable, and that is one reason why stereo vision often is incorporated into vision systems that operate in outdoor environments, she adds.

However, stereo vision also is less reliable than other 3D methods in low light conditions, such as in poorly lit indoor environments.

Active Versus Passive 3D Imaging

Many machine vision experts describe stereo vision as a passive illumination modality because the system does not project any light that contributes to the construction of the 3D image.

Other modalities, which we discuss below, rely on light projected by the system itself to produce the 3D image, so they are often referred to as active illumination.

Structured Light

Structured light is a different approach to 3D imaging than stereo vision. Here’s how it works: A known pattern of light is projected onto an object or scene—usually a series of lines or a grid. Whatever the pattern, it is distorted by specific features, such as curves or depressions, as it hits the object or scene. A 2D sensor captures this information in a series of images. Algorithms produce a 3D model by analyzing the distortions in the pattern of light as depicted in the images.

“The scanning software’s pattern-recognition and reconstruction algorithms understand that when a strip of light is thicker or thinner in places, then that means those points on the surface are respectively closer or farther away from the camera, while other shapes and structures are determined by varying types of deformations in the structured light patterns,” explains Artec 3D (San Diego, CA, USA) on its website. The company develops handheld and portable 3D scanners.

Depending on the complexity of the pattern projected on the scene or object, structured light can produce more detailed images than stereoscopic imaging because more 3D points can be extracted by the camera. The more 3D points you have, the higher the resolution, Dechow says.

Some of the most sophisticated structured light cameras sold today use what is referred to as coded light, meaning that specific regions within the pattern of light are assigned codes, which helps provide precision in calculations, he says. The codes let the algorithms identify which part of the pattern each camera pixel is seeing.
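One widely used coding scheme, shown here only as an illustration (the article does not say which codes any particular camera uses), is binary-reflected Gray code. The projector shows a sequence of stripe patterns, and each projector column gets a unique on/off sequence across those images, so a camera pixel can determine which column lit it by reading its own bright/dark sequence. Adjacent columns differ in only one bit, which limits the damage from a misread at a stripe edge. The column and pattern counts below are hypothetical.

```python
def gray_encode(n: int) -> int:
    """Binary-reflected Gray code of n."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Inverse of gray_encode: XOR of g with all of its right shifts."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def make_patterns(num_columns: int, num_bits: int):
    """Return num_bits stripe patterns, most significant bit first.

    patterns[k][c] is True if projector column c is lit in image k.
    """
    return [
        [bool((gray_encode(c) >> bit) & 1) for c in range(num_columns)]
        for bit in reversed(range(num_bits))
    ]


def decode_pixel(observed_bits):
    """Recover the projector column from one pixel's lit/unlit sequence (MSB first)."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | int(bit)
    return gray_decode(g)


if __name__ == "__main__":
    patterns = make_patterns(num_columns=8, num_bits=3)
    for k, pattern in enumerate(patterns):
        print(k, "".join("#" if lit else "." for lit in pattern))
    # A pixel lit by projector column 5 sees column 5's bit in each image.
    observed = [pattern[5] for pattern in patterns]
    print(decode_pixel(observed))  # prints 5
```

A real system first has to classify each camera pixel as bright or dark in every image before decoding. The decoded projector column then plays the role that the matched pixel plays in stereo: it supplies the correspondence used for triangulation.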

Advantages of Structured Light Imaging

Structured light is also particularly beneficial for imaging flat, featureless scenes, such as a blank beige wall, where stereoscopic imaging would be useless. Dechow explains, “The stereo camera relies on what we could call a texture in the images—it relies on having core features that can be correlated.”

Structured light can be a good choice for spotting surface imperfections that are difficult to see with the naked eye, such as tiny scratches or dimples. For example, engineers may use this approach to find minute imperfections in the paint on an automobile body shell.

There are disadvantages to this approach. David MT Chung, business executive for robotic computer vision at Orbbec (Troy, MI, USA), says that structured light “is almost always limited to a very narrow field of view.”

That’s because the pattern of light projected on an object becomes less dense as the distance between the sensor and the object increases, leaving fewer points from which to calculate the 3D information.
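The loss of density with distance follows from simple geometry. If a projector spreads N stripes across a horizontal field angle θ, the width it covers at distance Z is 2 × Z × tan(θ/2), so the spacing between stripes grows in proportion to distance. The projector values below are hypothetical, and the model ignores lens distortion.

```python
import math


def stripe_spacing_mm(distance_mm: float, field_angle_deg: float, num_stripes: int) -> float:
    """Spacing between adjacent projected stripes at a given working distance.

    Assumes an ideal projector whose stripes are evenly spread across the
    horizontal field angle, hitting a flat target that faces the projector.
    """
    width_mm = 2.0 * distance_mm * math.tan(math.radians(field_angle_deg) / 2.0)
    return width_mm / num_stripes


if __name__ == "__main__":
    # Hypothetical projector: 60-degree field angle, 1000 stripes.
    for z in (500.0, 1000.0, 2000.0):
        print(f"{z:6.0f} mm -> {stripe_spacing_mm(z, 60.0, 1000):.2f} mm between stripes")
```

Doubling the working distance doubles the gap between stripes, so a small feature that falls between two stripes at a longer range produces no 3D points at all.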

Structured light also typically requires consistent motion: either a stationary object or one moving at a constant rate.

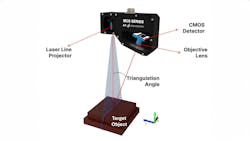

3D Laser Triangulation

3D laser triangulation, or profilometry, has some similarities to structured light. The difference is that in laser triangulation, a laser projects a single line onto the scene, and an image sensor records it. Because the sensor views the line from a known angle, changes in surface height shift the line’s position in the image, and triangulation converts that shift into height. 3D models are built line-by-line.
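To build each profile, the software first has to find where the laser line falls in every image column. A simple, commonly taught approach is to take the brightest pixel in each column and refine it to sub-pixel precision with an intensity-weighted centroid of its neighbors. The sketch below assumes the line runs roughly horizontally across the image; the threshold and window size are hypothetical, and commercial sensors may use different, more robust peak detectors.

```python
def laser_line_rows(image, min_peak=50, half_win=2):
    """Return the sub-pixel row position of the laser line in each image column.

    image is a list of rows of non-negative intensities. For each column, take
    the brightest row, then compute an intensity-weighted centroid over rows
    within half_win of it. Columns whose peak is below min_peak (no line
    visible, for example because of occlusion) return None.
    """
    num_rows, num_cols = len(image), len(image[0])
    positions = []
    for c in range(num_cols):
        column = [image[r][c] for r in range(num_rows)]
        peak = max(range(num_rows), key=lambda r: column[r])
        if column[peak] < min_peak:
            positions.append(None)
            continue
        lo, hi = max(0, peak - half_win), min(num_rows, peak + half_win + 1)
        weight = sum(column[lo:hi])
        positions.append(sum(r * column[r] for r in range(lo, hi)) / weight)
    return positions


if __name__ == "__main__":
    image = [
        [0, 0, 0],
        [0, 100, 0],
        [100, 100, 0],
        [0, 0, 0],
        [0, 0, 10],  # column 2 only has a faint reflection, below the threshold
    ]
    print(laser_line_rows(image))
```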

“There’s a known angle, and so it can view height differences because the detector is viewing it from that known angle,” says Gretchen Alper, business director, North America, for AT Sensors (Bad Oldsloe, Germany).
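Alper’s “known angle” is what turns a shift in the line’s image position into a height. In one simple geometry, assumed here for illustration rather than taken from any specific sensor, the camera looks straight down and the laser sheet strikes the surface at an angle θ from vertical. A surface raised by h moves the laser line sideways by h × tan(θ), so h = Δx / tan(θ), where Δx is the observed shift converted from pixels to millimeters. The pixel scale and angle values are hypothetical.

```python
import math


def height_from_shift(shift_px: float, mm_per_px: float, laser_angle_deg: float) -> float:
    """Height (mm) above the reference plane from the laser line's lateral shift.

    Simplified geometry: the camera looks straight down (perspective ignored)
    and the laser sheet is tilted laser_angle_deg from vertical. A raised
    surface moves the line sideways by h * tan(angle), so h = shift / tan(angle).
    """
    if not 0.0 < laser_angle_deg < 90.0:
        raise ValueError("laser angle must be between 0 and 90 degrees")
    shift_mm = shift_px * mm_per_px
    return shift_mm / math.tan(math.radians(laser_angle_deg))


if __name__ == "__main__":
    # Hypothetical setup: 0.05 mm per pixel, line shifted by 20 pixels.
    for angle in (30.0, 45.0, 60.0):
        print(f"{angle:4.0f} deg -> {height_from_shift(20, 0.05, angle):.3f} mm")
```

For the same height, a larger angle produces a larger shift in the image and therefore finer height resolution per pixel, at the cost of more shadowing behind tall features.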

The method provides accurate dimensional measurements, she adds, making it useful for metrology applications.

A key characteristic of laser triangulation is that “you have to have movement. So, the part has to be moving, or the sensor has to be moving, otherwise it would just get one profile of the object. So that's where it definitely is a little different than some of the other principles,” Alper adds.

She says the method is often used to inspect items moving on a conveyor belt. The sensor also can be mounted on a robot end effector to scan objects, in which case the movement of the robotic arm provides the necessary motion.

Another application is pavement inspection, in which the 3D laser scanner is mounted on a vehicle driving along a road or highway. The vehicle provides the motion, and the scans are used to assess the condition of the pavement.

Advantages of 3D Scanning for Industrial Inspection

Speaking of inspection use cases, David Wyatt, founder of Automation Doctor (Jacksonville Beach, FL, USA), adds, “If the part is already moving at a fixed rate or at a rate that can be triggered with an encoder, the scanning techniques are wonderful.”
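The encoder is what makes line-by-line scanning work: each profile needs a known position along the direction of travel. The minimal sketch below assumes a hypothetical encoder resolution and a sensor that returns one list of heights per trigger, and stacks the profiles into (x, y, z) points with y taken from the encoder count. The function and parameter names are illustrative, not any sensor’s API.

```python
def profiles_to_points(profiles, encoder_counts, mm_per_count, x_pitch_mm):
    """Combine laser profiles into a list of (x, y, z) points in millimeters.

    profiles[i] is the height profile captured at encoder_counts[i]; each
    profile holds one height per sample along the laser line (None = no data).
    y comes from the encoder count, x from the sample index and lateral pitch.
    """
    if len(profiles) != len(encoder_counts):
        raise ValueError("one encoder reading is required per profile")
    points = []
    for profile, count in zip(profiles, encoder_counts):
        y = count * mm_per_count
        for i, z in enumerate(profile):
            if z is not None:
                points.append((i * x_pitch_mm, y, z))
    return points


if __name__ == "__main__":
    profiles = [[0.0, 1.0], [0.5, None]]
    print(profiles_to_points(profiles, encoder_counts=[0, 4], mm_per_count=0.5, x_pitch_mm=0.25))
```

Because y comes from the encoder rather than from elapsed time, the points land in the right place along the part even if the conveyor speed drifts.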

What types of products? Alper says that 3D laser scanning is being applied to EV battery inspection and food inspection, among others.

To control the impact of ambient light indoors or sunlight outdoors, optical filters that pass mainly the laser’s wavelength are placed in front of the detector. Typically, the laser light is a specific color, such as red or blue, chosen based on the item being imaged.

For example, Chung says, “If they're going to do a tire and it's black, red is going to have the best response.”

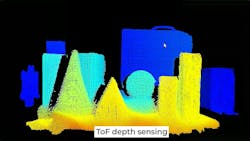

Time-of-Flight

As mentioned earlier, one type of 3D imaging works differently than the others because it doesn’t rely on triangulation, and that method is time-of-flight (ToF) imaging. “Time of flight creates depth information by calculating the time it takes for light projected from a camera to travel to the object and back. So, for every single pixel, I’m calculating how fast it bounced back,” Chung says.
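The basic time-of-flight relation is d = c × t / 2: the light travels to the object and back, so the measured round-trip time is halved. The sketch below applies it per pixel and also shows why ToF electronics need very fine timing. The round-trip times are made-up values. Many ToF cameras estimate this delay indirectly, for example from the phase shift of modulated light, rather than timing a single pulse, but the distance relation is the same.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target from the light's measured round-trip time."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def depth_image(round_trip_times_s):
    """Convert a 2D grid of per-pixel round-trip times (s) into distances (m)."""
    return [[tof_distance_m(t) for t in row] for row in round_trip_times_s]


if __name__ == "__main__":
    # Hypothetical 2 x 2 sensor; about 6.67 ns of round trip is roughly 1 m.
    print(depth_image([[6.671e-9, 6.7e-9], [1.334e-8, 2.0e-8]]))
    # Round-trip time change for a 1 mm depth change: about 6.7 picoseconds.
    print(2 * 0.001 / SPEED_OF_LIGHT_M_PER_S)
```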

Dechow adds, “ToF is closely related to another 3D imaging technique called LiDAR. ToF can also be called ‘flash LiDAR’ where the distance information for all of the pixels is acquired at the same time.”

LiDAR (light detection and ranging) illuminates the surface of an object with laser beams and then measures the return time of the light. It is used in a variety of applications, such as advanced driver assistance systems (ADAS) in vehicles, indoor and outdoor terrain mapping, and industrial quality control.

Advantages of Time-of-Flight

ToF cameras come in smaller packages and are cheaper than structured light systems, he adds. The technology is “easy to implement as well. You just hang the camera, and it runs.” You don’t need an external light source, he notes.

Ma says ToF is “actually faster than stereo vision, but it requires a controlled environment without interference from other light sources, including ambient light.”

For example, Ma says if you want to incorporate time-of-flight cameras into mobile robots operating in a warehouse, you could run into issues with uncontrolled light. That’s because all the robots are projecting light, and those light sources can interfere with each other.

Dechow adds that the precision of depth measurement is less than what is possible with structured light.

In addition to industrial use cases, Chung says ToF often is used in facial and body scanning applications.

When is 3D Imaging the Correct Choice for a Machine Vision Application?

3D imaging is not always necessary. 2D imaging often works just fine and is less expensive. So, when should you consider 3D over 2D?

In Wyatt’s opinion, there are two scenarios.

“The first most important scenario is if you can't light it (the object or scene) with 2D. Because the product changes so much, you cannot find a stable lighting scenario that works for more than a couple of days,” he says. “If it's not stable because they have different colors and they have different sizes and you can't find one lighting scenario that in a 2D world will do the job, at that point you really don't have any choice but to do a three-dimensional inspection.”

The second scenario is “when you have a volumetric piece of data that you need to determine,” he says.

For example, he says, when he worked for General Motors, the company added solder dots to electronic components to strengthen the bond with a substrate such as a circuit board.

When inspecting the solder dots using images taken from above, “you don't see the mound. You see the top,” Wyatt explains. But you can’t determine if the solder dot has been applied correctly by examining an image of only the top of the solder ball. In this case, 3D measurements of the dot’s geometry, such as its height and volume, are necessary.

Precise application of the solder is important in this instance because either too much or too little solder could compromise the strength of the electrical connection.
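Once a 3D sensor provides a height map of the solder dot, the volumetric check reduces to summing height times pixel area over the dot and comparing the result against limits. The sketch below is hypothetical: the pixel size, the tolerance band, and the height values are illustrative, not General Motors’ process limits.

```python
def solder_volume_mm3(height_map_mm, pixel_area_mm2, min_height_mm=0.0):
    """Approximate volume by summing height above the substrate times pixel area.

    Pixels with no data (None) or at or below min_height_mm are ignored.
    """
    return sum(
        h * pixel_area_mm2
        for row in height_map_mm
        for h in row
        if h is not None and h > min_height_mm
    )


def inspect_dot(height_map_mm, pixel_area_mm2, vol_min_mm3, vol_max_mm3):
    """Classify a solder dot as 'too little', 'ok', or 'too much' by estimated volume."""
    v = solder_volume_mm3(height_map_mm, pixel_area_mm2)
    if v < vol_min_mm3:
        return "too little"
    if v > vol_max_mm3:
        return "too much"
    return "ok"


if __name__ == "__main__":
    # Hypothetical 3 x 3 height map (mm) on a 0.5 mm pixel grid (0.25 mm^2 per pixel).
    dot = [
        [0.0, 0.5, 0.0],
        [0.5, 1.0, 0.5],
        [0.0, 0.5, 0.0],
    ]
    print(solder_volume_mm3(dot, 0.25), inspect_dot(dot, 0.25, 0.5, 1.0))
```

Two dots with the same footprint seen from above can differ greatly in volume, which is exactly what the 2D top view cannot reveal.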

Conclusion

3D vision is a set of imaging approaches in the machine vision engineer’s toolbox. Stereoscopic, structured light, 3D laser triangulation, time-of-flight, and LiDAR are used in numerous applications in a variety of industries.

And sometimes more than one method is used in a single vision system. 3D imaging vendor Zivid (Oslo, Norway) explains on its website that “techniques are often combined to form a complete vision system, and sometimes there exists no clear boundary between techniques.”

As Dechow noted in a recent Vision Systems Design webinar, “This kind of sensor fusion is going to be a way that both commercial and industrial systems see more, gather more data, and provide more value, so it is certainly something that is coming.”

About the Author

Linda Wilson

Editor in Chief

Linda Wilson joined the team at Vision Systems Design in 2022. She has more than 25 years of experience in B2B publishing and has written for numerous publications, including Modern Healthcare, InformationWeek, Computerworld, Health Data Management, and many others. Before joining VSD, she was the senior editor at Medical Laboratory Observer, a sister publication to VSD.