Image sensor technologies continue to advance, offering increased resolution and higher speeds that open up new machine vision and imaging possibilities. These developments bring challenges, however, chief among them reliable data transmission: moving data over long distances while keeping latency and jitter low and predictable enough for some of today’s most demanding imaging applications.

Many manufacturers have invested in high-performance data transmission using Ethernet, the scalable technology that serves as the basis for GigE Vision — the machine vision industry’s leading camera interface technology. GigE Vision allows for the use of off-the-shelf cabling, switches, and network interface cards (NICs) and is supported by Windows, Linux, and all other mainstream computer operating systems.

An Optimized GigE Vision Implementation

Officially ratified in 2006 by the Automated Imaging Association (AIA), now part of the Association for Advancing Automation (A3), the GigE Vision standard uses UDP (User Datagram Protocol) to facilitate low-latency, reliable delivery of data over Ethernet. As data rates have risen, some manufacturers have struggled to achieve high performance with GigE Vision, particularly at data rates of 10 Gbps and above. This has led some to experiment with TCP (Transmission Control Protocol) or with RDMA (remote direct memory access), including RDMA over Converged Ethernet (“RoCE”), to mitigate these difficulties.

The GigE Vision standard uses the GigE Vision Streaming Protocol (GVSP) over UDP to transmit image data across Ethernet. Each frame is sent as a leader packet, multiple image (payload) packets, and a trailer packet. A high-speed GigE camera sends the packets and leaves the receiver (PC) to place the data in the appropriate destination buffer(s). Because UDP is connectionless, this approach avoids connection-management overhead and delivers the highest possible network performance. Because UDP does not guarantee delivery, the receiver must be properly designed and configured to avoid packet loss; when it is, this configuration delivers maximum performance with the lowest latency and jitter.
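As a rough illustration of the receiver’s job described above, the following Python sketch reassembles one frame from leader, payload, and trailer packets that may arrive out of order. The 8-byte header layout, the packet type codes, and the helper names `split_frame` and `reassemble` are assumptions made for this example; they are not the actual GVSP wire format, and a production receiver would be designed to avoid per-packet copies like the one shown here.

```python
import struct

# Hypothetical 8-byte header, for illustration only (NOT the real GVSP layout):
# packet type (1 byte), pad (1 byte), frame id (2 bytes), packet id (4 bytes).
HEADER = struct.Struct(">BxHI")
LEADER, PAYLOAD, TRAILER = 1, 2, 3  # assumed type codes


def split_frame(image, frame_id, chunk_size):
    """Camera side: wrap one image as leader + payload packets + trailer."""
    packets = [HEADER.pack(LEADER, frame_id, 0)]
    packet_id = 0
    for offset in range(0, len(image), chunk_size):
        packet_id += 1
        chunk = image[offset:offset + chunk_size]
        packets.append(HEADER.pack(PAYLOAD, frame_id, packet_id) + chunk)
    packets.append(HEADER.pack(TRAILER, frame_id, packet_id + 1))
    return packets


def reassemble(packets, frame_size, chunk_size):
    """Receiver side: copy each payload to its offset in one contiguous buffer.

    Returns (frame_bytes, missing_packet_ids). UDP gives no ordering or
    delivery guarantee, so the packet id (not arrival order) sets the offset.
    """
    buffer = bytearray(frame_size)
    expected = -(-frame_size // chunk_size)  # ceiling division
    seen = set()
    for packet in packets:
        ptype, _frame_id, packet_id = HEADER.unpack_from(packet)
        if ptype != PAYLOAD:
            continue  # leader and trailer carry no image data in this sketch
        data = packet[HEADER.size:]
        offset = (packet_id - 1) * chunk_size
        buffer[offset:offset + len(data)] = data
        seen.add(packet_id)
    missing = sorted(set(range(1, expected + 1)) - seen)
    return bytes(buffer), missing
```

Calling `reassemble(split_frame(image, 7, 300), len(image), 300)` returns the original image bytes and an empty list of missing packets; dropping a payload packet from the list makes its id appear in the second return value, which is the information a receiver would need in order to detect loss.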

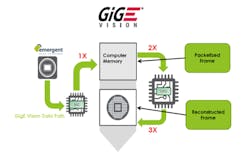

Figure 1: Data path in a conventional GigE Vision + GVSP implementation.

Not all implementations offer equal performance, however. Some manufacturers use a GigE Vision implementation that relies on header splitting in software to strip the headers from the GVSP packets and place the image data into a contiguous memory buffer. While such an implementation is still technically compliant, the performance impact is significant: CPU usage and memory consumption increase threefold. The inefficiency of a poor receiver design like this greatly affects system cost and performance, often limiting the performance of 1GigE and 10GigE devices and putting speeds of 25GigE or 100GigE out of reach. The technical challenges of implementing an optimized GigE Vision solution, including an optimized receiver, have led some manufacturers to propose alternative ways of using Ethernet for today’s more complex imaging applications.
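To give a sense of why per-packet software work becomes expensive as link speeds rise, the short Python sketch below estimates how many packets per second a receiver must handle at a given line rate. The packet sizes used (1,500 and 9,000 bytes) are assumptions chosen for illustration, and the calculation ignores Ethernet preamble, inter-packet gap, and protocol headers, so it is an upper bound rather than a measured figure.

```python
def packets_per_second(line_rate_gbps, packet_bytes):
    """Upper bound on packet rate, ignoring preamble and inter-packet gap."""
    return line_rate_gbps * 1e9 / (packet_bytes * 8)


if __name__ == "__main__":
    for rate in (1, 10, 25, 100):
        for size in (1500, 9000):  # assumed standard and jumbo packet sizes
            pps = packets_per_second(rate, size)
            print(f"{rate:>3} Gbps, {size} B packets: {pps:,.0f} packets/s")
```

Under these assumptions, a 100 Gbps link carrying 9,000-byte packets works out to roughly 1.4 million packets per second, each of which a software header-splitting receiver would have to parse and copy.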

UDP vs. TCP vs. RDMA and RoCE for GigE Vision

One proposed addition to the GigE Vision standard is TCP, which some say could remove the need to design for and manage header splitting. While a TCP approach might simplify design, it offers limited benefits over UDP when it comes to performance. TCP was not designed for image streaming, but it does support data resend and flow control, which provide reliable data transmission at a cost to overall system performance. Because TCP is a connected protocol, its overhead, including memory usage, is higher. In addition, TCP relies on data copies, which eliminates the benefits of a zero-copy design, and because it is a point-to-point technology, traditional GigE Vision benefits such as multicasting or point-to-multipoint transmission are lost. Any TCP-based implementation will remain proprietary unless and until it is ratified.
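To make the multicast point concrete, here is a minimal Python sketch of a UDP receiver joining an IPv4 multicast group, something a TCP connection cannot do because TCP is strictly point-to-point. The function names are hypothetical, and the sketch does not cover how a camera is configured to stream to a multicast address.

```python
import socket


def membership_request(group, interface="0.0.0.0"):
    """Build the ip_mreq payload: group address, then local interface address."""
    return socket.inet_aton(group) + socket.inet_aton(interface)


def open_multicast_receiver(group, port):
    """Open a UDP socket and join the given IPv4 multicast group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(
        socket.IPPROTO_IP,
        socket.IP_ADD_MEMBERSHIP,
        membership_request(group),
    )
    return sock
```

Several hosts could each call something like `open_multicast_receiver("239.192.0.1", 50000)` (a placeholder address and port, not values defined by GigE Vision) and receive the same stream from a single sender, with the network rather than the camera handling the replication.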

Figure 2: Data path in an optimized implementation of GigE Vision.

Another recently developed proposal is RDMA and RoCE. Similar to UDP, RDMA and RoCE offer zero-copy performance to the image buffer and do not require header splitting. But, similar to TCP, RDMA and RoCE are connected protocols that support resends and flow control, which adds overhead to the system and affects overall performance, latency, and jitter. In addition, RDMA and RoCE share TCP’s limitations as point-to-point technologies and, like the TCP-based approach, will remain proprietary unless and until ratified.

When done correctly, an optimized GigE Vision UDP approach is and will continue to be the best option for achieving low-latency, reliable delivery over Ethernet at 10, 25, and even 100 gigabits per second. Emergent Vision Technologies has a long history of doing this, with 10+ years shipping 10GigE cameras, 5+ years shipping 25GigE cameras, and 2+ years shipping 100GigE cameras, with no data loss.

Take a deep dive into our guide on the use of UDP, TCP, and RDMA for GigE Vision cameras to understand the true benefits of an optimized GigE Vision approach. If you have questions or comments, or would like to speak with one of our technical experts, please contact us today.
