High-speed cameras enhance automation and measurement applications

Whether they be stand-alone systems or tethered to PC-based systems, high-speed cameras are tackling industrial and scientific measurement and troubleshooting applications.

Today’s CMOS-based cameras have redefined what counts as high speed. In the past, tube-based cameras operating at 25 fps (PAL) or 30 fps (NTSC) were commonly used in the broadcast industry. When tube-based sensors were superseded, first by CCD and then by CMOS imagers, camera designers used the multiple-tap outputs of CMOS imagers to increase the frame rates of their cameras. The result is a broad range of camera products, most of them built around CMOS imagers, that attain speeds of between 250 fps and 200,000 fps.

Within this broad speed range, the choice of camera is highly application-specific. Developers of low-cost factory automation monitoring systems, for example, may only require speeds of between 250 and 1,000 fps, depending on the speed of the production line. Such systems often need to monitor both the quality and parameters of the product and the integrity of the mechanisms that convey the product along the line. Both tasks can be handled with relatively low-cost imagers tethered to host computers over different types of interfaces.
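
How line speed translates into a frame-rate requirement can be sketched in a few lines of Python. This is a rough illustration only: the function name, the 0.1 m field of view, and the requirement of five views per item are all assumptions chosen for the example, not figures from any vendor.

```python
def required_fps(line_speed_m_s: float, field_of_view_m: float,
                 views_per_item: int = 1) -> float:
    """Frame rate at which an item is imaged `views_per_item` times while it
    crosses the camera's field of view along the direction of travel."""
    if line_speed_m_s <= 0 or field_of_view_m <= 0 or views_per_item < 1:
        raise ValueError("speed and field of view must be positive, views >= 1")
    # Time an item spends inside the field of view.
    transit_time_s = field_of_view_m / line_speed_m_s
    return views_per_item / transit_time_s


# Hypothetical line: 0.1 m field of view, five views of each item.
for speed in (5.0, 20.0):
    print(f"{speed:4.1f} m/s -> {required_fps(speed, 0.1, 5):.0f} fps")
```

With these assumed figures, line speeds of 5 m/s and 20 m/s work out to 250 fps and 1,000 fps respectively.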

Since applications for such high-speed cameras range from process monitoring to product analysis and automobile and ballistics testing, a number of factors must be considered before specifying which type of camera is most appropriate. As with any machine vision application, the resolution of the imager used in such cameras will define how much detail the captured image will reveal.

Many commercially available high-speed cameras use CMOS image sensors with pixel arrays ranging from VGA (640 x 480) to 25 MPixels. These sensors come in different optical formats, which demand different lens configurations, and have pixel sizes of between approximately 3 and 20 µm. While the amount of detail that can be resolved depends on the pixel size of the imager and the quality of the lens used, systems integrators must also take into account the amount of light required to capture images at high speed.

Here, cameras that employ imagers with larger pixels can capture more photons during any given exposure time than cameras using equivalently sized sensors with smaller pixels. Smaller pixels collect less light and may not be useful in very high frame rate applications or where low-light-level images need to be captured. For this reason, the correct amount and type of lighting play an important role in specifying any high-speed machine vision system.
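
The trade-off can be put into rough numbers. The sketch below assumes that the light collected by a pixel scales with its area multiplied by the exposure time, and that illumination, fill factor, and quantum efficiency are equal between the sensors being compared. Real sensors differ on all three, so treat the result as a first-order estimate only. The function names are illustrative.

```python
def relative_signal(pixel_pitch_um: float, exposure_s: float) -> float:
    """Quantity proportional to the photons collected per pixel:
    pixel area (pitch squared) times exposure time."""
    return pixel_pitch_um ** 2 * exposure_s


def max_exposure_s(fps: float) -> float:
    """Upper bound on exposure time: one full frame period."""
    return 1.0 / fps


# Compare the two ends of the 3-20 um pixel range at the same exposure.
exposure = max_exposure_s(10_000)
ratio = relative_signal(20.0, exposure) / relative_signal(3.0, exposure)
print(f"20 um vs 3 um pixel at the same exposure: {ratio:.1f}x the light")
```

Because the exposure time can never exceed the frame period, raising the frame rate tenfold also cuts the available light per frame by at least a factor of ten under the same assumptions.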

Figure 1: Quad CXP-12 frame grabbers are now available from (top) Active Silicon, (middle) Euresys and (bottom) Matrox. Euresys specifies that the camera-to-computer distance with this standard is 30 m at CXP-12 speed (12.5 Gbps), 72 m at CXP-6 speed (6.25 Gbps) and 100 m at CXP-3 speed (3 Gbps).

Untethered or tethered?

Whether a lower-cost tethered camera can be used for such applications is equally important. In higher-speed applications such as automobile testing, it may be necessary to capture images at rates of millions of frames per second. In these cases, cameras may not be able to transfer captured images to host computers in real time, even over the fastest commercially available interfaces such as 100GigE or CoaXPress. Instead, image sequences must be stored in the camera’s high-speed on-board memory and, after the event has been captured, transferred from the camera to a host PC for image analysis.
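
A quick calculation shows why streaming is impractical at such rates. The sketch below assumes a hypothetical 1024 x 1024, 8-bit camera running at one million frames per second and compares its raw output with the 100 Gbits/s line rate of 100GigE. The camera parameters are illustrative and do not describe a specific product.

```python
def raw_data_rate_gbps(width: int, height: int, fps: float,
                       bits_per_pixel: int = 8) -> float:
    """Uncompressed sensor output in gigabits per second."""
    return width * height * fps * bits_per_pixel / 1e9


INTERFACE_GBPS = 100.0  # 100GigE line rate, before protocol overhead

# Hypothetical camera: 1024 x 1024 pixels, 8 bits, 1,000,000 fps.
rate = raw_data_rate_gbps(1024, 1024, 1_000_000)
print(f"raw output: {rate:,.0f} Gbps, interface: {INTERFACE_GBPS:.0f} Gbps")
print("fits in real time" if rate <= INTERFACE_GBPS else "needs on-board memory")
```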

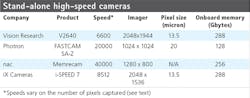

To attain such high speeds, camera vendors such as iX Cameras (Woburn, MA, USA; www.ix-cameras.com), nac Image Technology (Simi Valley, CA, USA; www.nacinc.com), Photron (San Diego, CA, USA; www.photron.com) and Vision Research (Wayne, NJ, USA; www.phantomhighspeed.com) offer stand-alone cameras that incorporate high-speed CMOS imagers, on-board memory, and solid-state drives to store image data. Once captured, this data can then be transferred to a host computer over a standard interface such as 10Gbit Ethernet, HDMI, or SDI (Table 1).

Table 1: Companies such as iX Cameras, nac Image Technology, Photron and Vision Research offer stand-alone cameras that incorporate high-speed CMOS imagers, on-board memory and solid-state drives to store image data.

To support these products, these companies offer software packages that handle the calibration, tracking, and post-processing of captured images. Here, for example, cameras from iX Cameras can be used with ProAnalyst from Xcitex (Woburn, MA, USA; www.xcitex.com) to analyze, graph, and output speed, acceleration, and angular motion of high-speed captured events.

To support its range of cameras, nac Image Technology offers its Cortex 3D motion capture software while Photron’s FASTCAM Viewer software (PFV) can be used for image-enhancement and simple motion analysis. For its part, Vision Research’s Phantom Camera Control (PCC) software allows basic measurement functions for motion analysis to be performed.

In all three products, up to 288 GBytes of on-board memory can be used to extend the image recording period, while on-board solid-state drives with terabyte capacities can be used to store the data until it is transferred over standard computer interfaces to a host computer for further analysis. As in many other high-speed cameras, the use of CMOS imagers allows region of interest (ROI) windowing, which increases both the speed of image capture and the duration of the captured image sequence. As an example, while Photron’s FASTCAM SA-Z camera captures 1024 x 1024 images with 20 µm pixels at 20,000 fps, windowing the images to 512 x 256 pixels allows frame rates of 120,000 fps to be attained.
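
The effect of windowing on throughput and recording time can be estimated as follows. The resolutions and frame rates are those quoted above for the FASTCAM SA-Z. The 12-bit pixel depth, the reading of 288 GBytes as 288 x 10^9 bytes, and the function names are assumptions made for this sketch.

```python
def pixel_rate(width: int, height: int, fps: int) -> int:
    """Pixels read out of the sensor per second."""
    return width * height * fps


def record_seconds(memory_bytes: float, width: int, height: int, fps: int,
                   bits_per_pixel: int) -> float:
    """How long on-board memory lasts when storing uncompressed frames."""
    bytes_per_second = pixel_rate(width, height, fps) * bits_per_pixel / 8
    return memory_bytes / bytes_per_second


MEMORY_BYTES = 288e9   # assumption: 288 GBytes taken as 288 x 10^9 bytes
BITS_PER_PIXEL = 12    # assumption: bit depth is not stated in the text

for width, height, fps in ((1024, 1024, 20_000), (512, 256, 120_000)):
    seconds = record_seconds(MEMORY_BYTES, width, height, fps, BITS_PER_PIXEL)
    print(f"{width} x {height} @ {fps:,} fps: "
          f"{pixel_rate(width, height, fps) / 1e9:.1f} Gpixel/s, "
          f"{seconds:.1f} s of recording")
```

Under these assumptions the full-frame mode fills the memory in roughly nine seconds and the windowed mode in roughly twelve, because the windowed mode reads fewer pixels per second even though it delivers six times as many frames.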

Highly deterministic

While such cameras are useful in very high-speed applications, speeds of between 250 fps and 2,000 fps may be acceptable in many process monitoring and machine vision applications. In such cases, developers can choose from several cameras with a range of resolutions and different high-speed camera-to-computer interfaces that can meet these demands. Two of the most popular interfaces are currently CoaXPress (CXP) and GigE.

There are, however, significant differences between them. CXP is a deterministic, low-latency, low-jitter interface that ranges in speed from 6.25 Gbps per channel (CXP-6) to 12.5 Gbps per channel (CXP-12), but it must be supported with a CXP frame grabber. While this may be a more expensive proposition than using a GigE interface, CXP can support multiple channels from high-speed cameras, bringing the maximum speed using eight CXP-12 channels to 100 Gbps.

For its latest Coaxlink Quad CXP-12 frame grabber, for example, Euresys (Angleur, Belgium; www.euresys.com) specifies that the camera-to-computer distance is 30 m at CXP-12 speed (12.5 Gbps), 72 m at CXP-6 speed (6.25 Gbps) and 100 m at CXP-3 speed (3 Gbps). With four cables and four CXP-12 connections, the maximum data transfer rate is 50 Gbps, or 5 GByte/s.
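
The relationship between the 50 Gbps and 5 GByte/s figures can be reproduced with a short calculation. CoaXPress uses 8b/10b line coding, so only eight of every ten bits on the wire carry payload. The sketch below ignores packet and protocol overhead, and its function names are illustrative.

```python
def cxp_line_rate_gbps(links: int, gbps_per_link: float) -> float:
    """Aggregate line rate across all CXP links."""
    return links * gbps_per_link


def cxp_payload_gbytes_per_s(links: int, gbps_per_link: float) -> float:
    """Approximate payload rate: 8b/10b coding, then 8 bits per byte.
    Packet and protocol overhead are ignored."""
    return cxp_line_rate_gbps(links, gbps_per_link) * 8 / 10 / 8


for links in (4, 8):
    print(f"{links} x CXP-12: {cxp_line_rate_gbps(links, 12.5):.0f} Gbps line rate, "
          f"about {cxp_payload_gbytes_per_s(links, 12.5):.0f} GByte/s payload")
```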

Other companies that offer such frame grabbers include Active Silicon (Iver, Bucks, UK; www.activesilicon.com), which debuted two boards at VISION 2018 in Stuttgart. The first is a four-channel board with a PCIe x4 interface and the second is a four-channel board with a PCIe x8 interface that supports four CXP-12 links. Another manufacturer offering a similar CoaXPress interface board is Matrox Imaging (Dorval, QC, Canada; www.matrox.com) with its four-link Rapixo CXP frame grabber that was also announced at the VISION show (Figure 1).

According to Chris Beynon, Chief Technical Officer, Active Silicon, the CXP 2.0 standard has yet to be ratified and will go to ballot in April of this year. However, even before a formal standard is ratified, several companies have announced high-speed cameras for the CXP 2.0 standard. Optronis (Kehl, Germany; www.optronis.com), for example, has already announced its Cyclone-2-2000, a CMOS-based camera. With a LUX19HS 1920 x 1080 imager with 10 µm pixels from Alexima (Sunny Isles Beach, FL, USA; www.alexima.com), the camera can operate at speeds of 2,158 fps using four CXP-12 channels.
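
As a plausibility check, the frame-rate ceiling that four CXP-12 channels impose on a 1920 x 1080 sensor can be estimated. The sketch assumes 8-bit pixels and a payload budget of 5 GByte/s for the four links, with protocol overhead ignored. Neither the bit depth nor the function name comes from the camera's documentation.

```python
def max_fps(payload_bytes_per_s: float, width: int, height: int,
            bytes_per_pixel: int = 1) -> float:
    """Highest frame rate whose uncompressed output fits the link budget."""
    return payload_bytes_per_s / (width * height * bytes_per_pixel)


FOUR_CXP12_BYTES_PER_S = 5e9  # four CXP-12 links, protocol overhead ignored
QUOTED_FPS = 2158             # frame rate quoted for the Cyclone-2-2000

limit = max_fps(FOUR_CXP12_BYTES_PER_S, 1920, 1080)
print(f"link-limited ceiling: {limit:.0f} fps, quoted rate: {QUOTED_FPS} fps")
```

Under these assumptions the ceiling is a little over 2,400 fps, so the quoted 2,158 fps sits within the capacity of the four links.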

Networking cameras

Unlike CXP, the GigE interface, in variants from GigE to 100GigE (100 Gbits/s), requires no frame grabber support. Here again, numerous machine vision camera vendors offer cameras that can run at relatively high frame rates.

These include the Mako series from Allied Vision (Stadtroda, Germany; www.alliedvision.com), the uEye Series from Imaging Development Systems (IDS; Obersulm, Germany; https://en.ids-imaging.com) and the Flea series from FLIR Integrated Imaging Solutions (Richmond, BC, Canada; www.flir.com/mv). Depending on the image sensor configurations used in these cameras, frame rates can be over 100 fps, making them useful in high-speed machine vision applications. A complete listing of the industrial camera vendors that now support the GigE standard can be found in the 2018 Vision Systems Design Camera directory at: http://bit.ly/VSD-CD18.

While a frame grabber may not be required to configure GigE cameras, a network card is still needed. A standard network interface card (NIC) can support a range of GigE-based cameras, but it may not reduce the high CPU usage and latency involved in using the GigE standard.

For this reason, companies such as Mellanox (Yokne’am Illit, Israel; www.mellanox.com) have developed a series of NICs that use what the company refers to as Socket Direct. These employ remote direct memory access (RDMA), a DMA protocol that is used to transfer data from the camera to the memory of the host computer without involving operating system overhead.

Emergent Vision Technologies (Maple Ridge, BC, Canada; www.emergentvisiontec.com) has used these interfaces to support its latest 25GigE cameras that were also announced at last year’s VISION show. The company’s Bolt series uses this 25GigE interface in two products, the HB-50000, which uses a 7920 x 6004 pixel global shutter CMOS image sensor and runs at 30 fps, and the HB-12000, which is equipped with a 4096 x 3000 global shutter CMOS image sensor running at 188 fps (Figure 2).

Figure 2: Emergent Vision Technologies uses computer interfaces from Mellanox to support its 25GigE Bolt series of cameras, which includes (a) the HB-50000, which uses a 7920 x 6004 pixel image sensor and runs at 30 fps, and (b) the HB-12000, which is equipped with a 4096 x 3000 CMOS imager running at 188 fps.
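
The raw data rates implied by these two cameras can be compared with the 25 Gbits/s line rate of the interface. The sketch assumes 8-bit pixels and ignores Ethernet and protocol overhead. The bit depth is an assumption, since it is not stated above.

```python
LINK_GBPS = 25.0  # 25GigE line rate, before protocol overhead

# (model, width, height, fps) as quoted for the Bolt series
CAMERAS = (
    ("HB-50000", 7920, 6004, 30),
    ("HB-12000", 4096, 3000, 188),
)


def raw_gbps(width: int, height: int, fps: int, bits_per_pixel: int = 8) -> float:
    """Uncompressed sensor output in gigabits per second."""
    return width * height * fps * bits_per_pixel / 1e9


for model, width, height, fps in CAMERAS:
    rate = raw_gbps(width, height, fps)
    print(f"{model}: {rate:.1f} Gbps, {rate / LINK_GBPS:.0%} of the link")
```

At 8 bits per pixel the two cameras generate roughly 11.4 Gbps and 18.5 Gbps, both beyond what a 10GigE link could carry but within the 25GigE line rate.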

Implementing systems

Systems integrators wishing to deploy such high-speed camera systems in their applications can choose to develop PC-based image recorders based around standard frame grabbers, high-speed memory, and solid-state drives with which to store image data. Off-the-shelf PC-based software such as StreamPix or TroublePix from NorPix (Montreal, QC, Canada; www.norpix.com) or ProAnalyst from Xcitex, for example, can then be integrated with such PCs to provide an off-the-shelf solution.

Alternatively, developers of high-speed camera systems can purchase configured systems from both software and camera hardware vendors. NorPix, for example, offers a small form factor recorder that can be used to capture images from GigE Vision-based 640 x 480 x 8-bit cameras at 200 fps. The recorder is capable of 170-minute recording times; images are recorded to RAM or solid-state drives and can then be analyzed with the company’s StreamPix software.
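
The storage that such a recording implies is straightforward to estimate. The sketch below assumes the images are stored uncompressed, which the description above does not confirm, and uses an illustrative function name.

```python
def recording_bytes(width: int, height: int, bytes_per_pixel: int,
                    fps: int, minutes: int) -> int:
    """Bytes needed to store an uncompressed image sequence."""
    return width * height * bytes_per_pixel * fps * minutes * 60


# 640 x 480 x 8-bit images at 200 fps for 170 minutes, as quoted above.
total = recording_bytes(640, 480, 1, 200, 170)
print(f"{total / 1e9:.1f} GBytes for the full recording")
print(f"{640 * 480 * 200 / 1e6:.2f} MBytes/s sustained write rate")
```

Without compression, the full 170-minute sequence comes to about 627 GBytes at a sustained rate of just over 61 MBytes/s.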

Similarly, Imperx (Boca Raton, FL, USA; www.imperx.com) has developed an Ethernet/IP Process Video Recorder (EIPVR) event recording system that can be used with the company’s B0620, 640 x 480 GigE cameras with Power over Ethernet (PoE) to record up to 60 seconds of video at 250 fps or longer at slower frame rates. In operation, the recording system automatically saves the images, and the recorded events can then be viewed at a user-configurable playback speed using the company’s recording and playback software (Figure 3).

Figure 3: Imperx’s event recording system can be used with the company’s B0620, 640 x 480 cameras with PoE to record up to 60 seconds of video at 250 fps or longer at slower frame rates.
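
The trade-off between frame rate and recording time can be illustrated with a simple model. It assumes that the recorder holds a fixed number of frames, derived from the quoted 60 seconds at 250 fps, so that recording time scales inversely with frame rate. The fixed-buffer behavior is an assumption made for this sketch and is not a documented property of the Imperx system.

```python
FRAME_BUDGET = 60 * 250  # frames, from the quoted 60 s at 250 fps


def recording_seconds(fps: float, frame_budget: int = FRAME_BUDGET) -> float:
    """Recording time if the buffer holds a fixed number of frames."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frame_budget / fps


for fps in (250, 125, 50):
    print(f"{fps:3d} fps -> {recording_seconds(fps):.0f} s")
```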

As can be seen, the choice of whether to use a stand-alone high-speed camera or a lower-cost tethered camera/computer configuration is highly application dependent. In many very high-speed applications, such as crash test analysis, captured image data cannot be analyzed in real time. However, for slower image analysis and process monitoring functions, tethered camera-to-computer interfaces may prove more effective.

About the Author

Andy Wilson

Founding Editor

Founding editor of Vision Systems Design. Industry authority and author of thousands of technical articles on image processing, machine vision, and computer science.

B.Sc., Warwick University
