Advancing Robotics with 3D Vision, AI, and Edge Computing for Smarter Automation

Key Highlights

- Robots are increasingly using AI, 3D vision, and edge computing to navigate complex environments without human intervention.

- Combining classical stereo algorithms with deep learning enhances depth accuracy, especially in challenging scenes, enabling smarter perception.

- Physical AI integrates perception, learning, and motion, allowing robots to transform sensory data into purposeful actions.

Imagine a robot navigating a busy warehouse, weaving between shelves, identifying packages, and adjusting its path without human intervention. Or an outdoor robot threading its way through rugged terrain, dodging obstacles, recalculating routes, and making split-second decisions without a whisper of network connectivity. These are not scenes from science fiction; they are real-world systems powered by the fusion of artificial intelligence (AI), 3D vision, and edge computing.

For decades, motion control in automation meant precision and speed. But as environments grow more unpredictable, whether a warehouse with shifting inventory or an outdoor site battered by weather, machines need more than accuracy. They need intelligence, the ability to adapt and respond to real-time challenges.

Looking Ahead: Intelligence in Motion

The future of robotics is not defined by faster machines, but by smarter ones. As AI, 3D vision, and edge computing continue to converge, robots are evolving from automated tools into adaptive systems capable of understanding and responding to the world around them.

Stereo vision will remain a cornerstone of this evolution, not because it always produces the densest depth maps, but because it offers flexible, information-rich perception that can scale from real-time onboard processing to advanced host-based algorithms. Combined with physical AI, it enables robots to move beyond automation toward true autonomy.

Ultimately, the systems that succeed will not be those that maximize every metric, but those that balance intelligence with robustness, efficiency, and deployability. Intelligence in motion is defined by adaptability, and that future is already taking shape.

Why 3D Vision Matters More Than Ever

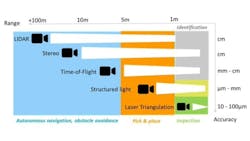

3D vision provides machines with spatial understanding, the ability to perceive the shape, size, and position of objects in their environment. This capability is foundational for tasks such as navigation, manipulation, inspection, and collision avoidance. However, not all 3D sensing technologies are created equal. Each comes with distinct trade-offs in range, accuracy, robustness, cost, and system complexity.

Stereo Vision: Uses two cameras, similar to human vision, to infer depth. It is passive, scalable, and performs well across a wide range of lighting conditions.

Time-of-Flight (ToF): Measures the time emitted light takes to travel to a surface and return to the sensor. It is effective at short range but can struggle in bright or outdoor environments.

Structured Light: Projects known patterns onto surfaces to calculate depth. While highly accurate, it is sensitive to motion and ambient light.

LiDAR: Emits laser pulses to map surroundings over long distances. It excels in large-scale environments but is often costly and produces relatively sparse data.

Laser Triangulation: Delivers exceptional accuracy for inspection and metrology but is best suited to static scenes and slower scanning processes.
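As a small illustration of the timing principle behind the time-of-flight entry above, distance follows from the round-trip travel time of light: distance = c × t / 2. The sketch below is a minimal example, not a sensor driver; the function name and the 20-nanosecond sample value are illustrative assumptions.

```python
# Minimal sketch of time-of-flight ranging (illustrative names and values).
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, metres per second


def tof_distance_m(round_trip_s):
    """Convert a measured round-trip time (seconds) to a one-way distance (metres)."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light travels to the surface and back, so halve the total path.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# A 20 ns round trip corresponds to roughly 3 m.
example_distance_m = tof_distance_m(20e-9)
```

The very short times involved (about 6.7 nanoseconds per metre of range) hint at why timing precision matters so much for this class of sensor.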

Importantly, many robotic tasks do not require dense, photorealistic depth maps. For navigation, obstacle avoidance, safety zoning, or coarse inspection, reliable and timely depth information often matters more than maximum spatial density. Choosing the right 3D technology and the right processing approach is therefore a system-level decision, driven by application needs rather than theoretical capability.

Stereo Vision: Seeing the World Like We Do

Human depth perception relies on two eyes capturing slightly different viewpoints, which the brain fuses into a coherent sense of distance and spatial relationships. Stereo vision applies this same principle to machines. By synchronizing two cameras and analyzing disparities between their images, robots gain a rich understanding of three-dimensional space.
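For a rectified stereo pair, the relationship between disparity and distance is the standard triangulation formula Z = f × B / d, where f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity in pixels. The sketch below shows that formula along with the common first-order estimate of how a disparity error grows into a depth error. The function names and the sample camera parameters (800-pixel focal length, 10 cm baseline) are assumptions chosen for illustration.

```python
import math


def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Non-positive disparity is treated here as "no valid match / infinitely far".
        return math.inf
    return focal_length_px * baseline_m / disparity_px


def depth_error_m(depth_m, focal_length_px, baseline_m, disparity_error_px=1.0):
    """First-order depth uncertainty: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (focal_length_px * baseline_m)


# Assumed example camera: f = 800 px, B = 0.10 m.
z = depth_from_disparity(40.0, 800.0, 0.10)   # 2.0 m
dz = depth_error_m(z, 800.0, 0.10)            # about 0.05 m per pixel of disparity error
```

Because the error term grows with the square of the distance, a wider baseline or longer focal length is the usual lever for extending useful range.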

This approach offers a unique combination of scalability, resolution, and environmental resilience. Because stereo vision is passive, it does not rely on projecting light into the scene, making it well suited for both indoor and outdoor applications. For many robotic systems, it provides a compelling balance between performance and practicality.

From Classical Methods to Learning-Based Depth

Traditional stereo matching algorithms have long played an important role in robotic perception. They are deterministic, efficient, and well suited for on-device processing, making them effective for many real-time applications where low latency and predictable behavior are critical. In outdoor robotics in particular, such as navigation, detection, and inspection, these methods can deliver sufficiently accurate depth while operating reliably at the edge.
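To make the deterministic nature of classical matching concrete, here is a minimal sketch of one of the simplest approaches: sum-of-absolute-differences (SAD) block matching along a single rectified scanline. It is a teaching example in pure Python, not the algorithm any particular camera uses; production matchers add cost aggregation, sub-pixel refinement, and consistency checks. The function name, window size, and search range are assumptions.

```python
def match_scanline(left_row, right_row, window=3, max_disparity=8):
    """Return one integer disparity per pixel of left_row using SAD block matching."""
    half = window // 2
    width = len(left_row)
    disparities = [0] * width  # border pixels without a full window stay at 0
    for x in range(half, width - half):
        best_cost, best_d = None, 0
        # In a rectified pair, a point at column x in the left image
        # appears at column x - d in the right image.
        for d in range(max_disparity + 1):
            if x - d - half < 0:
                break  # the comparison window would fall off the right image
            cost = sum(
                abs(left_row[x + k] - right_row[x - d + k])
                for k in range(-half, half + 1)
            )
            if best_cost is None or cost < best_cost:
                best_cost, best_d = cost, d
        disparities[x] = best_d
    return disparities


# Synthetic rows: the left row is the right row shifted two pixels to the right.
right = [0, 0, 10, 50, 90, 30, 70, 20, 0, 0, 0, 0]
left = [0, 0] + right[:-2]
row_disparities = match_scanline(left, right)
```

The same input always yields the same output in a fixed number of operations, which is the property that makes this family of methods attractive for low-latency, on-device processing. The flat regions of the synthetic rows also show the weakness: where there is no texture, several disparities fit equally well.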

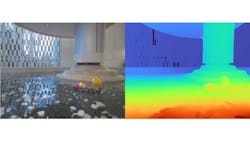

More recently, stereo matching based on convolutional neural networks (CNNs) and vision transformers has expanded what stereo vision can achieve. Learning-based models generate denser and more detailed depth maps, handle low-texture scenes, adapt to lighting variations, and infer depth in challenging regions where classical methods struggle. When paired with GPUs or other accelerators, they unlock higher-fidelity 3D perception for applications that demand it.

These approaches are not mutually exclusive. In practice, robust robotic systems often benefit from a dual-path strategy: using efficient on-device stereo processing for general perception and real-time operation, while enabling on-host or accelerated processing when applications require higher depth density or specialized algorithms. The ability to select the appropriate processing path allows system designers to balance accuracy, latency, power consumption, and computational cost.
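One way to picture the dual-path strategy is as a small selection rule evaluated per task. The sketch below is a hypothetical illustration only: the `DepthRequest` fields, the path names, and the 80 ms host latency figure are all assumptions, not values from any specific product.

```python
from dataclasses import dataclass


@dataclass
class DepthRequest:
    """Hypothetical description of what a task needs from the depth pipeline."""
    task: str
    max_latency_ms: float
    needs_dense_depth: bool


def choose_path(request, host_latency_ms=80.0, accelerator_available=True):
    """Return "on_device" or "on_host" for a request (assumed policy, for illustration)."""
    # General perception is served by the efficient on-device matcher.
    if not request.needs_dense_depth:
        return "on_device"
    # Dense depth goes to the host only if an accelerator exists and the
    # assumed host latency still fits the task's latency budget.
    if accelerator_available and host_latency_ms <= request.max_latency_ms:
        return "on_host"
    # Otherwise fall back to the predictable on-device path.
    return "on_device"


navigation = DepthRequest("obstacle_avoidance", max_latency_ms=30.0, needs_dense_depth=False)
picking = DepthRequest("bin_picking", max_latency_ms=200.0, needs_dense_depth=True)
paths = [choose_path(navigation), choose_path(picking)]
```

In a real system the rule would likely also weigh power draw and compute cost, the other two factors named above, but the structure of the decision stays the same.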

As always, algorithms alone do not define system performance. Mechanical stability, thermal robustness, precise calibration, and long-term reliability remain essential. Advanced models amplify the strengths of stereo vision, but they cannot compensate for weak hardware fundamentals.

Stereo Vision and Physical AI: Turning Perception into Action

High-quality depth perception is a prerequisite for intelligence, but perception alone is not enough. Geometry gives structure. Semantics gives meaning. Together they create spatial awareness, the foundation for real-world interaction. Physical AI, the integration of perception, learning, and motion within embodied systems, transforms sensory data into purposeful action.

Stereo vision provides the spatial foundation for this transformation. Depending on the task, robots may rely on coarse depth cues for navigation and safety, or richer depth representations for manipulation and measurement. Physical AI systems learn to use the level of detail they need, no more and no less, to plan, predict motion, and respond dynamically.
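As a rough illustration of how coarse depth cues can drive a safety decision, the sketch below maps the nearest valid depth reading in a region of interest to one of three actions. The function name, the thresholds (0.5 m and 1.5 m), and the fail-safe choice for missing data are assumptions made for the example, not recommended safety parameters.

```python
def safety_action(depths_m, stop_below_m=0.5, slow_below_m=1.5):
    """Map depth readings (metres, None for invalid pixels) to "stop", "slow", or "proceed"."""
    valid = [z for z in depths_m if z is not None and z > 0]
    if not valid:
        # Assumed fail-safe policy: with no usable depth, do not move.
        return "stop"
    nearest = min(valid)
    if nearest < stop_below_m:
        return "stop"
    if nearest < slow_below_m:
        return "slow"
    return "proceed"


# Only the nearest obstacle matters here, so a sparse or coarse depth map is enough.
action = safety_action([2.0, 1.2, None, 3.4])  # "slow"
```

Nothing in this decision needs a dense depth map, which is the point: the required level of detail follows from the action being chosen.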

In a modern warehouse, stereo cameras continuously deliver depth information while AI models classify objects, estimate pose, and guide robotic arms—even as layouts change and lighting conditions fluctuate. This tight coupling between perception and action enables machines to operate reliably in environments that were once considered too complex or unpredictable for automation.

About the Author

Freya Ma

Freya Ma is a seasoned professional with an MBA and a background in Automation Engineering, specializing in machine vision and industrial automation. Her expertise in depth sensing and advanced sensor technologies, particularly 3D vision (stereo, time-of-flight, and LiDAR), enables her to design and deploy cutting-edge vision systems for robotics and automated solutions across diverse industrial applications.