Vision system used to study development of memories for artificial intelligence

A team of computer scientists from the University of Maryland has employed a new form of computer memory that could lead to advances in autonomous robot and self-driving vehicle technology, and possibly in the development of artificial intelligence as a whole.

Humans naturally learn how to move about in order to gain information about the world. Imagine that a person sees a square in front of them. It may be a flat square, or it may be one face of a cube. The person cannot tell the difference unless they view the square from the side. To gain more information, the person has to change their position, and they will remember to repeat this process in similar situations in the future. This process is called “active perception.”

A human being's sensory and locomotive systems are unified, so our memories of an event combine what we sensed with how we moved. In a machine such as a robot or drone, on the other hand, cameras and locomotion are separate systems with separate data streams. If those streams can be combined, a robot or drone can create its own "memories" and learn to mimic active perception more efficiently.

In their study, titled "Learning Sensorimotor Control with Neuromorphic Sensors: Toward Hyperdimensional Active Perception," the researchers from the University of Maryland describe a method for combining perception and action (what a machine sees and what it does) into a single data record.

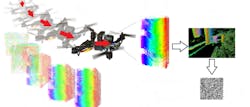

The researchers used a DAVIS 240b DVS (dynamic vision sensor) from iniLabs and a Qualcomm Flight Pro board, mounted on a quadcopter drone. The DVS responds only to changes in a scene, much as neurons in the human eye fire only when they sense a change in light.
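To illustrate what "responds only to changes" means, here is a minimal Python sketch that emulates DVS-style output from two intensity frames. It assumes a simplified model: each pixel emits an ON (+1) or OFF (-1) event when its log intensity moves by at least a contrast threshold (0.2 here, a hypothetical value) away from the level recorded at its last event. A real DVS such as the DAVIS 240b reports events asynchronously per pixel, each with its own timestamp, rather than comparing whole frames; the function name `dvs_events` is illustrative only.

```python
import math
from typing import List, Tuple

Frame = List[List[float]]
Event = Tuple[int, int, int]  # (x, y, polarity): +1 = brighter, -1 = darker


def dvs_events(reference: Frame, current: Frame, threshold: float = 0.2) -> List[Event]:
    """Hypothetical frame-based DVS emulation (simplified model, not the sensor's firmware).

    Emits an event for each pixel whose log intensity changed by at least
    `threshold` relative to `reference`. Unchanged pixels produce nothing.
    Pixels that fire have their reference level reset in place, mimicking how
    a DVS pixel measures the next change from the level at its last event.
    """
    events: List[Event] = []
    for y, (ref_row, cur_row) in enumerate(zip(reference, current)):
        for x, (ref, cur) in enumerate(zip(ref_row, cur_row)):
            # Small offset avoids log(0) for completely dark pixels.
            delta = math.log(cur + 1e-6) - math.log(ref + 1e-6)
            if abs(delta) >= threshold:
                events.append((x, y, 1 if delta > 0 else -1))
                ref_row[x] = cur  # reset this pixel's reference level
    return events


reference = [[100.0, 100.0], [100.0, 100.0]]
# A static scene produces no output at all.
print(dvs_events(reference, [[100.0, 100.0], [100.0, 100.0]]))  # []
# Only the two pixels that changed report events.
print(dvs_events(reference, [[100.0, 200.0], [50.0, 100.0]]))   # [(1, 0, 1), (0, 1, -1)]
```

Because unchanged pixels stay silent, the data volume scales with scene motion rather than with frame rate, which is why such sensors suit fast-moving platforms like drones.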

Using a form of data representation called a hyperdimensional binary vector (HBV), the researchers stored information from the drone's camera and information on the drone's velocity in the same data record. A convolutional neural network (CNN) was then tasked with recalling the action the drone needed to take, using only a visual record from the DVS as reference. By referencing the "memories" generated from the combined camera and velocity data, the CNN accomplished the task with 100% accuracy in all experiments.
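The article does not spell out how an HBV holds two data streams at once. The following is a minimal Python sketch of the general hyperdimensional-computing idea, assuming the operations commonly used in that literature: random binary hypervectors, binding by bitwise XOR, bundling by bitwise majority vote, and similarity by Hamming distance. The dimensionality `D`, the scene and velocity names, and the nearest-neighbor `recall` lookup (a stand-in for the CNN used in the study) are hypothetical illustrations, not the researchers' actual encoding.

```python
import random
from typing import List

D = 10_000  # assumed dimensionality; hypervectors typically have thousands of bits
rng = random.Random(42)


def random_hv() -> int:
    """A random D-bit binary hypervector, stored as a Python int."""
    return rng.getrandbits(D)


def bind(a: int, b: int) -> int:
    """Bind two hypervectors with XOR; XOR is its own inverse."""
    return a ^ b


def bundle(vectors: List[int]) -> int:
    """Superimpose hypervectors by per-bit majority vote (odd count avoids ties)."""
    out = 0
    for i in range(D):
        ones = sum((v >> i) & 1 for v in vectors)
        if 2 * ones > len(vectors):
            out |= 1 << i
    return out


def hamming(a: int, b: int) -> int:
    """Number of differing bits; smaller means more similar."""
    return bin(a ^ b).count("1")


# Hypothetical codebooks: one random hypervector per visual scene and per velocity.
scenes = {name: random_hv() for name in ("scene_a", "scene_b", "scene_c")}
velocities = {name: random_hv() for name in ("forward", "left", "hover")}
pairs = [("scene_a", "forward"), ("scene_b", "left"), ("scene_c", "hover")]

# One memory record holding every (scene, velocity) pair at once.
memory = bundle([bind(scenes[s], velocities[v]) for s, v in pairs])


def recall(scene: str) -> str:
    """Unbind the scene from memory, then pick the closest known velocity."""
    probe = bind(memory, scenes[scene])
    return min(velocities, key=lambda v: hamming(probe, velocities[v]))


print(recall("scene_b"))  # left
```

With three records bundled, the unbound probe still agrees with the correct velocity on roughly 75% of its bits, versus about 50% for unrelated vectors, so at this dimensionality the nearest match is reliable. Adding more pairs to one memory narrows that margin.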

The principle behind this experiment, letting a machine vision system reference event and reaction data more quickly than if the two data streams were kept separate, could allow robots or autonomous vehicles to predict which action to take when specific visual data is captured, i.e. to anticipate action based on sensory input. Put another way, such machines could imagine future events and think ahead.



About the Author

Dennis Scimeca

Dennis Scimeca is a veteran technology journalist with expertise in interactive entertainment and virtual reality. At Vision Systems Design, Dennis covered machine vision and image processing with an eye toward leading-edge technologies and practical applications for making a better world. Currently, he is the senior editor for technology at IndustryWeek, a partner publication to Vision Systems Design.