Deep learning continues growth in machine vision

Deep learning, a subset of machine learning techniques, has gained considerable traction in popularity and deployment in recent years, with the term “deep” generally referring to the large number of layers in the neural network. As adoption grows, more companies offer deep learning tools, ranging from low-level algorithms that support general research and development to complete software products that execute deep learning for machine vision applications.

Open-source frameworks

Just as OpenCV offers an open-source library of programming functions for computer vision applications, numerous open-source frameworks exist for building and training deep neural networks. These include TensorFlow (www.tensorflow.org), Caffe (caffe.berkeleyvision.org), PyTorch (www.pytorch.org), scikit-learn (www.scikit-learn.org), Keras (www.keras.io), and OpenNN (www.opennn.net), among others.

These software libraries provide complex math tools for developers looking to implement a neural network, convolutional neural network, and other processes to execute artificial intelligence or deep learning tasks, according to David L. Dechow, Principal Vision Systems Architect, Integro Technologies, Inc. (Salisbury, NC, USA; www.integro-tech.com).

“Though these software libraries are general purpose, they can be used to execute more targeted deep learning in machine vision applications,” he says.

One such example appears in the November/December 2019 article, “Automated system inspects radioactive medical imaging product labels” (bit.ly/VSD-RTRC). In this application, a contact image sensor (CIS) linescan camera provides clear images of radiotracer labels for optical character recognition and verification tasks. As part of the system, a neural network running in TensorFlow checks for smudging, tears, and wrinkles on the labels.

Specialized libraries

Numerous companies also offer specialized libraries with pre-developed neural networks. Such libraries offer algorithms that can be used by developers to implement a full deep learning machine vision application without doing neural network and AI research and development beforehand. While these libraries still require a significant amount of coding to execute deep learning applications, they can save integrators time in developing a system.

Euresys (Angleur, Belgium; www.euresys.com), for example, offers the EasySegment (Figure 1) and EasyClassify deep learning libraries, which are available together in the company’s Deep Learning Bundle. EasySegment, according to the company, works in unsupervised mode. After training with good images only, EasySegment can reportedly detect and segment anomalies and defects in images, even in the absence of readily available defective samples. Additionally, the library includes the free Deep Learning Studio application for dataset creation, training, and evaluation.

EasyClassify includes functions for classifier training and image classification and reportedly can detect defective products or sort products into various classes. A developer labels training images as good or bad, or as part of a certain class, and following this training, EasyClassify classifies new images. For a given image, the library returns a list of probabilities indicating the likelihood that the image belongs to each of the classes it has been taught. The library works with as few as one hundred training images per class and, like EasySegment, is compatible with CPU and GPU processing and offered with the Deep Learning Studio application.
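The idea of returning one probability per trained class can be illustrated with a softmax, a common way for a classification network to turn raw scores into probabilities. This is a minimal sketch only: it is not the EasyClassify API, the source does not say how Euresys computes its probabilities, and the function names, class names, and scores below are hypothetical.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(scores, class_names):
    """Return (best_class, {class_name: probability}) for one image."""
    probabilities = softmax(scores)
    by_class = dict(zip(class_names, probabilities))
    best = max(by_class, key=by_class.get)
    return best, by_class

# Hypothetical raw scores for one image; the values are made up.
best, probs = classify([2.0, 0.5, -1.0], ["good", "scratch", "dent"])
```

An application would typically act on the most likely class, or reject the part when no class reaches a chosen confidence level.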

“We have optimized the neural network architecture for industrial applications by integrating and adapting various ideas from state-of-the-art research such as residual connections. This allows us to obtain a product that is both fast and high-performing,” says Antoine Lejeune, Lead Software Engineer, EasyDeepLearning, Euresys. “Additionally, in close collaboration with the University of Louvain, we have developed an innovative training algorithm specifically tailored for unsupervised defect segmentation [EasySegment] in industrial images.”

In its Deep Learning Toolbox for MATLAB, MathWorks (Natick, MA, USA; www.mathworks.com) provides a framework for designing and implementing deep neural networks with algorithms, pre-trained models, and apps. Software capabilities enable developers to train advanced network architectures using custom training loops, automatic differentiation, shared weights, and custom loss functions, according to the company. MATLAB also enables the import of pre-trained networks from TensorFlow and PyTorch through the Open Neural Network Exchange (ONNX), as well as the export of networks trained in MATLAB to other frameworks using ONNX. With other tools from MathWorks it is possible to prepare images, video, and signals for training and testing deep learning models, as well as deploy inference models to CPUs, FPGAs, and GPUs, according to the company.

“As deep learning becomes more prevalent across multiple industries, there is a need to make it broadly available, accessible, and applicable to engineers and scientists with varying specializations,” says Johanna Pingel, deep learning product marketing manager, MathWorks. “With the Deep Learning Toolbox, vision systems engineers can quickly apply deep learning whether they’re a deep learning novice or an expert. With MATLAB, they can use an integrated deep learning workflow from research to prototype to production.”

With the latest update to the Matrox Imaging Library (MIL), Matrox Imaging (Dorval, QC, Canada; www.matrox.com/imaging) expands the capability of its classification module with detection using deep learning and provides users with the ability to train one of the supplied neural networks for a particular application. Detection produces classification maps as opposed to just category scores, as is the case with global classification, according to the company. Training is accomplished programmatically or within the MIL CoPilot interactive environment (Figure 2) using an NVIDIA (Santa Clara, CA, USA; www.nvidia.com) GPU or x64-based CPU, while inference is performed efficiently on a CPU, avoiding the need for specialized GPU hardware.
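The difference between a single category score and a classification map can be sketched in a few lines. The example below is an illustration under stated assumptions, not MIL code: mean tile intensity stands in for a trained network’s score, and all function names are hypothetical.

```python
def mean_intensity(image, top, left, height, width):
    """Stand-in for a trained network: average pixel value of a region."""
    values = [image[r][c]
              for r in range(top, top + height)
              for c in range(left, left + width)]
    return sum(values) / len(values)

def global_score(image):
    """Global classification: one score for the whole image."""
    return mean_intensity(image, 0, 0, len(image), len(image[0]))

def classification_map(image, tile):
    """Detection-style output: one score per tile, arranged as a grid."""
    rows = len(image) // tile
    cols = len(image[0]) // tile
    return [[mean_intensity(image, r * tile, c * tile, tile, tile)
             for c in range(cols)]
            for r in range(rows)]

# A 4x4 image with a bright "defect" in the lower-right corner.
image = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
score = global_score(image)
score_map = classification_map(image, tile=2)
```

The global score says only that something in the image is unusual, while the map shows which tile is responsible.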

“Classification using deep learning keeps proving itself as an effective tool for inspecting things with underlying texture, natural variation, and acceptable deviations,” says Pierantonio Boriero, Director of Product Management, Matrox Imaging.

MVTec (Munich, Germany; www.mvtec.com) offers deep-learning-powered optical character recognition in both of its machine vision software products, HALCON and MERLIC. HALCON, however, provides a wider range of deep learning functionalities, including deep learning-based image classification for assigning images to trained classes, semantic segmentation for localizing trained defect classes with pixel accuracy, and object detection for localizing trained object classes and identifying them with a bounding box. With this, touching or partially overlapped objects are also separated, enabling object counting.
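Counting objects from bounding boxes usually requires discarding duplicate detections of the same object while keeping boxes for neighboring objects that merely touch. The sketch below uses intersection over union (IoU) and non-maximum suppression, a common post-processing step in object detection. Whether HALCON works this way is not stated in the source, and the boxes, scores, and threshold are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0

def non_max_suppression(detections, iou_threshold=0.5):
    """Keep the highest-scoring box among heavily overlapping ones.

    detections is a list of (box, score) pairs.
    """
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) < iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept

# Hypothetical detections: two boxes on one part, one on a touching part.
detections = [
    ((0, 0, 10, 10), 0.9),
    ((1, 1, 11, 11), 0.8),  # near-duplicate of the first box
    ((8, 0, 18, 10), 0.7),  # a different part that slightly overlaps
]
objects = non_max_suppression(detections)
object_count = len(objects)
```

The threshold controls the trade-off: set too low, touching parts merge into one count; set too high, duplicates inflate it.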

Another functionality offered in HALCON is anomaly detection for facilitating the automated detection and segmentation of defects. After training the software with a few good sample images, HALCON can detect various defect types on a standard CPU without previous labeling efforts needed.
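Training on good samples only can be illustrated with a simple statistical stand-in: learn what each pixel normally looks like, then flag pixels that deviate strongly. This is a deliberately simplified sketch, not the deep learning method used by HALCON, and the threshold values and image data are assumptions chosen for illustration.

```python
import statistics

def fit_good_model(good_images):
    """Learn the per-pixel mean and standard deviation from good images.

    Each image is a flat list of grayscale values of equal length.
    """
    pixel_count = len(good_images[0])
    means, stds = [], []
    for i in range(pixel_count):
        values = [img[i] for img in good_images]
        means.append(statistics.mean(values))
        stds.append(statistics.pstdev(values))
    return means, stds

def anomaly_mask(image, means, stds, k=3.0, min_std=1.0):
    """Flag pixels more than k standard deviations from the learned mean.

    min_std avoids a zero threshold where the good images never varied.
    """
    return [abs(p - m) > k * max(s, min_std)
            for p, m, s in zip(image, means, stds)]

# Three hypothetical defect-free images, four pixels each.
good = [
    [100, 100, 100, 100],
    [102, 98, 101, 99],
    [98, 102, 99, 101],
]
means, stds = fit_good_model(good)
mask = anomaly_mask([101, 99, 160, 100], means, stds)
```

No defective image was needed to build the model, and the resulting mask both detects the defect and shows where it is.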

The company also offers its Deep Learning Tool for HALCON (Figure 3), which provides training data labeling functionality for object detection and, since a February 2020 update, for image classification as well. Labeling is done by drawing oriented rectangles around each relevant object while also associating them with class information. To speed up the labeling process, the Deep Learning Tool enables multiple users to work on different parts of the image data set and later merge them into one project.

“At MVTec, we see deep learning as an important complementary technology that extends our existing toolbox of machine vision algorithms,” says Mario Bohnacker, Technical Product Manager, HALCON. “By utilizing deep learning, we offer our customers new, data-driven access to our software.”

He continues, “In the future, we want to further improve the usability of deep learning functionalities. In particular, we want to make black box deep learning more and more transparent, so that its decisions become more comprehensible, thereby increasing confidence in the technology.”

Deep learning is also possible in National Instruments’ (Austin, TX, USA; www.ni.com) LabVIEW software via the company’s Vision Development Module. With this, integrators and developers can deploy deep learning models developed in TensorFlow on NI Linux Real-Time and Windows operating systems. For example, the Vision Development Module enables the deployment of deep learning-based object detection via the Model Importer API and a model pre-trained in TensorFlow (bit.ly/VSD-NILV).

Furthermore, third-party add-ons such as the DLHUB graphical deep learning platform from ANSCENTER (www.anscenter.com) enable the development, training, and evaluation of deep learning models for industrial deployment via a drag-and-drop interface. This tool, according to its developer, targets engineers less familiar with deep learning and Python or C# programming. DLHUB supports various deep learning layer types, including dense, convolution, and pooling layers, and supports advanced layers such as the transfer learning model and the residual neural network layer. Additionally, it supports advanced training algorithms such as AdaDelta, Adam, FSAdaGrad, and RMSProp, and the export of trained models into LabVIEW, C++, and C# for deployment.
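For readers unfamiliar with the training algorithms named above, the following sketch shows the standard Adam update rule applied to a one-variable problem. It is a textbook illustration, not DLHUB code. The hyperparameter defaults are the commonly published ones, and the function being minimized is a made-up example.

```python
import math

def adam_minimize(grad, x0, steps=3000, lr=0.01,
                  beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimize a one-variable function with Adam, given its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # correct the startup bias
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Example: f(x) = (x - 3)^2 has gradient 2 * (x - 3) and its minimum at x = 3.
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
```

In a real network the same update is applied to every weight, with the gradient supplied by backpropagation rather than a hand-written formula.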

Available from Ngene (Yerevan, Armenia; www.ngene.com), the DeepLTK (deep learning toolkit) is another third-party tool for LabVIEW. This tool offers image recognition, object detection, and speech recognition capabilities for LabVIEW developers and helps simplify the process of creating, building, configuring, training, and visualizing deep neural networks in LabVIEW, according to the company. When running on a CPU, DeepLTK does not require external libraries or engines to function.

Stemmer Imaging’s (Puchheim, Germany; www.commonvisionblox.com) Polimago tool in Common Vision Blox (CVB) software also offers machine learning-based pattern recognition and pose estimation. CVB Polimago typically requires 20 to 50 training images per class and approximately 10 minutes to create a classifier from training data. This is possible because CVB Polimago uses an algorithm to generate artificial views of the model to simulate various positions or modified images of a component. In this way, the algorithm can learn the variability of the corresponding pattern, enabling reliable identification at a higher recognition rate with far fewer training images, according to the company. Stemmer Imaging offers CVB Polimago for Windows and Linux, including ARM platforms.
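Generating artificial views from a small number of training images is broadly similar to what is often called data augmentation. The sketch below produces shifted and mirrored copies of a tiny image. The actual transformations used by CVB Polimago are not described in the source, so the choice of shifts and flips, and all function names, are assumptions for illustration.

```python
def shift(image, dx, dy, fill=0):
    """Translate a 2D image by (dx, dy), filling exposed pixels."""
    rows, cols = len(image), len(image[0])
    out = [[fill] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            nr, nc = r + dy, c + dx
            if 0 <= nr < rows and 0 <= nc < cols:
                out[nr][nc] = image[r][c]
    return out

def flip_horizontal(image):
    """Mirror a 2D image left to right."""
    return [row[::-1] for row in image]

def artificial_views(image, max_shift=1):
    """Build shifted and mirrored variants of one training image."""
    views = []
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            shifted = shift(image, dx, dy)
            views.append(shifted)
            views.append(flip_horizontal(shifted))
    return views

# One hypothetical 3x3 training image becomes 18 training samples.
image = [
    [0, 1, 0],
    [1, 1, 0],
    [0, 0, 0],
]
views = artificial_views(image)
```

Each generated view keeps the label of its source image, which is how a few dozen images can stand in for a much larger training set.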

Complete deep learning products

Only a handful of companies offer complete deep learning application products that execute deep learning for machine vision applications. In such implementations, according to Dechow, developers and integrators have an easy-to-use front-end HMI that facilitates collection of images, training of classes, and inspection execution.

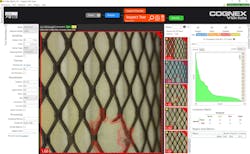

Having acquired both ViDi Systems and, more recently, SUALAB, Cognex (Natick, MA, USA; www.cognex.com) has positioned itself as a leader in such deep learning products. Cognex ViDi software (Figure 4) uses artificial intelligence to improve image analysis in applications where it is difficult to predict the full range of image variations. Using feedback, the software trains the system to distinguish between acceptable variations and defects. Training of a deep learning-based model is accomplished in just minutes, says the company, based on a small sample image set. Cognex ViDi consists of four sets of algorithms: the Red-Analyze, Green-Classify, Blue-Locate, and Blue-Read tools. The Red-Analyze tool includes an option for unsupervised training of pass/fail inspection tasks that assists in applications where few defect samples are available, or where new and unpredictable types of defects can appear, according to Cognex.

Now part of Cognex, SUALAB offers SuaKIT inspection software (Figure 5; bit.ly/VSD-SUA), a library based on actual image data generated from various industrial sites, with segmentation and classification as its primary functions. SuaKIT’s tools are optimized for highly detailed measurements, which are often required in validating high-value electronics projects, as well as pharmaceutical and medical applications. The software also offers an environment for developers to optimize their application for deployment, including features to detect potentially mislabeled images among the hundreds or thousands used in training, and visual heat maps for highlighting features of special interest to the neural network.
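One common way to surface potentially mislabeled training images is to look for cases where a trained model confidently disagrees with the assigned label. The sketch below illustrates that idea only. How SuaKIT detects mislabeled images is not described in the source, and the data layout, threshold, and sample values are hypothetical.

```python
def flag_suspect_labels(samples, threshold=0.9):
    """Return samples whose label conflicts with a confident prediction.

    samples is a list of (image_id, assigned_label, probabilities), where
    probabilities maps each class name to the model's probability.
    """
    suspects = []
    for image_id, label, probabilities in samples:
        predicted = max(probabilities, key=probabilities.get)
        if predicted != label and probabilities[predicted] >= threshold:
            suspects.append((image_id, label, predicted))
    return suspects

# Hypothetical model outputs for three training images.
samples = [
    ("img_001", "good", {"good": 0.97, "defect": 0.03}),
    ("img_002", "good", {"good": 0.04, "defect": 0.96}),  # likely mislabeled
    ("img_003", "defect", {"good": 0.55, "defect": 0.45}),  # unsure, not flagged
]
suspects = flag_suspect_labels(samples)
```

Flagged images are candidates for human review rather than automatic relabeling, since the model itself may be the one that is wrong.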

“We know we’re competing with general purpose open source neural networks,” says John Petry, director of product marketing for vision software at Cognex. “But most automation engineers aren’t neural network PhDs. Our goal is to build deep learning vision systems that let them quickly create and deploy applications for the factory floor, while exceeding the performance of open source alternatives.”

He adds, “With ViDi, we have unique deep learning architecture that’s integrated directly with classic VisionPro machine vision tools. And with SuaKIT, we have algorithms for high-detail applications and advanced optimization. Our goal is to combine the best features of both to keep advancing the state of the art.”

Also developing deep learning software, Laon People (Seongnam-si, Gyeonggi-do, South Korea; www.laonpeople.com) offers its NAVI (new architecture for vision inspection) software suite for artificial intelligence-based vision inspection. Tools include NAVI AI Mercury (classification), NAVI AI Venus (pattern matching), NAVI AI Mars (detection of atypical or fine defects), and NAVI AI OCR (optical character recognition).

Lastly, there exists a class of companies that integrate machine vision deep learning solutions. Such companies do not sell the software as a product, but rather provide a complete custom application typically built upon one of the products or libraries described in this article, says Dechow.

One such example is Cyth Systems (San Diego, CA, USA; www.cyth.com) and its Neural Vision software, which uses deep learning to allow visual inspectors, instead of programmers, to configure the system. Neural Vision enables users with no machine vision experience to inspect and classify products. As the system is fed images of relevant objects and told either what the unique parts are or whether what it is seeing is good or bad, it applies algorithms to learn to identify what is being seen. When shown a range of variation in lighting, shadows, and environments, the system learns which features are important and which are unimportant for identifying a part.

“Our why is to allow artificial intelligence to be shared by a larger audience and allow a larger percentage of the population to benefit from this huge technological leap forward,” says Andy Long, CEO, Cyth Systems.

Another example is Integro Technologies, which uses Cognex ViDi and MVTec HALCON software products and libraries for deep learning applications. Numerous other players exist in this market, including Artemis Vision (Denver, CO, USA; www.artemisvision.com) and Automated Vision Systems Inc. (San Jose, CA, USA; www.autovis.com).

Yet another example is Mariner (Charlotte, NC, USA; www.marinerusa.com), an Industrial Internet of Things and analytics product and services company offering contract subscription deep learning services for machine vision applications with its Spyglass Visual Inspection cloud-native product.

“Designed to address the common problem of unacceptable false positive [reject] rates, Mariner’s product integrates into existing production lines for pass/fail reporting and creates alerts when defect frequencies exceed control limits, while also informing a root cause analysis process and providing quality analytics,” says Phil Morris, CEO and Co-Founder, Mariner.
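Alerting when defect frequencies exceed control limits is a standard statistical process control task. The sketch below computes the upper control limit of a p-chart, a common chart for defect proportions, and checks a sample against it. Mariner’s actual method is not described in the source, and the function names and figures are hypothetical.

```python
import math

def p_chart_upper_limit(baseline_defects, baseline_inspected,
                        sample_size, sigmas=3.0):
    """Upper control limit for the defect proportion of one sample."""
    p_bar = baseline_defects / baseline_inspected  # long-run defect rate
    sigma = math.sqrt(p_bar * (1 - p_bar) / sample_size)
    return p_bar + sigmas * sigma

def should_alert(defects, sample_size, upper_limit):
    """True when a sample's defect rate exceeds the control limit."""
    return defects / sample_size > upper_limit

# Hypothetical baseline: 20 defects in 1,000 parts; samples of 200 parts.
limit = p_chart_upper_limit(20, 1000, sample_size=200)
alert_high = should_alert(15, 200, limit)  # 7.5% defect rate
alert_normal = should_alert(5, 200, limit)  # 2.5% defect rate
```

With these numbers the limit is roughly 5%, so the first sample raises an alert and the second does not.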


About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.