Deep learning enables everyday digital cameras to see around corners

Dave Tokic

Images of objects occluded or obstructed in some way, such as a person standing behind a tree or around a corner, cannot be captured by cameras. Approaches by the research community to date have proved non-line-of-sight (NLOS) imaging possible using specialized equipment requiring ultra-short pulsed laser illumination and detectors with < 10 ps resolution. The temporal ‘echoes’ of the laser pulses bouncing off the occluded object are captured to reconstruct an image of the target. But these setups remain extremely expensive, require laboratory conditions to operate, and are not practical for real-world applications.

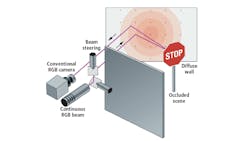

To enable an off-the-shelf machine vision camera to see beyond line-of-sight around obstructions and corners in everyday operating conditions, with high resolution and color, Algolux (Montréal, QC, Canada; www.algolux.com) conducted extensive research in collaboration with Princeton University (Princeton, NJ, USA; www.princeton.edu) and the University of Montréal (Montréal, QC, Canada; www.umontreal.ca/en). Together, the group used a camera with an inexpensive CMOS image sensor, low-cost illumination, and deep learning techniques to achieve non-line-of-sight imaging with performance equal to or better than that of current, specialized academic experiments (Figure 1).

First, the researchers developed an image formation model for steady-state (non-temporal) NLOS imaging, along with an efficient implementation. Based on the model, two approaches were developed. The first was an optimization method derived for the special case of planar scenes (flat objects, signs, etc.) with known reflectance. The second was a learnable architecture, trained on a synthetic dataset created for NLOS imaging, that handles arbitrary scene geometries and reflectance for representative object classes.

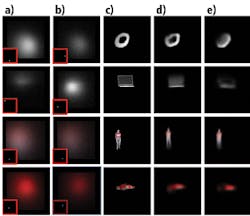

The deep learning encoder-decoder architecture takes in a stack of 5x5 synthetic indirect reflection measurements uniformly sampled on the wall. Figure 2 shows four examples of these 25 indirect reflection maps (Figure 2a), with the red inset boxes indicating the projected light beam position. A convolutional neural network (CNN) variant of the U-Net architecture (http://bit.ly/VSD-UNET; Figure 2b) acts as the backbone structure for predicting and outputting the projections of the unknown scene (Figure 2c). The network contains an 8-layer encoder and decoder, with each encoder layer reducing the image size by a factor of two in each dimension and doubling the number of feature channels.
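To make the encoder's halving-and-doubling rule concrete, the short Python sketch below tabulates the feature-map shape after each of the eight encoder layers. The 256 x 256 input resolution, the 16 starting channels, and the function name `encoder_shapes` are illustrative assumptions, not values from the research; the sketch also applies the doubling rule literally, with no cap on the channel count.

```python
def encoder_shapes(height, width, base_channels, num_layers=8):
    """Return (height, width, channels) after each encoder layer.

    Assumption: the first layer outputs `base_channels` feature channels,
    and every later layer doubles the channel count while halving the
    spatial size in each dimension.
    """
    shapes = []
    h, w, c = height, width, base_channels
    for _ in range(num_layers):
        h, w = h // 2, w // 2      # halve each spatial dimension
        shapes.append((h, w, c))
        c *= 2                     # double the channels for the next layer
    return shapes


# Hypothetical input size and channel count, chosen only for illustration.
for layer, shape in enumerate(encoder_shapes(256, 256, 16), start=1):
    print(f"encoder layer {layer}: {shape}")
```

Under these assumed numbers, eight halvings reduce a 256 x 256 input to a single spatial position, which the decoder then expands back toward the output resolution.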

The deep learning model requires a large training dataset to represent objects with arbitrary shapes, orientations, locations, and reflectance. Handling unknown scenes also requires exploring a very large search space to find the best match to the object(s) in the scene. Because experimental captures at that scale would not have been practical, the team turned to synthetic data generation. However, creating the synthetic training dataset through ray-traced rendering of indirect reflections was also impractical, as it would require minutes per scene. At five minutes per measurement, a training set of 100,000 images would require nearly one year of render time.

Instead, the team developed a novel pipeline using direct rendering to generate the training data. A given point on the wall (i.e., a pixel) integrates light over the hemisphere of incoming light directions. The unit hemisphere is sampled, and direct rendering with orthographic projection (i.e., representing three-dimensional objects in two dimensions) is then used for each sample direction. The sampled views are accumulated to form the measurement.
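The sample-and-accumulate idea can be sketched in a few lines of Python. This is a minimal Monte Carlo illustration under stated assumptions, not the team's OpenGL pipeline: `render_view` is a hypothetical stand-in that returns a single brightness value per direction (the real pipeline renders an orthographic image), directions are drawn uniformly over the hemisphere, and each view is weighted by the cosine of its angle to the wall normal.

```python
import math
import random


def sample_hemisphere(rng):
    """Draw a unit direction uniformly over the hemisphere around +z."""
    z = rng.random()                       # cos(theta), uniform in [0, 1)
    phi = 2.0 * math.pi * rng.random()     # azimuth angle
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)


def accumulate_views(render_view, num_samples, seed=0):
    """Estimate the cosine-weighted integral of render_view over the hemisphere.

    `render_view(direction)` is a hypothetical placeholder for one direct,
    orthographic render along `direction`.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        direction = sample_hemisphere(rng)
        cos_theta = direction[2]           # angle to the wall normal (+z)
        total += render_view(direction) * cos_theta
    # Uniform hemisphere sampling has density 1 / (2 * pi).
    return 2.0 * math.pi * total / num_samples


def constant_view(direction):
    """Toy scene: the same brightness arrives from every direction."""
    return 1.0


estimate = accumulate_views(constant_view, 10_000)
print(estimate)  # should be close to pi for this toy scene
```

For the toy scene the exact answer is pi, which gives a quick sanity check on the sampling and weighting before a real renderer is substituted for `render_view`.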

Hardware-accelerated OpenGL allows for microsecond render times for a single view direction. A full indirect measurement of the reflection off the wall back to the camera (the “third bounce,” as shown by the return arrow in Figure 1) synthesizes in 0.1 seconds for 10,000 hemisphere samples, more than 600x faster than published methods. Three training datasets were used: MNIST Digits for text character shapes (http://bit.ly/VSD-MNIST), ShapeNet for more complex models (car, pillow, laptop, and chair), and SCAPE for human shapes and poses (http://bit.ly/VSD-SCAPE). In total, 58,000 examples were rendered with random location, orientation, and reflectance.
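The render-time figures quoted in the article can be checked with simple arithmetic. The Python sketch below uses only numbers stated in the text (five minutes per ray-traced measurement, 100,000 images, 0.1 seconds per measurement, 10,000 hemisphere samples); the function names are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def ray_traced_days(num_images, minutes_per_image):
    """Total ray-traced render time in days."""
    return num_images * minutes_per_image * 60 / SECONDS_PER_DAY


def per_sample_microseconds(seconds_per_measurement, num_samples):
    """Average direct-render time per hemisphere sample, in microseconds."""
    return seconds_per_measurement / num_samples * 1e6


print(round(ray_traced_days(100_000, 5)))           # 347
print(round(per_sample_microseconds(0.1, 10_000)))  # 10
```

The first result, about 347 days, matches the roughly one year of ray tracing cited above. The second, about 10 microseconds per view direction, is consistent with the microsecond-scale render times attributed to hardware-accelerated OpenGL.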

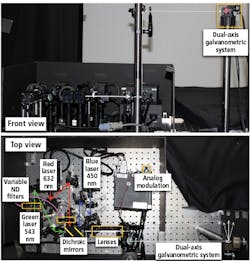

The researchers constructed an inexpensive test setup using an industrial camera and a laser-based illuminator. An Allied Vision (Stadtroda, Germany; www.alliedvision.com) Prosilica GT 1930C camera with a 2.3 MPixel IMX174 color CMOS sensor from Sony (Tokyo, Japan; www.sony.com) captured images in the setup. The illuminator consisted of three continuous red, green, and blue 200 mW lasers from Coherent (Santa Clara, CA, USA; www.coherent.com), whose beams were made collinear with two soda-lime hot/cold dichroic mirrors (FM02R and FM04R) from Thorlabs (Newton, NJ, USA; www.thorlabs.com) to produce a collimated RGB light beam. Although inexpensive RGB laser modules with a very small footprint are commercially available, this setup was used to provide more accurate control of the light source for the experiments (Figure 3).

Both simulated and experimental validation were performed with setup and scene specifications identical to those used in previous time-resolved methods. The results showed the method works across objects with different reflectance and shapes.

Assessed on unseen synthetic data from MNIST Digits, ShapeNet, and the SCAPE human datasets, the learned neural network model ran at a 32-fps reconstruction rate. Figure 4 shows two examples of indirect reflection maps and their corresponding light positions. These qualitative results show that the model precisely localizes objects and recovers accurate reflectance for large objects. Although recovery for smaller objects with diffuse reflectance becomes an extremely challenging task, the method still recovers coarse object geometry and reflectance.

Figure 5 shows three planar reconstruction examples of retroreflective and diffuse targets acquired using the experimental setup. The optimization method achieves accurate NLOS reconstruction of the object’s geometry and reflectance from the spatial variations in the large set of captured indirect reflections at almost interactive times of about 1 second. While the dominating retroreflective component returns to the virtual source position, a residual diffuse component remains and appears as a halo-like artifact in the reconstruction. Though even more apparent for the painted road sign, the diffuse halo still does not prohibit recovery of high-quality geometry and reflectance for these objects.

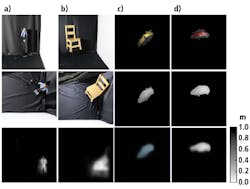

Figure 6 shows reconstruction results for diffuse objects without requiring planar scene geometry. The results demonstrate that the learned neural network, trained entirely on synthetic data, generalizes to these experimental captures. The character-shaped objects on the left are cutouts of diffuse white foam board, comparable to the prior validation scenes. The data-driven method accurately recovers shape and location on the wall for these large characters from their diffuse indirect reflections. Though the smaller complex objects on the right have substantially dimmer diffuse reflections, the method still recovers rough shape and position. Even the mannequin figurine, which is only 3 cm wide, is recovered.

Finally, the team showed this approach can recover depth of complex, occluded scenes. Using the same neural network architecture as before, but with depth maps now as labels, the retrained model recovers reasonable object depth—validated in Figure 7 for both synthetic and experimental measurements.

In summary, the results show that deep learning, conventional RGB cameras, and continuous illumination enable fast color imaging of objects outside the direct line of sight, in contrast to recent costly and complex methods that need pulsed light and picosecond-resolution measurements.

These results open promising directions for future research, such as exploiting the multiple interreflections in the hidden scene, since every surface in a scene can help illuminate further hidden objects and carry their reflected light back to the sensor. For example, light bouncing off a car around a corner could then bounce off a hidden wall to image the back of the car or a person hiding behind it.

Dave Tokic is Vice President of Marketing and Strategic Partnerships at Algolux (Montréal, QC, Canada; www.algolux.com).
